Hypothesis Testing with the Binomial Distribution


Hypothesis Testing

To carry out a hypothesis test with the binomial distribution, we calculate the probability, $p$, of the observed event or any more extreme event happening, assuming the null hypothesis is true. We compare this $p$-value to the level of significance $\alpha$. If $p>\alpha$ then we do not reject the null hypothesis. If $p\leq\alpha$ we reject the null hypothesis in favour of the alternative hypothesis.

Worked Example

A coin is tossed twenty times, landing on heads six times. Perform a hypothesis test at a $5$% significance level to see if the coin is biased.

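First, we need to write down the null and alternative hypotheses. In this case the null hypothesis is that the coin is fair, $\mathrm{P}[\text{heads}] = 0.5$, and the alternative hypothesis is that the coin is biased in favour of tails, $\mathrm{P}[\text{heads}] < 0.5$.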

The important thing to note here is that we only need a one-tailed test as the alternative hypothesis says “in favour of tails”. A two-tailed test would be the result of an alternative hypothesis saying “The coin is biased”.

We need to calculate more than just the probability that it lands on heads $6$ times. If it landed on heads fewer than $6$ times, that would be even more evidence that the coin is biased in favour of tails. Consequently we need to add up the probability of it landing on heads $0$ times, $1$ time, $2$ times, $\ldots$ all the way up to $6$ times. Although a calculation is possible, it is much quicker to use the cumulative binomial distribution table. Writing $X$ for the number of heads, this gives $\mathrm{P}[X\leq 6] = 0.058$.

We are asked to perform the test at a $5$% significance level. This means, if there is less than $5$% chance of getting less than or equal to $6$ heads then it is so unlikely that we have sufficient evidence to claim the coin is biased in favour of tails. Now note that our $p$-value $0.058>0.05$ so we do not reject the null hypothesis. We don't have sufficient evidence to claim the coin is biased.

But what if the coin had landed on heads just $5$ times? Again we need to read from the cumulative tables for the binomial distribution, which show $\mathrm{P}[X\leq 5] = 0.021$. Since $0.021<0.05$, we would have rejected the null hypothesis in favour of the alternative hypothesis. So the point at which we switch from not rejecting the null hypothesis to rejecting it is when we obtain $5$ heads. This means that $5$ is the critical value.
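The table look-ups in this example can also be checked numerically. The following is a minimal sketch using only the Python standard library; the helper names `binom_pmf` and `binom_cdf` are illustrative choices, not part of the original page.

```python
from math import comb

def binom_pmf(k, n, p):
    """P(X = k) for X ~ B(n, p)."""
    return comb(n, k) * p**k * (1 - p)**(n - k)

def binom_cdf(k, n, p):
    """P(X <= k): sum the probabilities of 0, 1, ..., k successes."""
    return sum(binom_pmf(i, n, p) for i in range(0, k + 1))

n, p0, alpha = 20, 0.5, 0.05      # 20 tosses, fair coin under the null, 5% level

p_six = binom_cdf(6, n, p0)       # p-value for the observed 6 heads
p_five = binom_cdf(5, n, p0)      # p-value had we seen 5 heads

# Critical value: the largest number of heads whose lower-tail
# probability is still at or below the significance level.
critical_value = max(k for k in range(n + 1) if binom_cdf(k, n, p0) <= alpha)

print(round(p_six, 3), round(p_five, 3), critical_value)  # 0.058 0.021 5
```

The sum starts at zero heads, which is why the lower tail includes the case where the coin never lands on heads.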


Binomial Hypothesis Test

When calculating probabilities using the binomial distribution, we can calculate these probabilities for an individual value, \(P(X = a)\), or for a cumulative value, \(P(X<a), \space P(X\leq a), \space P(X\geq a)\).

Millions of flashcards designed to help you ace your studies

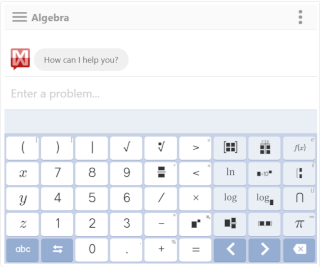

Need help? Meet our AI Assistant

Need help with Binomial Hypothesis Test? Ask our AI Assistant

Review generated flashcards

to start learning or create your own AI flashcards

Start learning or create your own AI flashcards

Vaia Editorial Team


In hypothesis testing, we use these calculated probabilities to decide whether or not to reject a hypothesis.

We will be focusing on regions of the binomial distribution; therefore, we are looking at cumulative values.

Types of hypotheses

There are two main types of hypotheses:

The null hypothesis (\(H_0\)) is the hypothesis we assume to be true, and it assumes there is no difference between certain characteristics of a population. Any difference is purely down to chance.

The alternative hypothesis (\(H_1\)) is the hypothesis we look for evidence to support using the data we have been given.

We can either:

Fail to reject the null hypothesis OR

Reject the null hypothesis in favour of the alternative hypothesis.

What are the steps to undertake a hypothesis test?

There are some key terms we need to understand before we look at the steps of hypothesis testing:

Critical value – this is the value where we go from not rejecting to rejecting the null hypothesis.

Critical region – the set of values for which we reject the null hypothesis.

Significance level – the probability of rejecting the null hypothesis when it is in fact true, usually given as a percentage. The probability of landing in the critical region should be as close to the significance level as possible without exceeding it.

One-tailed test – the alternative hypothesis states that the probability is either greater than, or less than, the value in the null hypothesis.

Two-tailed test – the alternative hypothesis states only that the probability is not equal to the value in the null hypothesis.

So when we undertake a hypothesis test, generally speaking, these are the steps we use:

STEP 1 – Establish a null and alternative hypothesis.

STEP 2 – Assign probabilities to our null and alternative hypotheses, using the values stated in the question.

STEP 3 – Write out our binomial distribution, \(X \sim B(n, p)\).

STEP 4 – Calculate probabilities using the binomial distribution. (Hint: we do not need to use the long-winded formula to calculate our probabilities. On the Casio Classwiz calculator, we can go to Menu -> Distribution -> Binomial CD and enter n as the sample size, p as the probability, and X as the value we are testing.)

STEP 5 – Check against the significance level (whether the calculated probability is greater than or less than the significance level).

STEP 6 – Reject or fail to reject the null hypothesis.
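The six steps can be sketched as one small Python function. This is an illustrative sketch rather than a standard library routine: the name `binomial_test`, its arguments, and the `"less"`/`"greater"`/`"two-sided"` labels are assumptions made for this example. For a two-tailed test it follows the convention used on this page of comparing the smaller tail with half the significance level.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ B(n, p); the empty sum gives 0 when k < 0."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(0, k + 1))

def binomial_test(observed, n, p0, alternative, alpha=0.05):
    """Return (tail probability, reject H0?) for X ~ B(n, p0) under H0."""
    lower = binom_cdf(observed, n, p0)          # P(X <= observed)
    upper = 1 - binom_cdf(observed - 1, n, p0)  # P(X >= observed)
    if alternative == "less":                   # H1: p < p0
        tail, threshold = lower, alpha
    elif alternative == "greater":              # H1: p > p0
        tail, threshold = upper, alpha
    elif alternative == "two-sided":            # H1: p != p0
        tail, threshold = min(lower, upper), alpha / 2
    else:
        raise ValueError("alternative must be 'less', 'greater' or 'two-sided'")
    return tail, tail <= threshold

# 6 heads in 20 tosses, testing H1: p < 0.5 at the 5% level.
tail, reject = binomial_test(6, 20, 0.5, "less")
print(round(tail, 3), reject)  # 0.058 False
```

The function returns the tail probability as well as the decision, so the comparison in STEP 5 stays visible.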

Let's look at a few examples to explain what we are doing.

One-tailed test example

As stated above, a one-tailed hypothesis test is one where the alternative hypothesis states that the probability is either greater than or less than the value in the null hypothesis.

A researcher is investigating whether people can identify the difference between Diet Coke and full-fat coke. He suspects that people are guessing. 20 people are selected at random, and 14 make a correct identification. He carries out a hypothesis test.

a) Briefly explain why the null hypothesis should be \(H_0: p = 0.5\), where p is the probability that a person makes the correct identification.

b) Complete the test at the 5% significance level.
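The page does not show a worked solution here, so the following is a hedged sketch of one way to complete part b). It assumes \(X \sim B(20, 0.5)\) under the null hypothesis (guessing between two drinks gives a one-in-two chance of being right) and a one-tailed alternative \(H_1: p > 0.5\), so that the relevant probability is \(P(X \geq 14)\).

```python
from math import comb

n, p0, observed, alpha = 20, 0.5, 14, 0.05

# Upper-tail probability P(X >= 14): 14 or more correct identifications
# out of 20 if everyone is simply guessing.
p_value = sum(comb(n, k) * p0**k * (1 - p0)**(n - k)
              for k in range(observed, n + 1))

reject_null = p_value <= alpha
print(round(p_value, 4), reject_null)  # 0.0577 False
```

Under these assumptions the probability is above 5%, so there is insufficient evidence to reject the hypothesis that people are guessing.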

Two-tailed test example

In a two-tailed test, the alternative hypothesis states only that the probability is not equal to the value in the null hypothesis.

A coffee shop provides free espresso refills. The probability that a randomly chosen customer uses these refills is stated to be 0.35. A random sample of 20 customers is chosen, and 9 of them have used the free refills.

Carry out a hypothesis test to a 5% significance level to see if the probability that a randomly chosen customer uses the refills is different to 0.35.

So our key difference with two-tailed tests is that we compare the tail probability to half the significance level rather than the full significance level.
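A sketch of the refill test under stated assumptions: \(X \sim B(20, 0.35)\) under the null hypothesis, and because the observed 9 is above the expected count of \(20 \times 0.35 = 7\), the upper tail \(P(X \geq 9)\) is the one compared with half the significance level. The variable names are illustrative.

```python
from math import comb

n, p0, observed, alpha = 20, 0.35, 9, 0.05

def binom_pmf(k):
    """P(X = k) for X ~ B(20, 0.35)."""
    return comb(n, k) * p0**k * (1 - p0)**(n - k)

expected_count = n * p0  # about 7, so 9 lies in the upper tail

# Upper-tail probability P(X >= 9).
upper_tail = sum(binom_pmf(k) for k in range(observed, n + 1))

# Two-tailed test: compare with alpha / 2 = 0.025.
reject_null = upper_tail <= alpha / 2
print(round(upper_tail, 3), reject_null)
```

The tail probability comes out well above 0.025, so on these assumptions the stated refill probability of 0.35 is not rejected.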

Critical values and critical regions

Remember from earlier that critical values are the values at which we move from not rejecting to rejecting the null hypothesis. A binomial distribution is a discrete distribution; therefore, our critical value has to be an integer.

You have a large number of statistical tables in the formula booklet that can help us find these; however, because the distribution is discrete, the tail probabilities will rarely match the significance level exactly.

Therefore the best way to find critical values and critical regions is to use a calculator with trial and error until we find an acceptable value:

STEP 1 - Try values until we get to a point where, for two consecutive values, one tail probability is above the significance level and one is below.

STEP 2 - The one with the probability below the significance level is the critical value.

STEP 3 - The critical region is the critical value together with all more extreme values (greater than it for an upper tail, less than it for a lower tail).

Let's look at this through a few examples.

Worked examples for critical values and critical regions

A mechanic is checking to see how many faulty bolts he has. He is told that 30% of the bolts are faulty. He has a sample of 25 bolts. He believes that less than 30% are faulty. Calculate the critical value and the critical region.

Let's use the above steps to help us out.
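The question does not state a significance level, so the sketch below assumes 5% purely for illustration. It models the number of faulty bolts as \(X \sim B(25, 0.3)\) and tests \(H_1: p < 0.3\), which puts the critical region in the lower tail. The loop plays the role of the calculator trial and error.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ B(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(0, k + 1))

n, p0 = 25, 0.3
alpha = 0.05  # assumed significance level; not given in the question

# Walk upwards from 0 and keep the last value whose lower-tail
# probability is still at or below the significance level.
critical_value = None
for k in range(n + 1):
    if binom_cdf(k, n, p0) <= alpha:
        critical_value = k
    else:
        break

print(critical_value)                             # 3
print(round(binom_cdf(critical_value, n, p0), 4)) # 0.0332
```

With these assumptions the critical value is 3 and the critical region is \(X \leq 3\), since \(P(X \leq 4)\) is already above 5%.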

A teacher believes that 40% of the students watch TV for two hours a day. A student disagrees and believes that students watch either more or less than two hours. In a sample of 30 students, calculate the critical regions.

As this is a two-tailed test, there are two critical regions, one on the lower end and one on the higher end. Also, remember the probability we are comparing with is that of half the significance level.
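A sketch for the two critical regions, again assuming a 5% significance level (the question does not give one), so that each tail is compared with 2.5%. The model is \(X \sim B(30, 0.4)\); the helper names are illustrative.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ B(n, p); the empty sum gives 0 when k < 0."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(0, k + 1))

n, p0 = 30, 0.4
alpha = 0.05          # assumed significance level; not given in the question
half = alpha / 2      # each tail gets half of the significance level

# Lower critical value: largest k with P(X <= k) <= 2.5%.
lower_cv = max(k for k in range(n + 1) if binom_cdf(k, n, p0) <= half)

# Upper critical value: smallest k with P(X >= k) <= 2.5%.
upper_cv = min(k for k in range(n + 1)
               if 1 - binom_cdf(k - 1, n, p0) <= half)

print(lower_cv, upper_cv)  # 6 18
```

Under these assumptions the critical regions are \(X \leq 6\) and \(X \geq 18\).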

Binomial Hypothesis Test - Key takeaways

- A binomial hypothesis test uses the binomial distribution to decide whether or not to reject a null hypothesis.

- A null hypothesis is what we assume to be happening.

- If the data give sufficient evidence against the null hypothesis, we reject it in favour of the alternative hypothesis.

- We use binomial CD on the calculator to help us shortcut calculating the probability values.

- The critical value is the value where we start rejecting the null hypothesis.

- The critical region is the critical value together with all more extreme values, either below or above it.

- Two-tailed tests contain two critical regions and critical values.


Frequently Asked Questions about Binomial Hypothesis Test

How many samples do you need for the binomial hypothesis test?

There isn't a fixed sample size. Whatever sample size you are given is used as n in \(X \sim B(n, p)\).

What is the null hypothesis for a binomial test?

The null hypothesis is what we assume is true before we conduct our hypothesis test.

What does a binomial test show?

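It shows how likely the observed number of successes, or a more extreme number, would be if the null hypothesis were true, for an experiment with two fixed outcomes.

What is the p value in the binomial test?

The p value is the probability, assuming the null hypothesis is true, of obtaining a result at least as extreme as the one observed.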


How to Do Hypothesis Testing with Binomial Distribution

A hypothesis test has the objective of testing different results against each other. You use them to check a result against something you already believe is true. In a hypothesis test, you’re checking if the new alternative hypothesis \(H_A\) would challenge and replace the already existing null hypothesis \(H_0\).

Hypothesis tests are either one-sided or two-sided. In a one-sided test, the alternative hypothesis is left-sided with \(p < p_0\) or right-sided with \(p > p_0\). In a two-sided test, the alternative hypothesis is \(p \neq p_0\). In all three cases, \(p_0\) is the pre-existing probability of what you’re comparing, and \(p\) is the probability you are going to find.

Note! In hypothesis testing, you calculate probabilities assuming the null hypothesis is true, and use them to decide whether the data support the alternative hypothesis.

Hypothesis Testing Binomial Distribution

For example, you would have a reason to believe that a high observed value of \(p\) makes the alternative hypothesis \(H_A: p > p_0\) seem reasonable.

There is a drug on the market that you know cures 85% of all patients. A company has come up with a new drug they believe is better than what is already on the market. This new drug has cured 92 of 103 patients in tests. Determine if the new drug is really better than the old one.

This is a classic case of hypothesis testing by binomial distribution. You now follow the recipe above to answer the task and select a 5% level of significance since it is not a question of medication for a serious illness.

The alternative hypothesis in this case is that the new drug is better. The reason for this is that you only need to know if you are going to approve it for sale, and thus the new drug must be better: \(H_0: p = 0.85\) against \(H_A: p > 0.85\).

Under the null hypothesis, \(P(X \geq 92) \approx 0.136\), where \(X \sim B(103, 0.85)\). This result indicates that there is a 13.6% chance that 92 or more of the 103 patients would be cured with the old medicine.

Since 0.136 is greater than the 0.05 level of significance, \(H_0\) cannot be rejected. The new drug does not enter the market.
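The tail probability quoted in this example can be reproduced with a short calculation. This sketch assumes the number of cured patients follows \(X \sim B(103, 0.85)\) under the null hypothesis and that the p-value is the upper tail \(P(X \geq 92)\); the variable names are illustrative.

```python
from math import comb

n, p0, cured, alpha = 103, 0.85, 92, 0.05

# P(X >= 92): probability that the old cure rate of 85% would produce
# 92 or more cures among 103 patients.
p_value = sum(comb(n, k) * p0**k * (1 - p0)**(n - k)
              for k in range(cured, n + 1))

reject_null = p_value <= alpha
print(round(p_value, 3), reject_null)
```

The result is close to the 13.6% quoted in the text and well above 5%, which is why the null hypothesis is not rejected.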

If the p value had been less than the level of significance, you would have rejected \(H_0\) and concluded that the new drug, represented by the alternative hypothesis, is better, with the result being statistically significant at the chosen level.

Hypothesis Testing Using the Binomial Distribution

- First Online: 04 June 2021

- Alese Wooditch,

- Nicole J. Johnson,

- Reka Solymosi,

- Juanjo Medina Ariza &

- Samuel Langton

Many people involved in criminology and criminal justice research spend time making predictions about populations in the real world. These predictions tend to be based on a theoretical framework and are formally stated as hypotheses in order to answer a specific research question. Using inferential statistics (see Chap. 6), we can test to what extent our data support these hypotheses and provide empirical evidence to support (or reject) our expectations in R. This chapter uses a simulated dataset of results from a crime reduction intervention for at-risk youth to explore how the binomial distribution allows us to generalize from a sample of 100 participants in a study to the wider population.


Author information

Authors and affiliations.

Department of Criminal Justice, Temple University, Philadelphia, PA, USA

Alese Wooditch & Nicole J. Johnson

School of Social Sciences, University of Manchester, Manchester, UK

Reka Solymosi

Department of Criminal Law and Crime Science, School of Law, University of Seville, Seville, Spain

Juanjo Medina Ariza

Netherlands Institute for the Study of Crime and Law Enforcement, Amsterdam, The Netherlands

Samuel Langton


Binomial distribution: The probability or sampling distribution for an event that has only two possible outcomes.

Directional hypothesis: A research hypothesis that indicates a specific type of outcome by specifying the nature of the relationship that is expected.

External validity: The extent to which a study sample is reflective of the population from which it is drawn. A study is said to have high external validity when the sample used is representative of the population to which inferences are made.

Non-directional hypothesis: A research hypothesis that does not indicate a specific type of outcome, stating only that there is a relationship or a difference.

Non-parametric tests: Tests that do not make an assumption about the distribution of the population, also called distribution-free tests.

Null hypothesis: A statement that reduces the research question to a simple assertion to be tested by the researcher. The null hypothesis normally suggests that there is no relationship or no difference.

Parametric tests: Tests that make an assumption about the shape of the population distribution.

Type I error: Also known as alpha error and false-positive. The mistake made when a researcher rejects the null hypothesis on the basis of a sample statistic (i.e., claiming that there is a relationship) when in fact the null hypothesis is true (i.e., there is actually no such relationship in the population).


Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Wooditch, A., Johnson, N.J., Solymosi, R., Medina Ariza, J., Langton, S. (2021). Hypothesis Testing Using the Binomial Distribution. In: A Beginner’s Guide to Statistics for Criminology and Criminal Justice Using R. Springer, Cham. https://doi.org/10.1007/978-3-030-50625-4_8


DOI : https://doi.org/10.1007/978-3-030-50625-4_8

Published : 04 June 2021

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-50624-7

Online ISBN : 978-3-030-50625-4

eBook Packages : Law and Criminology Law and Criminology (R0)


Hypothesis Testing

Measuring the consistency between a model and data

Classical statistics features two primary methods for using a sample of data to make an inference about a more general process. The first is the confidence interval, which expresses the uncertainty in an estimate of a population parameter. The second classical method of generalization is the hypothesis test.

The hypothesis test takes a more active approach to reasoning: it posits a specific explanation for how the data could be generated, then evaluates whether or not the observed data is consistent with that model. The hypothesis test is one of the most common statistical tools in the social and natural sciences, but the reasoning involved can be counter-intuitive. Let’s introduce the logic of a hypothesis test by looking at another criminal case that drew statisticians into the mix.

Example: The United States vs Kristen Gilbert

In 1989, fresh out of nursing school, Kristen Gilbert got a job at the VA Medical Center in Northampton, Massachusetts, not far from where she grew up. Within a few years, she became admired for her skill and competence.

Gilbert’s skill was on display whenever a “code blue” alarm was sounded. This alarm indicates that a patient has gone into cardiac arrest and must be addressed quickly by administering a shot of epinephrine to restart the heart. Gilbert developed a reputation for her steady hand in these crises.

By the mid-1990s, however, the other nurses started to grow suspicious. There seemed to be a few too many code blues, and a few too many deaths, during Gilbert’s shifts. The staff brought their concerns to the VA administration, who brought in a statistician to evaluate the data.

The data that the VA provided to the statistician contained the number of deaths at the medical center over the previous 10 years, broken out by the three shifts of the day: night, daytime, and evening. As part of the process of exploratory data analysis, the statistician constructed a plot.

This visualization reveals several striking trends. Between 1990 and 1995, there were dramatically more deaths than the years before and after that interval. Within that time span, it was the evening shift that had most of the deaths. The exception is 1990, when the night and daytime shifts had the most deaths.

So when was Gilbert working? She began working in this part of the hospital in March 1990 and stopped working in February 1996. Her shifts throughout that time span? The evening shifts. The one exception was 1990, when she was assigned to work the night shift.

This evidence is compelling in establishing an association between Gilbert and the increase in deaths. When the district attorney brought a case against Gilbert in court, this was the first line of evidence they provided. In a trial, however, there is a high burden of proof.

Could there be an alternative explanation for the trend found in this data?

The role of random chance

Suppose for a moment that the occurrence of deaths at the hospital had nothing to do with Gilbert being on shift. In that case we would expect that the proportion of shifts with a death would be fairly similar when comparing shifts where Gilbert was working and shifts where she was not. But we wouldn’t expect those proportions to be exactly equal. It’s reasonable to think that a slightly higher proportion of Gilbert’s shifts could have had a death just due to random chance, not due to anything malicious on her part.

So just how different were these proportions in the data? The plot above shows data from 1,641 individual shifts, on which three different variables were recorded: the shift number, whether or not there was a death on the shift, and whether or not Gilbert was working that shift.

Here are the first 10 observations.

Using this data frame, we can calculate the sample proportion of shifts where Gilbert was working (257) that had a death (40) and compare them to the sample proportion of shifts where Gilbert was not working (1384) that had a death (34).

\[ \hat{p}_{gilbert} - \hat{p}_{no\_gilbert} = \frac{40}{257} - \frac{34}{1384} = .155 - .024 = .131 \]

A note on notation: it’s common to use \(\hat{p}\) (“p hat”) to indicate that a proportion has been computed from a sample of data.

A difference of .131 seems dramatic, but is that within the bounds of what we might expect just due to chance? One way to address this question is to phrase it as: if in fact the probability of a death on a given shift is independent of whether or not Gilbert is on the shift, what values would we expect for the difference in observed proportions?

We can answer this question by using simulation. To simulate a world in which deaths are independent of Gilbert, we can

1. Shuffle (or permute) the values in the death variable in the data frame to break the link between that variable and the staff variable.

2. Calculate the resulting difference in proportion of deaths in each group.

The rationale for shuffling values in one of the columns is that if in fact those two columns are independent of one another, then it was just random chance that led to a value of one variable landing in the same row as the value of the other variable. It could just as well have been a different pairing. Shuffling captures another example of the arbitrary pairings that we could have observed if the two variables were independent of one another.

By repeating steps 1 and 2 many many times, we can build up the full distribution of the values that this difference in proportions could take.
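The shuffling procedure can be sketched in a few lines of Python. The original data frame is not reproduced here, so this sketch rebuilds an equivalent one from the counts given earlier (257 Gilbert shifts with 40 deaths, 1,384 other shifts with 34 deaths). The variable names, the 500 repetitions, and the fixed random seed are choices made for this illustration.

```python
import random

# Rebuild the two columns from the reported counts (row order is arbitrary).
staff = [True] * 257 + [False] * 1384                            # Gilbert on shift?
death = [True] * 40 + [False] * 217 + [True] * 34 + [False] * 1350

def diff_in_proportions(staff, death):
    """Proportion of Gilbert shifts with a death minus that of other shifts."""
    gilbert_deaths = sum(d for s, d in zip(staff, death) if s)
    other_deaths = sum(d for s, d in zip(staff, death) if not s)
    return gilbert_deaths / staff.count(True) - other_deaths / staff.count(False)

observed = diff_in_proportions(staff, death)

rng = random.Random(0)        # fixed seed so the sketch is reproducible
shuffled = death[:]           # copy, so the original column is untouched
null_stats = []
for _ in range(500):
    rng.shuffle(shuffled)                                       # step 1: break the link
    null_stats.append(diff_in_proportions(staff, shuffled))     # step 2: recompute

print(round(observed, 3))                    # 0.131
print(max(abs(s) for s in null_stats))       # largest simulated difference
```

Each pass through the loop produces one value of the statistic from a world where deaths and staffing are unrelated; the list `null_stats` is the simulated null distribution.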

As expected, in a world where these two variables are independent of one another, we would expect a difference in proportions around zero. Sometimes, however, that statistic might reach values of +/- .01 or .02 or rarely .03. In the 500 simulated statistics shown above, however, none of them reached beyond +/- .06.

So if that’s the range of statistics we would expect in a world where random chance is the only mechanism driving the difference in proportions, how does it compare to the world that we actually observed? The statistic that we observed in the data was .131, more than twice the value of the most extreme statistic observed above.

To put that into perspective, we can plot the observed statistic as a vertical line on the same plot.

The method used above shows that observing a difference of .131 would be incredibly unlikely if in fact deaths were independent of Gilbert being on shift. On this point, the statisticians on the case agreed that they could rule out random chance as an explanation for this difference. Something else must have been happening.

Elements of a Hypothesis Test

The logic used by the statisticians in the Gilbert case is an example of a hypothesis test. There are a few key components common to every hypothesis test, so we’ll lay them out one-by-one.

A hypothesis test begins with the assertion of a null hypothesis.

It is common for the null hypothesis to be that nothing interesting is happening or that it is business as usual, a hypothesis that statisticians try to refute with data. In the Gilbert case, this could be described as “The occurrence of a death is independent of the presence of Gilbert” or “The probability of death is the same whether or not Gilbert is on shift” or “The difference in the probability of death is zero, when comparing shifts where Gilbert is present to shifts where Gilbert is not present”. Importantly, the null model describes a possible state of the world, therefore the latter two versions are framed in terms of parameters (\(p\) for proportions) instead of observed statistics (\(\hat{p}\)).

The hypothesis that something indeed is going on is usually framed as the alternative hypothesis.

In the Gilbert case, the corresponding alternative hypothesis is that “The occurrence of a death is dependent on the presence of Gilbert” or “The probability of death is different whether or not Gilbert is on shift” or “The difference in the probability of death is non-zero, when comparing shifts where Gilbert is present to shifts where Gilbert is not present”.

In order to determine whether the observed data is consistent with the null hypothesis, it is necessary to compress the data down into a single statistic.

In Gilbert’s case, a difference in two proportions, \(\hat{p}_1 - \hat{p}_2\) is a natural test statistic and the observed test statistic was .131.

It’s not enough, though, to just compute the observed statistic. We need to know how likely this statistic would be in a world where the null hypothesis is true. This probability is captured in the notion of a p-value.

If the p-value is high, then the data is consistent with the null hypothesis. If the p-value is very low, however, then the statistic that was observed would be very unlikely in a world where the null hypothesis was true. As a consequence, the null hypothesis can be rejected as a reasonable model for the data.

The p-value can be estimated using the proportion of statistics from the simulated null distribution that are as or more extreme than the observed statistic. In the simulation for the Gilbert case, there were 0 statistics greater than .131, so the estimated p-value is zero.
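As a small illustration of this estimate, the function below counts the share of simulated statistics that are as or more extreme than the observed one. The function name and the toy list of simulated values are made up for this sketch, and "extreme" is taken to mean large in absolute value, which matches a two-sided alternative.

```python
def estimate_p_value(null_stats, observed):
    """Share of simulated statistics at least as extreme as the observed one."""
    extreme = sum(1 for s in null_stats if abs(s) >= abs(observed))
    return extreme / len(null_stats)

# Toy null distribution (illustrative values only, not the case data).
toy_null = [-0.02, 0.0, 0.01, 0.03, -0.01]

print(estimate_p_value(toy_null, 0.131))  # 0.0
print(estimate_p_value(toy_null, 0.01))   # 0.8
```

An estimate of exactly zero reflects the finite number of simulations: with 500 shuffles, the smallest non-zero estimate possible is 1/500.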

What a p-value is not

The p-value has been called the most used as well as the most abused tool in statistics. Here are three common misinterpretations to be wary of.

The p-value is the probability that the null hypothesis is true (FALSE!)

This is one of the most common confusions about p-values. Graphically, a p-value corresponds to the area in the tail of the null distribution that is more extreme than the observed test statistic. That null distribution can only be created if you assume that the null hypothesis is true. The p-value is fundamentally a conditional probability of observing the statistic (or more extreme) given the null hypothesis is true. It is flawed reasoning to start with an assumption that the null hypothesis is true and arrive at a probability of that same assumption.

A very high p-value suggests that the null hypothesis is true (FALSE!)

This interpretation is related to the first one but can lead to particularly wrongheaded decisions. One way to keep your interpretation of a p-value straight is to recall the distinction made in the US court system. A trial proceeds under the assumption that the defendant is innocent. The prosecution presents evidence of guilt. If the evidence is convincing, the jury will render a verdict of “guilty”. If the evidence is not convincing (that is, the p-value is high), then the jury will render a verdict of “not guilty”, not a verdict of “innocent”.

Imagine a setting where the prosecution has presented no evidence at all. That by no means indicates that the defendant is innocent, just that there was insufficient evidence to establish guilt.

The p-value is the probability of the data (FALSE!)

This statement has a semblance of truth to it but is missing an important qualifier. The probability is calculated based on the null distribution, which requires the assumption that the null hypothesis is true. It’s also not quite specific enough. Most often p-values are calculated as probabilities of test statistics, not probabilities of the full data sets.

Another more basic check on your understanding of a p-value: a p-value is a (conditional) probability, therefore it must be a number between 0 and 1. If you ever find yourself computing a p-value of -6 or 3.2, be sure to pause and revisit your calculations!

One test, many variations

The hypothesis testing framework laid out above is far more general than just this particular example from the case of Kristen Gilbert where we computed a difference in proportions and used shuffling (aka permutation) to build the null distribution. Below are just a few different research questions that could be addressed using a hypothesis test.

Pollsters have surveyed a sample of 200 voters ahead of an election to assess their relative support for the Republican and Democratic candidate. The observed difference in those proportions is .02. Is this consistent with the notion of evenly split support for the two candidates, or is one decidedly in the lead?

Brewers have tapped 7 barrels of beer and measured the average level of a compound related to the acidity of the beer as 610 parts per million. The acceptable level for this compound is 500 parts per million. Is this average of 610 consistent with the notion that the average of the whole batch of beer (many hundreds of barrels) is at the acceptable level of this compound?

A random sample of 40 users of a food delivery app were randomly assigned two different versions of a menu where they entered the amount of their tip: one with the tip amount in ascending order, the other in descending order. The average tip amount of those with the menu in ascending order was found to be $3.87 while the average tip of the users in the descending order group was $3.96. Could this difference in averages be explained by chance?

Although the contexts of these problems are very different, as are the types of statistics they’ve calculated, they can still be characterized as a hypothesis test by asking the following questions:

What is the null hypothesis used by the researchers?

What is the value of the observed test statistic?

How did researchers approximate the null distribution?

What was the p-value, what does it tell us and what does it not tell us?

In classical statistics there are two primary tools for assessing the role that random variability plays in the data that you have observed. The first is the confidence interval, which quantifies the amount of uncertainty in a point estimate due to the variability inherent in drawing a small random sample from a population. The second is the hypothesis test, which posits a specific model by which the data could be generated, then assesses the degree to which the observed data is consistent with that model.

The hypothesis test begins with the assertion of a null hypothesis that describes a chance mechanism for generating data. A test statistic is then selected that corresponds to that null hypothesis. From there, the sampling distribution of that statistic under the null hypothesis is approximated through a computational method (such as using permutation, as shown here) or one rooted in probability theory (such as the Central Limit Theorem). The final result of the hypothesis test procedure is the p-value, which is approximated as the proportion of the null distribution that is as or more extreme than the observed test statistic. The p-value measures the consistency between the null hypothesis and the observed test statistic and should be interpreted carefully.

A postscript on the case of Kristen Gilbert. Although the hypothesis test ruled out random chance as the reason for the spike in deaths under her watch, it didn’t rule out other potential causes for that spike. It’s possible, after all, that the nightshifts that Gilbert was working happen to be the time of day when cardiac arrests are more common. For this reason, the statistical evidence was never presented to the jury, but the jury nonetheless found her guilty based on other evidence presented in the trial.

The Ideas in Code

A hypothesis test using permutation can be implemented by introducing one new step into the process used for calculating a bootstrap interval. The key distinction is that in a hypothesis test the researcher puts forth a model for how the data could be generated. That is the role of hypothesize().

hypothesize()

A function to place before generate() in an infer pipeline where you can specify a null model under which to generate data. The one necessary argument is

- null: the null hypothesis. Options include "independence" and "point".

A typical use is a permutation test under the null hypothesis that there is no relationship between the body mass of penguins and their sex, specified with null = "independence".

- The output is the original data frame with new information appended to describe what the null hypothesis is for this data set.

- There are other forms of hypothesis tests that you will see involving a "point" null hypothesis. Those require adding additional arguments to hypothesize().

Calculating an observed statistic

Let’s say for this example you select as your test statistic a difference in means, \(\bar{x}_{\text{female}} - \bar{x}_{\text{male}}\). While you can use tools you already know, group_by() and summarize(), to calculate this statistic, you can also recycle much of the code that you’ll use to build the null distribution with infer.

Calculating the null distribution

To generate a null distribution of the kind of differences in means that you’d observe in a world where body mass had nothing to do with sex, just add the hypothesis with hypothesize() and the generation mechanism with generate().

- The output data frame has reps rows and 2 columns: one indicating the replicate and the other with the statistic (a difference in means).

visualize()

Once you have a collection of test statistics under the null hypothesis saved as null, it can be useful to visualize that approximation of the null distribution. For that, use the function visualize().

- visualize() expects a data frame of statistics.

- It is a shortcut to creating a particular type of ggplot, so like any ggplot, you can add layers to it with +.

- shade_p_value() is a function you can add to shade the part of the null distribution that corresponds to the p-value. The first argument is the observed statistic, which we’ve recorded as 100 here to see the behavior of the function. direction is an argument where you specify if you would like to shade values less than the observed value ("less"), greater than it ("greater"), or in both tails ("both") for a two-tailed p-value.
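The shaded region corresponds to a simple proportion, which can be sketched outside of infer in plain Python. This is an illustration of the idea only, not the infer implementation: the function name, the direction labels, and the toy null statistics are all hypothetical, and doubling the smaller tail is just one common convention for a two-tailed p-value.

```python
def p_value(null_stats, observed, direction="both"):
    """Proportion of null statistics that are as or more extreme than `observed`."""
    n = len(null_stats)
    left = sum(s <= observed for s in null_stats) / n    # lower-tail proportion
    right = sum(s >= observed for s in null_stats) / n   # upper-tail proportion
    if direction == "less":
        return left
    if direction == "greater":
        return right
    if direction == "both":
        # One common two-tailed convention: double the smaller tail, cap at 1.
        return min(1.0, 2 * min(left, right))
    raise ValueError(f"unknown direction: {direction!r}")


# Hypothetical null statistics, for illustration only.
null_stats = [-3, -2, -1, -1, 0, 0, 1, 1, 2, 3]

print(p_value(null_stats, 2, "greater"))
print(p_value(null_stats, 2, "less"))
print(p_value(null_stats, 2, "both"))
```

Choosing the direction before looking at the data matters: the one-sided and two-sided proportions can differ by a factor of two for the same observed statistic.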

This case study appears in Statistics in the Courtroom: United States v. Kristen Gilbert by Cobb and Gelbach, published in Statistics: A Guide to the Unknown by Peck et al.

The technical notion that motivates the use of shuffling is a slightly more general notion than independence called exchangeability. The distinction between these two related concepts is a topic in a course in probability.

FactTest: Factuality Testing in Large Language Models with Finite-Sample and Distribution-Free Guarantees

The propensity of Large Language Models (LLMs) to generate hallucinations and non-factual content undermines their reliability in high-stakes domains, where rigorous control over Type I errors (the conditional probability of incorrectly classifying hallucinations as truthful content) is essential. Despite its importance, formal verification of LLM factuality with such guarantees remains largely unexplored. In this paper, we introduce FactTest, a novel framework that statistically assesses whether an LLM can confidently provide correct answers to given questions with finite-sample and distribution-free correctness guarantees. We formulate factuality testing as a hypothesis testing problem to enforce an upper bound on Type I errors at user-specified significance levels. Notably, we prove that our framework also ensures strong Type II error control under mild conditions and can be extended to maintain its effectiveness when covariate shifts exist. Our approach is distribution-free and works for any number of human-annotated samples. It is model-agnostic and applies to any black-box or white-box LM. Extensive experiments on question-answering (QA) and multiple-choice benchmarks demonstrate that FactTest effectively detects hallucinations and improves the model’s ability to abstain from answering unknown questions, leading to an over 40% accuracy improvement.

1 Introduction

Large Language Models (LLMs) like ChatGPT and GPT-4 (Ouyang et al., 2022; OpenAI et al., 2024) have demonstrated substantial advancements in various domains such as summarization systems, search engines and virtual assistants. However, their outputs cannot be fully trusted due to their propensity to generate nonfactual and incorrect information with seemingly high confidence, a challenge often referred to as hallucination (Maynez et al., 2020b; Huang et al., 2023; Ji et al., 2023). This tendency undermines the reliability and trustworthiness of the generated content, highlighting a critical need for robust mechanisms to verify the factuality and correctness of LLM outputs.

Existing approaches to hallucination detection, such as retrieval-based methods (Thorne et al., 2018b; Gou et al., 2024; Chen et al., 2024) and training-based approaches (Zhang et al., 2023), rely either on external databases or on resource-intensive fine-tuning, both of which are often impractical or costly. Therefore, there has been growing interest in uncertainty estimation as a zero-resource alternative for hallucination detection (Varshney et al., 2023; Xiong et al., 2024), operating under the premise that hallucinations are intrinsically tied to the model’s uncertainty (Huang et al., 2023). However, none of these methods can provide theoretical guarantees for the detection or testing results, which are essential for deploying LLMs in high-stakes domains (Kumar et al., 2023) where precise control of Type I errors (incorrectly flagging a hallucination as truthful content) is needed for decision-making. For instance, incorrect medical diagnoses in healthcare or the provision of uncertain legal advice in the legal field could result in detrimental consequences.

To address these limitations, we introduce FactTest, a framework that statistically evaluates whether an LLM can reliably generate correct answers to given questions with provable correctness guarantees and teaches LLMs to abstain from answering uncertain questions. We formulate factuality testing within a hypothesis testing framework to theoretically control the Type I error while minimizing the Type II error. Leveraging the fundamental connection between Neyman-Pearson (NP) classification and statistical testing (Tong et al., 2018; Tong, 2013; Scott & Nowak, 2005), we define a score function to quantify model certainty and select an appropriate threshold based on a constructed calibration dataset to instruct LLMs to refuse uncertain questions and control the false positive rate. Furthermore, we prove that, under mild conditions, FactTest achieves strong power control. This ensures that our method not only controls Type I error but also maintains a low Type II error, thereby providing reliable factuality assessments. In addition, since statistical tests often rely on the i.i.d. assumption, which may not hold in reality, we enhance the robustness of this framework by adding an extension to accommodate covariate shifts through the estimation of density ratios and the use of rejection sampling. Our approach is model-agnostic and does not rely on specific data distribution assumptions, making it broadly applicable to any language model. Importantly, it works for any finite number of human-annotated samples, ensuring practicality and ease of implementation.

To the best of our knowledge, this study is the first to introduce statistical factuality testing in large language models, thereby facilitating safer and more reliable deployment in high-stakes applications. We evaluate the effectiveness of our proposed framework on question-answering (QA) and multiple-choice benchmarks. The results indicate that our approach offers several significant advantages: (1) it consistently outperforms base models by a substantial margin without requiring additional training or external data sources; (2) it surpasses fine-tuned baselines by a large margin while utilizing only half of the training data; and (3) it maintains superior performance on out-of-distribution testing data. Notably, the theoretical guarantees of our method remain valid even when the i.i.d. assumption is violated. We summarize the main contributions below.

We propose FactTest, a novel statistical testing framework that evaluates the factuality of LLMs while teaching them to decline uncertain questions with user-specified Type I error guarantees.

We prove that our statistical framework achieves strong power control under mild conditions, ensuring that the predictor can also maintain a low Type II error. This power analysis is directly applicable to the standard NP classification problems, not limited to this setting.

We extend our framework to accommodate covariate shifts by approximating density ratios and applying rejection sampling, thereby enhancing its robustness in real-world applications.

We demonstrate that FactTest effectively detects hallucinations while maintaining Type I error below user-specified significance levels, achieving an over 40% improvement in accuracy compared to pretrained models without any fine-tuning. Additionally, it surpasses training-based baselines by 30% using only half of the fine-tuning data.

2 Statistical Factuality Testing

In this section, we formulate the evaluation of factuality in LLMs as a statistical hypothesis testing problem and introduce our FactTest framework to overcome hallucination issues.

2.1 Problem Formulation

We consider a text generation task in which a language model \(M\) generates an answer \(M(q)\) to a question \(q\). Our goal is to statistically evaluate whether \(M\) can correctly answer \(q\), termed as \(M\) being certain of \(q\). We formulate this objective as a hypothesis testing problem with the following hypotheses:

\[ H_0: M \text{ cannot answer the question } q \text{ certainly}, \qquad H_1: M \text{ can answer the question } q \text{ certainly}. \]

For any question-answer pair \((q, a)\) with \(a\) being the correct answer for question \(q\), we apply \(M\) to generate an answer \(M(q)\). Then we classify the question-generated answer pair \((q, M(q))\) as certain if the null hypothesis \(H_0\) is rejected, i.e., \(M(q)\) aligns with \(a\); otherwise, it is deemed uncertain. Let \(P_0\) and \(P_1\) represent the distributions of uncertain and certain question-generated answer pairs \((q, M(q))\), respectively.

Given a dataset \(\{(q_1, a_1), \ldots, (q_n, a_n)\} \subset \mathcal{Q} \times \mathcal{A}\) comprising \(n\) question-answer pairs, we apply \(M\) to generate answers for all questions, resulting in the set \(\mathcal{D} = \{(q_1, M(q_1), a_1), \ldots, (q_n, M(q_n), a_n)\}\). Our goal is then to construct a predictor \(\hat{f}_\alpha : \mathcal{Q} \times \mathcal{A} \to \{0, 1\}\) that classifies a pair \((q, M(q))\) as certain (output 1) or uncertain (output 0) while ensuring that the false positive rate, or Type I error, does not exceed a pre-specified significance level \(\alpha\).
Formally, we seek \(\hat{f}_\alpha\) such that the error of predicting uncertain \((q, M(q))\) as certain is below level \(\alpha\) with probability at least \(1 - \delta\), i.e.,

\[ \mathbb{P}_{\mathcal{D}}\Big( \mathbb{P}_{(q, M(q)) \sim P_0}\big( \hat{f}_\alpha(q, M(q)) = 1 \big) > \alpha \Big) \le \delta, \tag{1} \]

where \(\delta\) denotes the allowable probability of exceeding the significance level. Note that given any question \(q\), the answer \(M(q)\) generated by \(M\) is randomized. While the distribution of \(M(q)\) is fully determined by \(q\), the realization \(M(q)\) involves additional sampling randomness independent of \(q\). By taking \((q, M(q))\) as inputs to \(\hat{f}_\alpha\), we enable the predictor to utilize information from the question \(q\), the distribution of \(M(q)\) (by asking \(M\) the same question \(q\) multiple times), and the current realization \(M(q)\) of the produced answer.

2.2 Finite-sample and Distribution-free Type I Error Control

Calibration Dataset Construction. Following the methodology of Zhang et al. (2023), we adopt a supervised identification strategy to partition the dataset \(\mathcal{D}\) into a certain subset \(\mathcal{D}_1\) and an uncertain subset \(\mathcal{D}_0\).

Specifically, for each question-generated answer pair \((q_i, M(q_i))\) in \(\mathcal{D}\), we define an indicator variable \(y_i \in \{0, 1\}\) to indicate whether \(M\) is certain about \(q_i\) such that

\[ y_i = \begin{cases} 1, & \text{if } M(q_i) \text{ aligns with } a_i, \\ 0, & \text{otherwise.} \end{cases} \]

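Based on these indicators, the dataset is divided into:

\[ \mathcal{D}_1 = \{(q_i, M(q_i)) \mid y_i = 1\}, \qquad \mathcal{D}_0 = \{(q_i, M(q_i)) \mid y_i = 0\}. \]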

We assume that \(\{(q_i, M(q_i), y_i)\}_{i=1}^{n}\) are independent and identically distributed (i.i.d.) samples from a distribution \(P\), and that the distributions of \(\mathcal{D}_0\) and \(\mathcal{D}_1\) are \(P_0\) and \(P_1\), respectively.

Certainty Predictor based on Score Function. Suppose there is a score function \(\hat{\eta} : \mathcal{Q} \times \mathcal{A} \to \mathbb{R}\) that measures the certainty. The value is expected to be large if \(M\) has the ability to provide a factual answer. The predictor \(\hat{f}_\alpha(q, M(q))\) can then be defined as:

\[ \hat{f}_\alpha(q, M(q)) = \mathbb{I}\big( \hat{\eta}(q, M(q)) > \hat{\tau}_\alpha \big), \]

where \(\mathbb{I}\) is the indicator function and \(\hat{\tau}_\alpha\) is a threshold to be determined. The task thus reduces to selecting a threshold \(\hat{\tau}_\alpha\) that satisfies the requirement in Eq. 1:

\[ \mathbb{P}_{\mathcal{D}}\Big( \mathbb{P}_{(q, M(q)) \sim P_0}\big( \hat{\eta}(q, M(q)) > \hat{\tau}_\alpha \big) > \alpha \Big) \le \delta. \]

[…] \(T_{(n_0+1)} = +\infty\). Motivated by Tong et al. (2018), the threshold \(\hat{\tau}_\alpha\) is selected based on the following probabilistic guarantee:

[…] \(k = n_0 + 1\), \(v(k)\) is defined to be 0. We then determine \(\hat{k}\) as

[…] \(\hat{\tau}_\alpha = T_{(n_0+1)} = +\infty\), causing \(\hat{f}_\alpha\) to conservatively classify all pairs \((q, M(q))\) as uncertain, thereby abstaining from answering any question. The derivation is deferred to Appendix A.

[…] \(n \in \mathbb{N}_+\), with probability at least \(1 - \delta\), the constructed classifier \(\hat{f}_\alpha\) has Type I error below \(\alpha\), i.e.,

This theorem provides a finite-sample and distribution-free guarantee of Type I error control. With the determined threshold \(\hat{\tau}_\alpha\), the predictor \(\hat{f}_\alpha(q, M(q)) = \mathbb{I}(\hat{\eta}(q, M(q)) > \hat{\tau}_\alpha)\) is formally defined. This classifier ensures that, for a given significance level \(\alpha\), the Type I error is controlled below \(\alpha\) with high probability \(1 - \delta\). Consequently, when \(\hat{\eta}(q, M(q)) > \hat{\tau}_\alpha\), we reject the null hypothesis \(H_0\) and assert that the model \(M\) can answer the question \(q\) certainly and correctly. Otherwise, the model will output an acknowledgment of uncertainty.
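The threshold selection can be sketched in Python as follows. The exact expression for \(v(k)\) is not shown in this section, so the sketch assumes the binomial tail bound of the NP umbrella algorithm in Tong et al. (2018), which the text cites as its motivation: \(v(k) = \sum_{j=k}^{n_0} \binom{n_0}{j} (1-\alpha)^j \alpha^{n_0-j}\), with \(\hat{k}\) taken as the smallest \(k\) such that \(v(k) \le \delta\). All function names are hypothetical.

```python
import math


def violation_bound(k, n0, alpha):
    """Assumed form of v(k): sum_{j=k}^{n0} C(n0, j) (1 - alpha)^j alpha^(n0 - j)."""
    if k == n0 + 1:
        return 0.0  # v(n0 + 1) is defined to be 0
    return sum(math.comb(n0, j) * (1 - alpha) ** j * alpha ** (n0 - j)
               for j in range(k, n0 + 1))


def select_threshold(uncertain_scores, alpha, delta):
    """Return (k_hat, tau_hat), where k_hat is the smallest rank k with v(k) <= delta."""
    n0 = len(uncertain_scores)
    # Order statistics T_(1) <= ... <= T_(n0), followed by T_(n0+1) = +infinity.
    ordered = sorted(uncertain_scores) + [math.inf]
    for k in range(1, n0 + 2):
        if violation_bound(k, n0, alpha) <= delta:
            return k, ordered[k - 1]


def predict_certain(score, tau_hat):
    """f_hat(q, M(q)) = 1 if the certainty score exceeds the threshold, else 0."""
    return int(score > tau_hat)


# With alpha = delta = 0.05, 58 uncertain calibration scores are too few:
# the threshold is +infinity and the predictor abstains on every question.
print(select_threshold(list(range(58)), 0.05, 0.05))
print(select_threshold(list(range(59)), 0.05, 0.05))
```

Under this assumed bound, a finite threshold requires \((1-\alpha)^{n_0} \le \delta\), which is why the example switches from abstaining everywhere to a finite threshold when the number of uncertain calibration samples goes from 58 to 59.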

2.3 Type II Error Control

The effectiveness of FactTest hinges not only on Type I error control but also on ensuring sufficient statistical power to detect true positives. We now analyze the Type II error of the constructed classifier.

Denote \(\eta(q', M(q')) = \mathbb{P}_{(q, M(q), y) \sim P}(y = 1 \mid q = q', M(q) = M(q'))\) to be the conditional probability that \(M\) answers the question \(q'\) certainly given any question \(q'\) and the generated answer \(M(q')\). Note that a question \(q\) may have multiple correct answers and \(a\) is just one realization. Therefore, \(a\), and thus \(y\), may still be random given \((q, M(q))\), implying \(\eta(q, M(q))\) may take value in \((0, 1)\).
Suppose there exists an increasing function \(H\) such that \(\|H \circ \hat{\eta} - \eta\|_\infty \le \epsilon_\eta\), where \(H \circ \hat{\eta}(q, M(q)) = H(\hat{\eta}(q, M(q)))\) is the composition of \(H\) and \(\hat{\eta}\). Note that \(\hat{k}\), and thus \(\hat{f}_\alpha\), are invariant if we replace \(\hat{\eta}\) in Section 2 by \(H \circ \hat{\eta}\). Therefore, without loss of generality, we assume \(H\) is the identity function. Denote \(F(t) = \mathbb{P}_{(q, M(q)) \sim P_0}(\hat{\eta}(q, M(q)) \le t)\) to be the CDF of \(\hat{\eta}(q, M(q))\) under \(P_0\).
For any classifier \(f\), we set \(\mathcal{R}_0(f) = \mathbb{P}_{(q, M(q)) \sim P_0}(f(q, M(q)) = 1)\) (resp. \(\mathcal{R}_1(f) = \mathbb{P}_{(q, M(q)) \sim P_1}(f(q, M(q)) = 0)\)) to be the Type I error (resp. Type II error). It follows from Theorem 1 in Tong (2013) that the Bayes optimal classifier \(f_\alpha^*\)

has the form \(f_\alpha^*(q, M(q)) = \mathbb{I}(\eta(q, M(q)) > \tau_\alpha)\) for some \(\tau_\alpha \in [0, 1]\).
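To make the two error types concrete, the following Python sketch computes empirical counterparts of \(\mathcal{R}_0\) and \(\mathcal{R}_1\) for a threshold rule \(\mathbb{I}(\text{score} > \tau)\) on labeled pairs. It is an illustration only: the function name, scores, and labels are hypothetical and do not come from the paper's experiments.

```python
def empirical_errors(scores, labels, tau):
    """Empirical Type I and Type II error of the rule 1{score > tau}.

    labels[i] is 1 for a certain pair and 0 for an uncertain pair.
    """
    preds = [int(s > tau) for s in scores]
    on_uncertain = [p for p, y in zip(preds, labels) if y == 0]
    on_certain = [p for p, y in zip(preds, labels) if y == 1]
    type_1 = sum(on_uncertain) / len(on_uncertain)              # uncertain predicted certain
    type_2 = sum(1 - p for p in on_certain) / len(on_certain)   # certain predicted uncertain
    return type_1, type_2


# Hypothetical certainty scores and labels, for illustration only.
scores = [0.1, 0.4, 0.6, 0.8, 0.3, 0.7, 0.9, 0.95]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

print(empirical_errors(scores, labels, tau=0.5))
print(empirical_errors(scores, labels, tau=0.85))
```

Raising the threshold lowers the Type I error at the cost of a higher Type II error, which is the trade-off the power analysis in this section quantifies.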

Let p y = ℙ ( q , M ( q ) , y ) ∼ P ( y = 1 ) subscript 𝑝 𝑦 subscript ℙ similar-to 𝑞 𝑀 𝑞 𝑦 𝑃 𝑦 1 p_{y}={\mathbb{P}}_{(q,M(q),y)\sim P}(y=1) italic_p start_POSTSUBSCRIPT italic_y end_POSTSUBSCRIPT = blackboard_P start_POSTSUBSCRIPT ( italic_q , italic_M ( italic_q ) , italic_y ) ∼ italic_P end_POSTSUBSCRIPT ( italic_y = 1 ) denote the marginal probability that model M 𝑀 M italic_M is certain. We define

for some constant c > 0 𝑐 0 c>0 italic_c > 0 . If we denote G α ( ϵ ) = ℙ ( q , M ( q ) ) ∼ P 0 ( | η ( q , M ( q ) ) − τ α | ≤ ϵ ) subscript 𝐺 𝛼 italic-ϵ subscript ℙ similar-to 𝑞 𝑀 𝑞 subscript 𝑃 0 𝜂 𝑞 𝑀 𝑞 subscript 𝜏 𝛼 italic-ϵ G_{\alpha}(\epsilon)={\mathbb{P}}_{(q,M(q))\sim P_{0}}(|\eta(q,M(q))-\tau_{% \alpha}|\leq\epsilon) italic_G start_POSTSUBSCRIPT italic_α end_POSTSUBSCRIPT ( italic_ϵ ) = blackboard_P start_POSTSUBSCRIPT ( italic_q , italic_M ( italic_q ) ) ∼ italic_P start_POSTSUBSCRIPT 0 end_POSTSUBSCRIPT end_POSTSUBSCRIPT ( | italic_η ( italic_q , italic_M ( italic_q ) ) - italic_τ start_POSTSUBSCRIPT italic_α end_POSTSUBSCRIPT | ≤ italic_ϵ ) to be the probability measure around the classification boundary of f α ∗ subscript superscript 𝑓 𝛼 f^{*}_{\alpha} italic_f start_POSTSUPERSCRIPT ∗ end_POSTSUPERSCRIPT start_POSTSUBSCRIPT italic_α end_POSTSUBSCRIPT , then the Type II error of the proposed algorithm can be controlled as follows.

$\tau_\alpha+\epsilon_\tau+\epsilon_\eta<1$, then with probability at least $1-2\delta$, we have

3 Extension of FactTest to Covariate Shifts

The threshold selection procedure developed in Section 2 relies on the assumption that the calibration dataset $\mathcal{D}_0=\{(q_i,M(q_i))\in\mathcal{Q}\mid y_i=0\}$ follows the target distribution $P_0$ of uncertain question-generated answer pairs. However, labeled samples from the target distribution may not always be available in practice. Instead, practitioners may use labeled data that they believe to be similar to the target distribution, which necessitates methods to handle distribution shifts. In this section, we study the case of covariate shift, where the distribution of the question-generated answer pairs in the calibration data differs from that in the target distribution, while the conditional distribution of $y$ given $(q,M(q))$ remains the same.

3.2 Type I Error Control under Covariate Shift

To extend the procedure in Section 2 to the covariate shift setting, we take an additional step that uses rejection sampling to transform the samples in $\mathcal{D}_0$ from $\tilde{P}_0$-distributed to $P_0$-distributed random variables.

In the first step, we generate $n_0$ uniform random variables $U_1,\ldots,U_{n_0}\overset{\mathrm{i.i.d.}}{\sim}\mathrm{Unif}[0,B]$ and select the indexes $\mathcal{I}=\{i\in[n_0]:U_i\leq w(q_i^{(0)},M(q_i^{(0)}))\}$. If we collect all the samples in $\mathcal{D}_0$ with indexes in $\mathcal{I}$ to form $\tilde{\mathcal{D}}_0=\{(q_i^{(0)},M(q_i^{(0)})):i\in\mathcal{I}\}\overset{\triangle}{=}\{(\tilde{q}_i,M(\tilde{q}_i)):i\in[\tilde{n}_0]\}$, then it can be shown that $\tilde{\mathcal{D}}_0\mid\mathcal{I}\overset{\mathrm{i.i.d.}}{\sim}P_0$.
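The selection step above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the weight $w$ is taken to be a precomputed nonnegative score per calibration sample that is bounded above by $B$, and the function name `rejection_resample` and the example weights are hypothetical, not taken from the paper.

```python
import numpy as np

def rejection_resample(weights, B, rng):
    """Return the indexes i with U_i <= w_i, where U_i ~ Unif[0, B] i.i.d.

    `weights` holds w(q_i, M(q_i)) for each calibration sample; it is
    assumed (not verified by the paper excerpt) to lie in [0, B].
    """
    weights = np.asarray(weights, dtype=float)
    if np.any(weights < 0) or np.any(weights > B):
        raise ValueError("weights must lie in [0, B]")
    # One uniform draw per calibration sample.
    u = rng.uniform(0.0, B, size=weights.shape[0])
    # Keep sample i when its uniform draw falls at or below its weight.
    return np.flatnonzero(u <= weights)

rng = np.random.default_rng(0)
w = np.array([0.2, 1.5, 3.0, 0.0, 2.4])  # hypothetical weights
kept = rejection_resample(w, B=3.0, rng=rng)
print(kept)  # indexes forming the resampled calibration set
```

Samples with larger weights are kept more often, so the retained subset is reweighted toward the target distribution at the cost of a smaller calibration set ($\tilde{n}_0\leq n_0$).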

$\tilde{T}_{(\tilde{n}_0+1)}=+\infty$. Then we set the threshold $\hat{\tau}_\alpha$ to be $\tilde{T}_{(\hat{k})}$, where $\hat{k}$ satisfies

Then we are able to control the Type I error as follows.

With probability at least $1-\delta$, the constructed classifier $\hat{f}_\alpha(q,M(q))=\mathbb{I}(\hat{\eta}(q,M(q))>\tilde{T}_{(\hat{k})})$ has Type I error below $\alpha$, i.e.

4 Experiments

In this section, we empirically investigate FactTest in addressing the hallucination problem of LLMs, focusing on the following questions: Q1: Can FactTest improve the accuracy and lead to more factual LLMs? Q2: Can FactTest effectively control the Type I error? Q3: Can FactTest generalize well when covariate shifts exist?

4.1 Experimental Setups

Datasets. Following R-Tuning (Zhang et al., 2023), we conduct our experiments on knowledge-extensive QA tasks, which fall into two generation tasks. More details about the datasets can be found in Appendix B.1.

Question-Answering: Given a question, the model directly predicts its answer, which is a single sentence of at most 15 tokens. We include ParaRel (Elazar et al., 2021) and HotpotQA (Yang et al., 2018). For experiments considering distribution shifts, we utilize ParaRel-OOD as the testing dataset.

Multiple-Choice: Given a question with several choices, the model chooses one option among A, B and C. We include WiCE (Kamoi et al., 2023) and FEVER (Thorne et al., 2018a).

Certainty Score Functions. We can either fit a prediction model to predict the certainty of a given question or use any off-the-shelf certainty estimation function to serve as the score function $\hat{\eta}$. In particular, in this paper we introduce three entropy-based certainty functions. Details about the score functions and equations are deferred to Appendix B.2.

Vanilla Entropy (VE): We query the model $M$ $k$ times and calculate the entropy across the $k$ answers.

where $p(M(q)_j\mid q)$ is the frequency of a predicted answer $M(q)_j$ given a question $q$.
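As a rough illustration of the vanilla entropy idea, the sketch below computes the Shannon entropy of the empirical answer frequencies over $k$ sampled answers. Several details are assumptions rather than the paper's definition: answers are grouped by exact string match, the natural logarithm is used, and no sign flip or normalization is applied to turn the entropy into a certainty score (the paper defers those details to Appendix B.2). The function name `vanilla_entropy` is hypothetical.

```python
import math
from collections import Counter

def vanilla_entropy(answers):
    """Shannon entropy (natural log) of the empirical answer distribution.

    `answers` is the list of k sampled answers for one question; identical
    strings are treated as the same answer (an assumption of this sketch).
    """
    k = len(answers)
    if k == 0:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answers)
    # p(answer | q) is estimated by the frequency of each distinct answer.
    return -sum((c / k) * math.log(c / k) for c in counts.values())

samples = ["Paris", "Paris", "Lyon", "Paris"]  # hypothetical model outputs
print(vanilla_entropy(samples))
```

An entropy of zero means all $k$ samples agree, while larger values indicate that the model's answers are spread across more distinct outputs.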

Semantic Entropy (SE): Kuhn et al. (2023) measures uncertainty in natural language generation by accounting for the probability distribution over distinct meanings rather than individual token sequences.

Kernel Language Entropy (KLE): Nikitin et al. (2024) quantifies uncertainty by using semantic similarity kernels between generated answers, allowing for a more nuanced estimation of uncertainty. Notably, this function does not apply to multiple-choice datasets and we only employ it on ParaRel and HotpotQA.

Models. In the main experiments, we focus on distribution-free settings; models that require fine-tuning would violate this assumption. We implement seven variants of FactTest, utilizing three score functions, and compare their performance against baseline pretrained models. The baseline, denoted as Pretrained, evaluates the original pretrained checkpoints on the entire test set without any modifications. In contrast, FactTest models are assessed solely on questions for which they can confidently provide answers. Among them, FactTest-ve$_k$, FactTest-se$_k$ and FactTest-kle$_k$ use vanilla entropy, semantic entropy and kernel language entropy as score functions, respectively, where $k$ is the number of outputs sampled for a given question.

To facilitate comparison with training-based methods, we randomly split our training dataset, allocating half for instruction-tuning and the remaining half to construct the calibration dataset. We choose semantic entropy as the score function and generate 15 outputs. This variant is denoted as FactTest-t. For comparative analysis, we include R-Tuning (Zhang et al., 2023) as our primary baseline, evaluating it on the subset of questions that it is willing to answer. We also consider Finetune-All and Finetune-Half, which undergo instruction-tuning using the entire and half of the original training dataset, respectively, and are evaluated on the entire test set.

To assess the applicability of our framework on black-box APIs, we further implement FactTest on GPT-4o Mini (OpenAI, 2024) and compare the resulting performances.

Metrics. For models that could only output either the answer or an unknown expression, we evaluate the questions that our model is willing to answer. The accuracy is calculated as follows:

We also include Type I error, or False Positive Rate (FPR), and Type II error, or False Negative Rate (FNR), as evaluation metrics. Notably, our method enforces an upper bound on the Type I error, that is, the probability of predicting an uncertain question as a certain one.
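The two error rates can be estimated from labeled test data as in the following sketch. It assumes binary labels where $y=1$ marks a question the model is certain about and $y=0$ an uncertain one, matching the definitions of $\mathcal{R}_0$ and $\mathcal{R}_1$ given earlier; the function name `error_rates` and the toy inputs are hypothetical.

```python
def error_rates(certain_pred, certain_true):
    """Empirical Type I (FPR) and Type II (FNR) errors.

    certain_pred[i] is 1 when the classifier declares question i certain,
    certain_true[i] is the label y_i (1 = certain, 0 = uncertain).
    """
    pairs = list(zip(certain_pred, certain_true))
    # Type I: an uncertain question (y = 0) predicted as certain.
    false_pos = sum(1 for p, y in pairs if p == 1 and y == 0)
    # Type II: a certain question (y = 1) predicted as uncertain.
    false_neg = sum(1 for p, y in pairs if p == 0 and y == 1)
    n_uncertain = sum(1 for _, y in pairs if y == 0)
    n_certain = sum(1 for _, y in pairs if y == 1)
    fpr = false_pos / n_uncertain if n_uncertain else float("nan")
    fnr = false_neg / n_certain if n_certain else float("nan")
    return fpr, fnr

fpr, fnr = error_rates([1, 0, 1, 0], [0, 0, 1, 1])  # toy example
print(fpr, fnr)
```

In this setup the FPR is the quantity that the method aims to keep below $\alpha$, while the FNR measures how often answerable questions are needlessly refused.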

Implementation. We choose OpenLLaMA-3B, OpenLLaMA-7B, OpenLLaMA-13B (Geng & Liu, 2023), and LLaMA-7B, LLaMA-13B (Touvron et al., 2023) as the base models in our experiments. The temperature is set to 0 for evaluation and 0.7 for calculating score functions. We follow Zhang et al. (2023) in using LMFlow (Diao et al., 2023) to conduct instruction tuning, training for 1 epoch with a learning rate of 2e-5. All experiments are run on 4 Nvidia H100-80GB GPUs.

4.2 Main Experimental Results

We first conduct in-distribution experiments on question-answering and multiple choice datasets ParaRel, HotpotQA, WiCE and FEVER.

Main Performance. The accuracy results are presented in Table 1, where the significance level $\alpha$ for FactTest is set to 0.05. Additional experimental results for other significance levels (e.g., $\alpha=0.10$) are provided in Appendix C.1. The results show that FactTest outperforms the pretrained models by a substantial margin in accuracy on the questions it is willing to answer, whereas the baselines respond to all questions indiscriminately. Notably, FactTest can yield an accuracy improvement of over 40% on ParaRel, WiCE and FEVER. These results demonstrate that FactTest is more reliable when making predictions and is capable of refusing to answer questions it is uncertain about.