Reliability and Validity – Definitions, Types & Examples

Published by Alvin Nicolas on August 16th, 2021. Revised on October 26, 2023.

A researcher must test the collected data before drawing any conclusions. Every research design must address reliability and validity, because together they determine the quality of the research.

What is Reliability?

Reliability refers to the consistency of the measurement. It shows how trustworthy the score of a test is. If the collected data shows the same results after being tested using various methods and sample groups, the information is reliable. Reliability is a precondition for validity, but it does not guarantee it: a reliable method can still produce results that are not valid.

Example: If you weigh yourself on a weighing scale throughout the day, you should get roughly the same result each time. These are considered reliable results obtained through repeated measures.

Example: A teacher gives students a math test and repeats it the next week with the same questions. If the students get the same scores, then the reliability of the test is high.

What is Validity?

Validity refers to the accuracy of the measurement. Validity shows how a specific test is suitable for a particular situation. If the results are accurate according to the researcher’s situation, explanation, and prediction, then the research is valid.

If the method of measuring is accurate, then it will produce accurate results. A reliable method is not necessarily valid. In contrast, if a method is not reliable, it cannot be valid.

Example: Your weighing scale shows different results each time you weigh yourself within a day, even after handling it carefully and weighing before and after meals. Your weighing machine might be malfunctioning. It means your method has low reliability. Hence you are getting inconsistent results that cannot be valid.

Example: Suppose a questionnaire checking the quality of a skincare product is distributed to one group of people and then repeated with many other groups. If you get the same responses from the various groups, the questionnaire has high reliability. That consistency is a precondition for validity, but it does not by itself show that the questionnaire measures product quality accurately.

Most of the time, validity is difficult to measure even though the process of measurement is reliable. It isn’t easy to interpret the real situation.

Example: If the weighing scale shows the same result, let’s say 70 kg each time, even though your actual weight is 55 kg, then the weighing scale is malfunctioning. Because it shows consistent results, the scale is reliable, but the results are inaccurate, so they cannot be considered valid. The method has high reliability but low validity.
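The weighing-scale example can be made concrete with a short sketch. The readings below are hypothetical numbers chosen to match the example (a scale that keeps showing about 70 kg for a person who weighs 55 kg); the spread of the readings stands in for reliability and the gap from the true weight stands in for validity.

```python
from statistics import mean, stdev

# Hypothetical data for illustration only: repeated readings from one scale.
true_weight_kg = 55.0
readings_kg = [70.0, 70.1, 69.9, 70.0, 70.0]

# A small spread means the readings are consistent (high reliability).
spread_kg = stdev(readings_kg)

# A large bias means the readings are inaccurate (low validity).
bias_kg = mean(readings_kg) - true_weight_kg

print(f"spread across readings: {spread_kg:.2f} kg")
print(f"bias against true weight: {bias_kg:.1f} kg")
```

A tiny spread together with a large bias is the pattern of a measure that is reliable but not valid.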

Internal Vs. External Validity

One of the key features of randomised designs is that they tend to have high internal validity and, when the sample is representative, can also support external validity.

Internal validity is the ability to draw a causal link between your treatment and the dependent variable of interest. It means the observed changes should be due to the experiment conducted, and no external factor should influence the variables.

Example: external factors such as age, level, height, and grade.

External validity is the ability to identify and generalise your study outcomes to the population at large. The relationship between the study’s situation and the situations outside the study is considered external validity.

Threats to Internal Validity

Threats to External Validity

How to Assess Reliability and Validity

Reliability can be measured by comparing the consistency of the procedure and its results. There are various methods to measure validity and reliability. Reliability can be measured through various statistical methods depending on the type of reliability, as explained below:

Types of Reliability

Types of Validity

As we discussed above, the reliability of the measurement alone cannot determine its validity. Validity is difficult to measure even if the method is reliable. The following types of tests are conducted for measuring validity.

How to Increase Reliability?

- Use an appropriate questionnaire to measure the competency level.

- Ensure a consistent environment for participants.

- Make the participants familiar with the criteria of assessment.

- Train the participants appropriately.

- Analyse the research items regularly to avoid poor performance.

How to Increase Validity?

Ensuring validity is not an easy job either. The following practices help to ensure validity:

- Reactivity should be minimised as a first concern.

- The Hawthorne effect should be reduced.

- The respondents should be motivated.

- The intervals between the pre-test and post-test should not be lengthy.

- Dropout rates should be kept low.

- The inter-rater reliability should be ensured.

- Control and experimental groups should be matched with each other.

How to Implement Reliability and Validity in your Thesis?

According to the experts, it is helpful to implement the concepts of reliability and validity. These concepts are widely adopted, especially in theses and dissertations. The method for implementation is given below:

Frequently Asked Questions

What are reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, measuring what the study intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test-retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.

How to improve the reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.

Reliability In Psychology Research: Definitions & Examples

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Reliability in psychology research refers to the reproducibility or consistency of measurements. Specifically, it is the degree to which a measurement instrument or procedure yields the same results on repeated trials. A measure is considered reliable if it produces consistent scores across different instances when the underlying thing being measured has not changed.

Reliability ensures that responses are consistent across times and occasions for instruments like questionnaires. Multiple forms of reliability exist, including test-retest, inter-rater, and internal consistency.

For example, if people weigh themselves during the day, they would expect to see a similar reading. Scales that measured weight differently each time would be of little use.

The same analogy could be applied to a tape measure that measures inches differently each time it is used. It would not be considered reliable.

If findings from research are replicated consistently, they are reliable. A correlation coefficient can be used to assess the degree of reliability. If a test is reliable, it should show a high positive correlation.

Of course, it is unlikely the same results will be obtained each time as participants and situations vary. Still, a strong positive correlation between the same test results indicates reliability.

Reliability is important because unreliable measures introduce random error that attenuates correlations and makes it harder to detect real relationships.
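The attenuation effect can be illustrated with the classical test theory relationship, in which the observed correlation equals the true correlation multiplied by the square root of the product of the two measures' reliabilities. The sketch below assumes that standard formula; the function name and the numbers are illustrative and not taken from the article.

```python
from math import sqrt


def attenuated_correlation(true_r, reliability_x, reliability_y):
    """Expected observed correlation under the classical attenuation formula.

    true_r: correlation between the error-free (true) scores.
    reliability_x, reliability_y: reliability coefficients of the two measures.
    """
    return true_r * sqrt(reliability_x * reliability_y)


# Illustrative values: the same true relationship seen through
# highly reliable measures and through poorly reliable ones.
with_reliable_measures = attenuated_correlation(0.50, 0.90, 0.90)
with_unreliable_measures = attenuated_correlation(0.50, 0.60, 0.60)

print(round(with_reliable_measures, 2), round(with_unreliable_measures, 2))
```

The lower the reliabilities, the further the observed correlation shrinks below the true one, which is why weak measures make real relationships harder to detect.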

Ensuring high reliability for key measures in psychology research helps boost the sensitivity, validity, and replicability of studies. Estimating and reporting reliability evidence is considered an important methodological practice.

There are two types of reliability: internal and external.

- Internal reliability refers to how consistently different items within a single test measure the same concept or construct. It ensures that a test is stable across its components.

- External reliability measures how consistently a test produces similar results over repeated administrations or under different conditions. It ensures that a test is stable over time and situations.

Some key aspects of reliability in psychology research include:

- Test-retest reliability: The consistency of scores for the same person across two or more separate administrations of the same measurement procedure over time. High test-retest reliability suggests the measure provides a stable, reproducible score.

- Interrater reliability: The level of agreement in scores on a measure between different raters or observers rating the same target. High interrater reliability suggests the ratings are objective and not overly influenced by rater subjectivity or bias.

- Internal consistency reliability: The degree to which different test items or parts of an instrument that measure the same construct yield similar results. It is analyzed statistically using Cronbach’s alpha; a high value suggests the items measure the same underlying concept.

Test-Retest Reliability

The test-retest method assesses the external consistency of a test. Examples of appropriate tests include questionnaires and psychometric tests. It measures the stability of a test over time.

A typical assessment would involve giving participants the same test on two separate occasions. If the same or similar results are obtained, then external reliability is established.

Here’s how it works:

- A test or measurement is administered to participants at one point in time.

- After a certain period, the same test is administered again to the same participants without any intervention or treatment in between.

- The scores from the two administrations are then correlated using a statistical method, often Pearson’s correlation.

- A high correlation between the scores from the two test administrations indicates good test-retest reliability, suggesting the test yields consistent results over time.
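The steps above can be sketched in a few lines. The scores are hypothetical (six participants tested on two occasions), and Pearson's correlation is written out by hand so the example has no dependencies beyond the standard library.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariation / (spread_x * spread_y)


# Hypothetical scores for the same six participants on two occasions.
time_1_scores = [12, 18, 25, 9, 30, 21]
time_2_scores = [14, 17, 27, 10, 28, 22]

r = pearson_r(time_1_scores, time_2_scores)
print(f"test-retest correlation: {r:.2f}")
```

A value close to 1 would suggest that participants kept roughly the same rank order and relative spacing across the two administrations.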

This method is especially useful for tests that measure stable traits or characteristics that aren’t expected to change over short periods.

The disadvantage of the test-retest method is that it takes a long time for results to be obtained. The reliability can be influenced by the time interval between tests and any events that might affect participants’ responses during this interval.

Beck et al. (1996) studied the responses of 26 outpatients in two separate therapy sessions one week apart. They found a correlation of .93, demonstrating high test-retest reliability of the depression inventory.

This example shows why reliability in psychological research is necessary: without reliable tests, some individuals may not be successfully diagnosed with disorders such as depression and consequently would not be given appropriate therapy.

The timing of the test is important; if the duration is too brief, then participants may recall information from the first test, which could bias the results.

Alternatively, if the duration is too long, it is feasible that the participants could have changed in some important way which could also bias the results.

Inter-rater reliability, by contrast, refers to the degree to which different raters give consistent estimates of the same behavior. It can be used for interviews.

Inter-Rater Reliability

Inter-rater reliability, often termed inter-observer reliability, refers to the extent to which different raters or evaluators agree in assessing a particular phenomenon, behavior, or characteristic. It’s a measure of consistency and agreement between individuals scoring or evaluating the same items or behaviors.

High inter-rater reliability indicates that the findings or measurements are consistent across different raters, suggesting the results are not due to random chance or subjective biases of individual raters.

Statistical measures, such as Cohen’s Kappa or the Intraclass Correlation Coefficient (ICC), are often employed to quantify the level of agreement between raters, helping to ensure that findings are objective and reproducible.
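As a rough illustration of one of these statistics, the sketch below computes Cohen's kappa for two raters who each assign one category per observation. It assumes the standard definition (observed agreement corrected for the agreement expected by chance); the ratings are made up for the example.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters coding the same observations.

    Undefined (division by zero) when chance agreement equals 1, i.e. when
    both raters use a single identical category for every observation.
    """
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    # Chance agreement: probability both raters pick the same category
    # if each rated independently at their own base rates.
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical codes from two observers for ten observation intervals.
observer_1 = ["push", "none", "push", "none", "push",
              "none", "none", "push", "none", "none"]
observer_2 = ["push", "none", "push", "none", "none",
              "none", "none", "push", "none", "push"]

kappa = cohens_kappa(observer_1, observer_2)
print(f"kappa: {kappa:.2f}")
```

Kappa is lower than the raw proportion of agreement because some agreement would occur by chance alone.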

Ensuring high inter-rater reliability is essential, especially in studies involving subjective judgment or observations, as it provides confidence that the findings are replicable and not heavily influenced by individual rater biases.

Note it can also be called inter-observer reliability when referring to observational research. Here, researchers observe the same behavior independently (to avoid bias) and compare their data. If the data is similar, then it is reliable.

Where observer scores do not significantly correlate, reliability can be improved by:

- Training observers in the observation techniques and ensuring everyone agrees with them.

- Ensuring behavior categories have been operationalized. This means that they have been objectively defined.

For example, if two researchers are observing ‘aggressive behavior’ of children at nursery they would both have their own subjective opinion regarding what aggression comprises.

In this scenario, they would be unlikely to record aggressive behavior the same, and the data would be unreliable.

However, if they were to operationalize the behavior category of aggression, this would be more objective and make it easier to identify when a specific behavior occurs.

For example, while “aggressive behavior” is subjective and not operationalized, “pushing” is objective and operationalized. Thus, researchers could count how many times children push each other over a certain duration of time.

Internal Consistency Reliability

Internal consistency reliability refers to how well different items on a test or survey that are intended to measure the same construct produce similar scores.

For example, a questionnaire measuring depression may have multiple questions tapping issues like sadness, changes in sleep and appetite, fatigue, and loss of interest. The assumption is that people’s responses across these different symptom items should be fairly consistent.

Cronbach’s alpha is a common statistic used to quantify internal consistency reliability. It is based on the number of items and the average inter-item correlation among them. Values typically range from 0 to 1, with higher values indicating greater internal consistency. A good rule of thumb is that alpha should generally be above .70 to suggest adequate reliability.

An alpha of .90 for a depression questionnaire, for example, means there is a high average correlation between respondents’ scores on the different symptom items.

This suggests all the items are measuring the same underlying construct (depression) in a consistent manner. It taps the unidimensionality of the scale – evidence it is measuring one thing.

If some items were unrelated to others, the average inter-item correlations would be lower, resulting in a lower alpha. This would indicate the presence of multiple dimensions in the scale, rather than a unified single concept.
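A minimal sketch of the calculation follows. It assumes the commonly used variance-based form of Cronbach's alpha, which compares the sum of the individual item variances with the variance of respondents' total scores; the three items and five respondents are hypothetical.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha from per-item score lists.

    item_scores: one list per item, each holding one score per respondent
    (all lists in the same respondent order).
    """
    k = len(item_scores)
    sum_of_item_variances = sum(variance(item) for item in item_scores)
    # Total score per respondent, summing across items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    return (k / (k - 1)) * (1 - sum_of_item_variances / variance(totals))


# Hypothetical responses: three symptom items, five respondents.
items = [
    [1, 2, 3, 4, 5],
    [2, 2, 3, 5, 5],
    [1, 3, 3, 4, 4],
]

alpha = cronbach_alpha(items)
print(f"alpha: {alpha:.2f}")
```

When items rise and fall together across respondents, the variance of the totals is much larger than the sum of the item variances, and alpha moves toward 1.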

So, in summary, high internal consistency reliability evidenced through high Cronbach’s alpha provides support for the fact that various test items successfully tap into the same latent variable the researcher intends to measure. It suggests the items meaningfully cohere together to reliably measure that construct.

Split-Half Method

The split-half method assesses the internal consistency of a test, such as psychometric tests and questionnaires.

It measures the extent to which all parts of the test contribute equally to what is being measured.

The split-half approach provides another method of quantifying internal consistency by taking advantage of the natural variation when a single test is divided in half.

It’s somewhat cumbersome to implement but avoids limitations associated with Cronbach’s alpha. However, alpha remains much more widely used in practice due to its relative ease of calculation.

- A test or questionnaire is split into two halves, typically by separating even-numbered items from odd-numbered items, or first-half items vs. second-half.

- Each half is scored separately, and the scores are correlated using a statistical method, often Pearson’s correlation.

- The correlation between the two halves gives an indication of the test’s reliability. A higher correlation suggests better reliability.

- To adjust for the test’s shortened length (because we’ve split it in half), the Spearman-Brown prophecy formula is often applied to estimate the reliability of the full test based on the split-half reliability.
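The procedure above can be sketched as follows, using an odd/even item split, Pearson's correlation between the half scores, and the Spearman-Brown formula for a test of doubled length, 2r / (1 + r). The response data and function names are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariation / (spread_x * spread_y)


def split_half_reliability(responses):
    """Return (half-test correlation, Spearman-Brown corrected estimate).

    responses: one list of item scores per respondent.
    """
    odd_half = [sum(r[0::2]) for r in responses]   # items 1, 3, 5, ...
    even_half = [sum(r[1::2]) for r in responses]  # items 2, 4, 6, ...
    r_half = pearson_r(odd_half, even_half)
    r_full = 2 * r_half / (1 + r_half)  # Spearman-Brown correction
    return r_half, r_full


# Hypothetical data: five respondents answering a four-item scale.
responses = [
    [1, 2, 1, 2],
    [2, 2, 3, 3],
    [3, 4, 3, 3],
    [4, 4, 5, 4],
    [5, 5, 4, 5],
]

r_half, r_full = split_half_reliability(responses)
print(f"half-test r: {r_half:.2f}, corrected: {r_full:.2f}")
```

The corrected estimate is higher than the raw half-test correlation because a full-length test is expected to be more reliable than either of its halves.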

The reliability of a test could be improved by using this method. For example, any items on separate halves of a test with a low correlation (e.g., r = .25) should either be removed or rewritten.

The split-half method is a quick and easy way to establish reliability. However, it can only be effective with large questionnaires in which all questions measure the same construct. This means it would not be appropriate for tests that measure different constructs.

For example, the Minnesota Multiphasic Personality Inventory has subscales measuring different behaviors, such as depression, schizophrenia, and social introversion. Therefore, the split-half method would not be an appropriate method to assess reliability for this personality test.

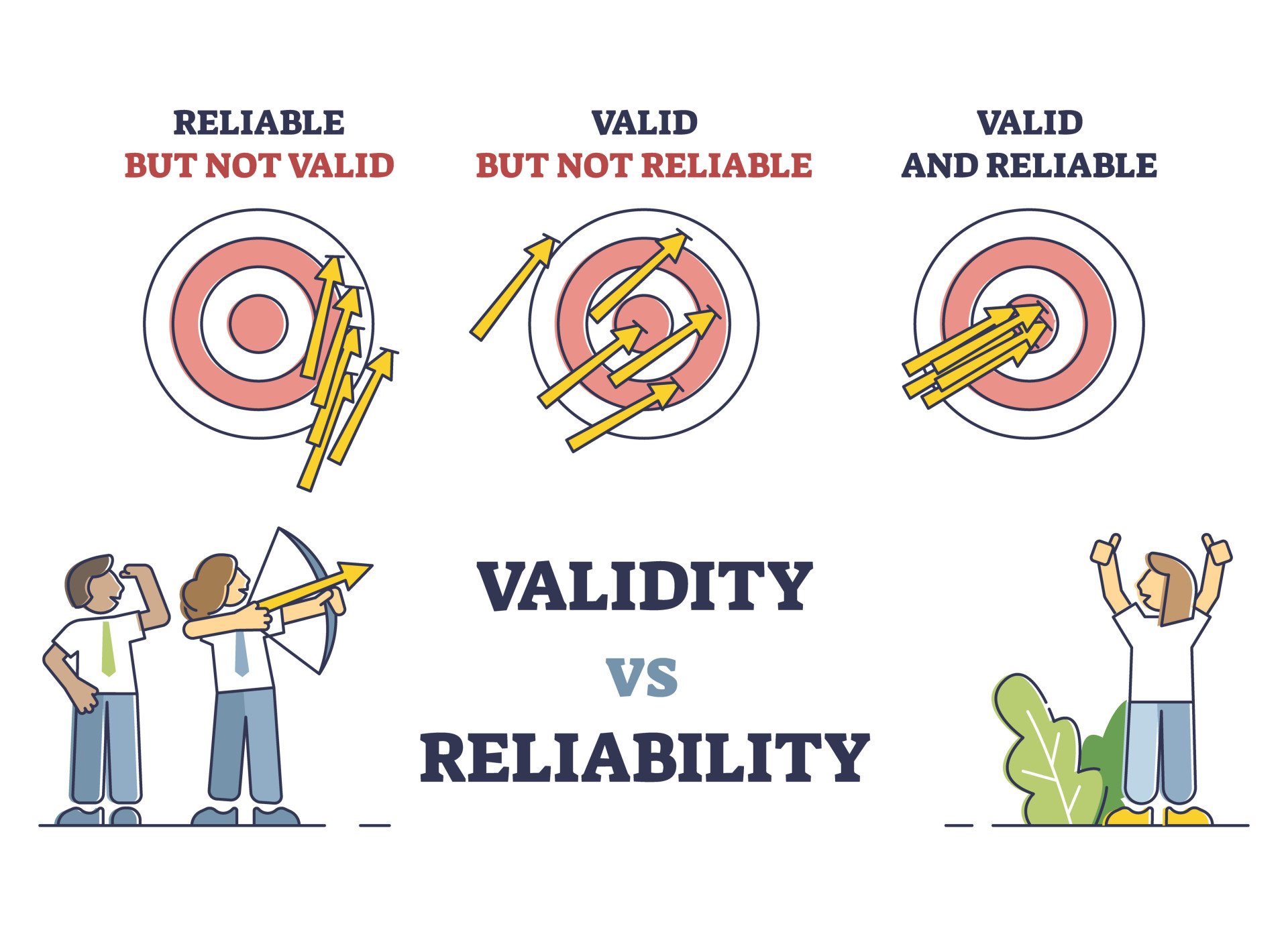

Validity vs. Reliability In Psychology

In psychology, validity and reliability are fundamental concepts that assess the quality of measurements.

- Validity refers to the degree to which a measure accurately assesses the specific concept, trait, or construct that it claims to be assessing. It refers to the truthfulness of the measure.

- Reliability refers to the overall consistency, stability, and repeatability of a measurement. It is concerned with how much random error might be distorting scores or introducing unwanted “noise” into the data.

A key difference is that validity refers to what’s being measured, while reliability refers to how consistently it’s being measured.

An unreliable measure cannot be truly valid because if a measure gives inconsistent, unpredictable scores, it clearly isn’t measuring the trait or quality it aims to measure in a truthful, systematic manner. Establishing reliability provides the foundation for determining the measure’s validity.

A pivotal understanding is that reliability is a necessary but not sufficient condition for validity.

It means a test can be reliable, consistently producing the same results, without being valid, or accurately measuring the intended attribute.

However, a valid test, one that truly measures what it purports to, must be reliable. In the pursuit of rigorous psychological research, both validity and reliability are indispensable.

Ideally, researchers strive for high scores on both: validity to make sure you’re measuring the correct construct, and reliability to make sure you’re measuring it consistently and precisely. The two qualities are distinct, but both are crucial elements of strong measurement procedures.

Beck, A. T., Steer, R. A., & Brown, G. K. (1996). Manual for the Beck Depression Inventory. San Antonio, TX: The Psychological Corporation.

Clifton, J. D. W. (2020). Managing validity versus reliability trade-offs in scale-building decisions. Psychological Methods, 25 (3), 259–270. https://doi.org/10.1037/met0000236

Guttman, L. (1945). A basis for analyzing test-retest reliability. Psychometrika, 10 (4), 255–282. https://doi.org/10.1007/BF02288892

Hathaway, S. R., & McKinley, J. C. (1943). Manual for the Minnesota Multiphasic Personality Inventory . New York: Psychological Corporation.

Jannarone, R. J., Macera, C. A., & Garrison, C. Z. (1987). Evaluating interrater agreement through “case-control” sampling. Biometrics, 43 (2), 433–437. https://doi.org/10.2307/2531825

LeBreton, J. M., & Senter, J. L. (2008). Answers to 20 questions about interrater reliability and interrater agreement. Organizational Research Methods, 11 (4), 815–852. https://doi.org/10.1177/1094428106296642

Watkins, M. W., & Pacheco, M. (2000). Interobserver agreement in behavioral research: Importance and calculation. Journal of Behavioral Education, 10 , 205–212
