Research Gap – Types, Examples and How to Identify

Research Gap

Definition:

A research gap is an area or topic within a field of study that has not yet been researched extensively or has not been explored at all. It is a question, problem, or issue that previous research has not addressed or resolved.

How to Identify Research Gap

Identifying a research gap is an essential step in conducting research that adds value and contributes to the existing body of knowledge. It requires critical thinking, creativity, and a thorough understanding of the existing literature. It is an iterative process that may require revisiting and refining your research questions and ideas multiple times.

Here are some steps that can help you identify a research gap:

- Review existing literature: Conduct a thorough review of the existing literature in your research area. This will help you identify what has already been studied and what gaps still exist.

- Identify a research problem: Identify a specific research problem or question that you want to address.

- Analyze existing research: Analyze the existing research related to your research problem. This will help you identify areas that have not been studied, inconsistencies in the findings, or limitations of the previous research.

- Brainstorm potential research ideas: Based on your analysis, brainstorm potential research ideas that address the identified gaps.

- Consult with experts: Consult with experts in your research area to get their opinions on potential research ideas and to identify any additional gaps that you may have missed.

- Refine research questions: Refine your research questions and hypotheses based on the identified gaps and potential research ideas.

- Develop a research proposal: Develop a research proposal that outlines your research questions, objectives, and methods to address the identified research gap.

Types of Research Gap

There are different types of research gaps that can be identified, and each type is associated with a specific situation or problem. Here are the main types of research gaps and their explanations:

Theoretical Gap

This type of research gap refers to a lack of theoretical understanding or knowledge in a particular area. It can occur when there is a discrepancy between existing theories and empirical evidence or when there is no theory that can explain a particular phenomenon. Identifying theoretical gaps can lead to the development of new theories or the refinement of existing ones.

Empirical Gap

An empirical gap occurs when there is a lack of empirical evidence or data in a particular area. It can happen when there is a lack of research on a specific topic or when existing research is inadequate or inconclusive. Identifying empirical gaps can lead to the development of new research studies to collect data or the refinement of existing research methods to improve the quality of data collected.

Methodological Gap

This type of research gap refers to a lack of appropriate research methods or techniques to answer a research question. It can occur when existing methods are inadequate, outdated, or inappropriate for the research question. Identifying methodological gaps can lead to the development of new research methods or the modification of existing ones to better address the research question.

Practical Gap

A practical gap occurs when there is a lack of practical applications or implementation of research findings. It can occur when research findings are not implemented due to financial, political, or social constraints. Identifying practical gaps can lead to the development of strategies for the effective implementation of research findings in practice.

Knowledge Gap

This type of research gap occurs when there is a lack of knowledge or information on a particular topic. It can happen when a new area of research is emerging, or when research is conducted in a different context or population. Identifying knowledge gaps can lead to the development of new research studies or the extension of existing research to fill the gap.

Examples of Research Gap

Here are some examples of research gaps that researchers might identify:

- Theoretical Gap Example: In the field of psychology, there might be a theoretical gap in understanding the relationship between social media use and mental health. Although there is existing research on the topic, there might be a lack of consensus on the mechanisms that link social media use to mental health outcomes.

- Empirical Gap Example: In the field of environmental science, there might be an empirical gap related to the lack of data on the long-term effects of climate change on biodiversity in specific regions. Although there might be some studies on the topic, long-term data on specific species or ecosystems might be missing.

- Methodological Gap Example: In the field of education, there might be a methodological gap related to the lack of appropriate research methods to assess the impact of online learning on student outcomes. Although there might be some studies on the topic, existing research methods might not be appropriate to assess the complex relationships between online learning and student outcomes.

- Practical Gap Example: In the field of healthcare, there might be a practical gap related to the lack of effective strategies to implement evidence-based practices in clinical settings. Although there might be existing research on the effectiveness of certain practices, they might not be implemented in practice due to various barriers, such as financial constraints or lack of resources.

- Knowledge Gap Example: In the field of anthropology, there might be a knowledge gap related to the lack of understanding of the cultural practices of indigenous communities in certain regions. Although there might be some research on the topic, there might be a lack of knowledge about specific cultural practices or beliefs that are unique to those communities.

Examples of research gaps in a literature review, thesis, or research paper might be:

- Literature review: A literature review on the topic of machine learning and healthcare might identify a research gap in the lack of studies that investigate the use of machine learning for early detection of rare diseases.

- Thesis: A thesis on the topic of cybersecurity might identify a research gap in the lack of studies that investigate the effectiveness of artificial intelligence in detecting and preventing cyber attacks.

- Research paper: A research paper on the topic of natural language processing might identify a research gap in the lack of studies that investigate the use of natural language processing techniques for sentiment analysis in non-English languages.

How to Write Research Gap

The following steps can help you write about research gaps in your paper and clearly articulate the contribution that your study will make to the existing body of knowledge:

- Identify the research question: Before writing about research gaps, you need to identify your research question or problem. This will help you to understand the scope of your research and identify areas where additional research is needed.

- Review the literature: Conduct a thorough review of the literature related to your research question. This will help you to identify the current state of knowledge in the field and the gaps that exist.

- Identify the research gap: Based on your review of the literature, identify the specific research gap that your study will address. This could be a theoretical, empirical, methodological, practical, or knowledge gap.

- Provide evidence: Provide evidence to support your claim that the research gap exists. This could include a summary of the existing literature, a discussion of the limitations of previous studies, or an analysis of the current state of knowledge in the field.

- Explain the importance: Explain why it is important to fill the research gap. This could include a discussion of the potential implications of filling the gap, the significance of the research for the field, or the potential benefits to society.

- State your research objectives: State your research objectives, which should be aligned with the research gap you have identified. This will help you to clearly articulate the purpose of your study and how it will address the research gap.

Importance of Research Gap

The importance of research gaps can be summarized as follows:

- Advancing knowledge: Identifying research gaps is crucial for advancing knowledge in a particular field. By identifying areas where additional research is needed, researchers can fill gaps in the existing body of knowledge and contribute to the development of new theories and practices.

- Guiding research: Research gaps can guide researchers in designing studies that fill those gaps. By identifying research gaps, researchers can develop research questions and objectives that are aligned with the needs of the field and contribute to the development of new knowledge.

- Enhancing research quality: By identifying research gaps, researchers can avoid duplicating previous research and instead focus on developing innovative research that fills gaps in the existing body of knowledge. This can lead to more impactful research and higher-quality research outputs.

- Informing policy and practice: Research gaps can inform policy and practice by highlighting areas where additional research is needed to inform decision-making. By filling research gaps, researchers can provide evidence-based recommendations that have the potential to improve policy and practice in a particular field.

Applications of Research Gap

Here are some potential applications of research gaps:

- Informing research priorities: Research gaps can help guide research funding agencies and researchers to prioritize research areas that require more attention and resources.

- Identifying practical implications: Identifying gaps in knowledge can help identify practical applications of research that are still unexplored or underdeveloped.

- Stimulating innovation: Research gaps can encourage innovation and the development of new approaches or methodologies to address unexplored areas.

- Improving policy-making: Research gaps can inform policy-making decisions by highlighting areas where more research is needed to make informed policy decisions.

- Enhancing academic discourse: Research gaps can lead to new and constructive debates and discussions within academic communities, leading to more robust and comprehensive research.

Advantages of Research Gap

Here are some of the advantages of identifying research gaps:

- Identifies new research opportunities: Identifying research gaps can help researchers identify areas that require further exploration, which can lead to new research opportunities.

- Improves the quality of research: By identifying gaps in current research, researchers can focus their efforts on addressing unanswered questions, which can improve the overall quality of research.

- Enhances the relevance of research: Research that addresses existing gaps can have significant implications for the development of theories, policies, and practices, and can therefore increase the relevance and impact of research.

- Helps avoid duplication of effort: Identifying existing research can help researchers avoid duplicating efforts, saving time and resources.

- Helps to refine research questions: Research gaps can help researchers refine their research questions, making them more focused and relevant to the needs of the field.

- Promotes collaboration: By identifying areas of research that require further investigation, researchers can collaborate with others to conduct research that addresses these gaps, which can lead to more comprehensive and impactful research outcomes.

Disadvantages of Research Gap

While research gaps can be advantageous, there are also some potential disadvantages that should be considered:

- Difficulty in identifying gaps: Identifying gaps in existing research can be challenging, particularly in fields where there is a large volume of research or where research findings are scattered across different disciplines.

- Lack of funding: Addressing research gaps may require significant resources, and researchers may struggle to secure funding for their work if it is perceived as too risky or uncertain.

- Time-consuming: Conducting research to address gaps can be time-consuming, particularly if the research involves collecting new data or developing new methods.

- Risk of oversimplification: Addressing research gaps may require researchers to simplify complex problems, which can lead to oversimplification and a failure to capture the complexity of the issues.

- Bias: Identifying research gaps can be influenced by researchers’ personal biases or perspectives, which can lead to a skewed understanding of the field.

- Potential for disagreement: Identifying research gaps can be subjective, and different researchers may have different views on what constitutes a gap in the field, leading to disagreements and debate.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Bridging the gap between research, policy, and practice: Lessons learned from academic–public partnerships in the CTSA network

Amytis Towfighi, Allison Zumberge Orechwa, Tomás J Aragón, Marc Atkins, Arleen F Brown, Olveen Carrasquillo, Savanna Carson, Paula Fleisher, Erika Gustafson, Deborah K Herman, Moira Inkelas, Daniella Meeker, Doriane C Miller, Rachelle Paul-Brutus, Michael B Potter, Sarah S Ritner, Brendaly Rodriguez, Anne Skinner, Hal F Yee Jr.

Address for Correspondence: A. Towfighi, MD, 1100 N State Street, A4E, Los Angeles, CA, 90033, USA. Email: [email protected]

Amytis Towfighi is Chair and Allison Zumberge Orechwa is Vice-Chair.

Sarah S. Rittner's name has been corrected. Additionally, a note stating the first and second authors' roles has been added. A corrigendum detailing these changes has also been published (doi: 10.1017/cts.2020.495 ).

Received 2019 Nov 5; Revised 2020 Mar 4; Accepted 2020 Mar 4; Collection date 2020 Jun.

This is an Open Access article, distributed under the terms of the Creative Commons Attribution licence ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted re-use, distribution, and reproduction in any medium, provided the original work is properly cited.

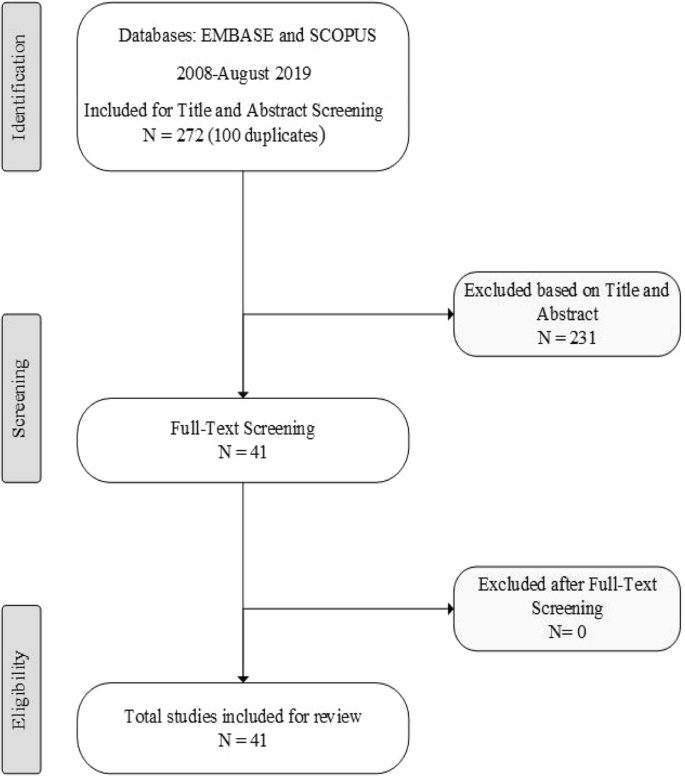

A primary barrier to translation of clinical research discoveries into care delivery and population health is the lack of sustainable infrastructure bringing researchers, policymakers, practitioners, and communities together to reduce silos in knowledge and action. As the National Institutes of Health’s (NIH) mechanism to advance translational research, Clinical and Translational Science Award (CTSA) awardees are uniquely positioned to bridge this gap. Delivering on this promise requires sustained collaboration and alignment between research institutions and public health and healthcare programs and services. We describe the collaboration of seven CTSA hubs with city, county, and state healthcare and public health organizations striving to realize this vision together. Partnership representatives convened monthly to identify key components, common and unique themes, and barriers in academic–public collaborations. All partnerships aligned the activities of the CTSA programs with the needs of the city/county/state partners by sharing resources, responding to real-time policy questions and training needs, promoting best practices, and advancing community-engaged research and dissemination and implementation science to narrow the knowledge-to-practice gap. Barriers included competing priorities, differing timelines, bureaucratic hurdles, and unstable funding. Academic–public health/health system partnerships represent a unique and underutilized model with potential to enhance community and population health.

Keywords: Translational research, policy-relevant research, implementation science, community engagement, public health

Introduction

The translation of research discoveries from “bench to bedside” and into improved health is slow and inefficient [ 1 ]. The attempt to bridge science, policy, and practice has been described as a “valley of death,” reflecting few successful enduring outcomes [ 2 ]. Federal investment in basic science and efficacy research dwarfs the investment in health quality, dissemination, and outcomes research [ 3 ]. Although social determinants of health account for approximately 60% of health outcomes [ 4 ], the United States spends a significantly lower percentage of its gross domestic product (GDP) on social services as compared to similar countries with better health outcomes [ 5 ], and only 5% of U.S. national health expenditures are allocated to population-wide approaches to health promotion [ 6 ]. Widespread adoption of evidence into policy and practice is hampered when academic institutions undertake science in controlled settings and conditions. Additionally, evidence-based practices resulting from academic studies often result in limited dissemination even within academic circles. Yet, public agencies, including safety-net healthcare systems and departments of public health, must respond to and implement evidence-based policies and health promotion services for populations facing higher burdens of health and healthcare disparities.

More researchers are turning to dissemination and implementation science (D&I) methods to more effectively bridge the research-to-practice gap [ 7 ]. Yet, despite a century of empirical research to advance the translation of research to practice, considerable barriers remain, especially for advancing public health policy and practice [ 8 ].

A primary challenge to addressing the research-policy-practice gap is the lack of sustainable infrastructure bringing researchers, policymakers, practitioners, and communities together to: (1) align the research enterprise with public and population health priorities; (2) bridge healthcare, public health, mental health, and related sectors; (3) engage health systems in research; and (4) develop innovative solutions for health systems. Without a formal mechanism to effectively engage the community, academicians, and public health and healthcare agencies, research fails to address the need among most public health and healthcare agencies to increase the quality of services with existing resources.

Institutions with Clinical and Translational Science Awards (CTSAs) are uniquely positioned to bridge this gap and contribute to care delivery, translation of research into interventions that improve the health of communities, and public health innovation. In 2006, the National Institutes of Health (NIH) launched the CTSA Program to support a national network of medical research institutions, or “hubs,” that provide infrastructure at their local universities and other affiliated academic partners to advance clinical and translational research and population health. Hubs support research across disciplines and promote team-based science closely integrated with patients and communities. Their education and training programs aim to create the next generation of translational scientists who are “boundary crossers” and “systems thinkers” [ 9 ]. Through their collaboration with communities, hubs are uniquely situated to identify local health priorities, as well as the resources and expertise to catalyze research in those areas. The CTSA network holds great promise for bridging the research-policy-practice gap.

A number of CTSA hubs have a major emphasis on partnering with city, county, and state health organizations to drive innovations in clinical care and translate research into practical interventions that improve community and population health. We will describe examples from seven CTSA hubs in four cities – Los Angeles, Chicago, Miami, and San Francisco – that have activated their resources toward research, effective service delivery, policy development, implementation, and program evaluation.

Synergy Paper Collaboration

With support from the CTSA Coordinating Center through a “Synergy paper” mechanism, representatives from the seven CTSA hubs and public health/health system partners participated in monthly teleconferences to collaborate on developing a manuscript on this shared topic. The earlier teleconferences included a brief overview by participants of their existing academic–public health/health system partnerships and discussions on shared experiences, lessons learned, and future directions. This led to more in-depth conversations, addressing common themes and both mutual and unique barriers to achieving goals. As the linkage to respective health systems was crucial to this evaluation, authors from each CTSA hub collaborated closely with key public health and health system representatives and received written comments and feedback to integrate into the manuscript. After the elicitation and information sharing processes, members categorized critical factors, challenges, and opportunities for improvement and strategized on recommendations. As a group, members summarized activities and assessed similarities.

CTSA-Public Health System and Health Department Partnerships

The areas of focus spanned the translational spectrum from creation of evidence-based guidelines (T2) to translation to communities (T4) (Table 1). Focus areas included direct research support, program evaluation, implementation research, infrastructure and expertise in data sharing, analytics, and health information technology, community needs assessments, educating or conducting interventions with community health workers (CHWs), community professional development, dissemination science, and policy setting.

Table 1. Partnership activities by city and translational stage

Chicago

Chicago is the third largest city in the United States, with a population of 2.7 million. Approximately 50% of the population is non-white, with one in five people born outside of the United States and 36% speaking a language other than English at home. Twenty percent of the people in Chicago are living in poverty, which includes one in three children. The three CTSA programs in Chicago, at Northwestern University, the University of Chicago, and the University of Illinois at Chicago, formed a formal collaboration over a decade ago to advance community-engaged research across the Chicagoland region. This collaboration, the Chicago Consortium for Community Engagement (C3), is composed of representatives from the community engagement teams of each CTSA, the Chicago Department of Public Health (CDPH), and AllianceChicago, a nonprofit that provides research support to over 60 Federally Qualified Health Centers throughout Chicago and nationally.

In the city of Chicago, there is up to a 17-year gap in life expectancy between community areas that is closely correlated with economic status and race. The CDPH joined C3 in 2016, concurrent with the release of Healthy Chicago 2.0, a citywide, 4-year strategic plan to promote health equity for Chicago’s more than 2.5 million diverse residents [ 10 ]. The report is a blueprint for establishing and implementing policies and services that prioritize residents and communities with the greatest need. CDPH and the C3 recognized that the success of Healthy Chicago 2.0 would depend, in part, on strengthening the relationship between communities and academic institutions to advance a public health research agenda.

Activities of the C3 include (1) facilitating and supporting university-based research and evaluation of CDPH-sponsored and community-based programs (four to date); (2) jointly developing mechanisms to facilitate dissemination of research opportunities and findings to community audiences; (3) aligning Clinical and Translational Science Institute (CTSI) seed funding opportunities with Healthy Chicago 2.0 priority areas; (4) facilitating collaborations with community-based organizations and community health centers; (5) collaboratively developing and delivering capacity-building workshops on community-engaged research and dissemination strategies; and (6) improving community partner and member understanding of and interest in research. Most notably, the partnership resulted in a new CDPH Office of Research and Evaluation whose lead staff position is jointly funded by the three Chicago CTSA hubs. The staff lead is currently serving on 11 CTSI research projects and center advisory boards.

The C3 meetings allow for discussion of data analytics related to the Chicago Health Atlas, which provides public health data for the city of Chicago and aggregated community area data based on Healthy Chicago 2.0 indicators. This provides a unique opportunity to consider social determinants of health by, for example, promoting research examining medical center electronic medical record data in relation to Chicago community-level data at each CTSA program. Moreover, ongoing involvement of CDPH leadership in these discussions provides an opportunity to promote research that will inform Healthy Chicago 2025, the blueprint for Chicago healthcare policy and practices. Examples include recent discussions with CDPH epidemiologists to add questions regarding attitudes about research participation to the Chicago Health Survey; plans for the Chicago-based rollout of the NIH All of Us research initiative; and local efforts by the three Chicago CTSA programs to drive broad participation in a new local multi-institutional research portal. Lastly, the collaboration includes discussions with representatives from the Alliance for Health Equity, a collaborative of over 30 nonprofit hospitals, health departments, and community organizations that completed a collaborative Community Health Needs Assessment for Chicago and Suburban Cook County to allow partners to collectively identify strategic priorities.

Los Angeles

Los Angeles is the most populous county in the nation, with 10 million residents, and more people live in Los Angeles County (LAC) than in 42 states. Three quarters of the county’s residents are non-white, more than 30% of residents were born outside the United States, nearly one in five is below the federal poverty line, approximately one in five lacks health insurance, and many speak a language other than English at home. The Los Angeles County Department of Health Services (LAC-DHS), the second-largest municipal health system in the United States, provides care to 700,000 patients annually through 4 hospitals, 19 comprehensive ambulatory care centers, and a network of community clinics. Many physicians serving the DHS facilities are also faculty members at the University of Southern California (USC) and University of California Los Angeles (UCLA), and DHS hospitals are training sites for physicians at USC and UCLA. The leadership of both the UCLA and USC CTSA hubs works in tandem with the DHS Chief Medical Officer to identify areas of intersection between academic research and the health system.

The parties invest resources in pilot funding for these areas of mutual interest and into two DHS-wide service cores – implementation science and clinical research informatics. Working closely with the DHS Research Oversight Board on policy and procedure development, the DHS Informatics and Analytics Core established new research informatics infrastructure, serving a county-wide clinical data warehouse and supporting 23 research pilot projects to date. The Innovation and Implementation Core facilitates multidisciplinary team science, deploys research methods that are feasible and acceptable in a safety-net health system, supports bidirectional mentoring and training, and develops new academic and public health leaders who can leverage the strengths of both systems. To date, the 18 projects supported by the Innovation and Implementation Core have affected the care provided by over 270 clinicians and outcomes of over 80,000 patients. An exemplary project supported by both cores is a teleretinal screening program that increased diabetic retinopathy screening rates from 41% to 60% and decreased ophthalmology visit wait times from 158 to 17 days [ 11 ]. To incubate and advance such multidisciplinary projects, the USC/UCLA/DHS partnership has created an intramural pilot funding program for projects that test interventions to enhance quality, efficiency, and patient-centeredness of care provided by LAC-DHS. Proposals are evaluated on these criteria, as well as promise for addressing translational gaps in healthcare delivery and health disparities, alignment with delivery system goals, and system-wide scalability. Six pilot grants have been awarded since 2016, addressing topics such as substance use disorders in the county jail, antimicrobial prophylaxis after surgery, and occupational therapy interventions for diabetes.

The Healthy Aging Initiative is an example of a collaborative effort between the UCLA and SC CTSAs, LAC-DHS, LAC Department of Public Health (LAC-DPH), the City of Los Angeles Department on Aging, California State University, and diverse community stakeholders. The initiative aims to support sustainable change in communities to allow middle-aged and older adults to stay healthy, live independently and safely, with timely, appropriate access to quality health care, social support, and services.

In addition, the Community Engagement cores at both Los Angeles (LA) hubs partner with DHS, DPH, and other LA County health departments in broad-ranging community-facing activities, including community health worker training and outreach, research education workshops based on community priorities, and peer navigation interventions.

Miami

Home to over 6 million people, the South Florida region is the largest major metropolitan area in the State of Florida. Miami-Dade County is unique in that 69% of the county is Hispanic, 20% of persons lack health insurance, and 53% were born outside the US [ 12 ]. Since 2012, the Miami CTSI – comprising University of Miami, Jackson Memorial Health System, and Miami VA Healthcare System – has partnered with the Florida Department of Health (FLDOH) to educate and mobilize at-risk communities via the capacity building of culturally and linguistically diverse CHWs. Recognizing that CHWs serve a vital role in bridging at-risk communities and formal healthcare, in 2010, FLDOH established a Community Health Workers Taskforce (now called the Florida Community Health Worker Coalition (FLCHWC) and incorporated as a nonprofit in 2015). By 2015, the Coalition had developed a formal credentialing pathway for CHWs in the state. As a key member of the task force, the Miami CTSI provided considerable and essential input into that process. Since then, the Miami CTSI has helped develop CHW educational programs that meet training requirements on core competencies and electives for CHW certification or renewal. These programs, developed in partnership with training centers, clinics, local health planning agencies, and the FLDOH, are aimed at expanding the local CHW healthcare workforce’s capacity to address health conditions related to health disparities (e.g., social determinants of health, communication skills, motivational interviewing, and oral and mental health awareness, among others).

The Miami CTSI has been partnering with the FLDOH to develop condition-specific or disease-specific training in response to emergent public health concerns of local county and state health departments. In 2016, when the Zika epidemic in Latin America arrived in Florida, the Miami CTSI developed a Zika/vector-borne disease prevention training module for CHWs that was delivered in both English and Spanish across Miami-Dade County in a short timeframe. That partnership also facilitated a Zika Research Grant Initiative that awarded 12 Florida Department of Health (DOH) grants to University of Miami investigators. Totaling over $13M, the grants focused on vaccine development, new diagnostic testing or therapeutics, and dynamic change team science. In another example, in 2018 the Miami CTSI worked with the FLCHWC and the FLDOH to develop opioid epidemic awareness modules for CHWs. The Miami CTSI has also worked with the FLDOH around HIV workforce development. The training modules that the Miami CTSI helped develop are now offered by the FLDOH. In turn, various University of Miami CTSI-sponsored research projects now have their CHWs undergo the FLDOH HIV training, which the Miami CTSI initially helped develop.

The Miami CTSI also partners with the FLDOH and the Health Council of South Florida to perform community health needs assessments and shares data with the One Florida Clinical Research Consortium (spearheaded by the University of Florida CTSA). The FLDOH is a critical stakeholder in this consortium.

San Francisco

San Francisco is a county and city under unitary governance, with an ethnically diverse population of about 850,000 residents. It has many health sector assets, including a local public health department, a health sciences university (University of California, San Francisco [UCSF]), hospitals and health systems, and robust community-based organizations. Nonetheless, San Francisco has prominent health disparities. For example, relative to whites, hospitalization rates for diabetes are seven times higher among African Americans and twice as high among Latinos [ 13 ]. The vision of the San Francisco CTSI Community Engagement and Health Policy Program is to use an innovative Systems Based Participatory Research model that integrates community-based, practice-based, and policy research methods to advance health equity in the San Francisco Bay Area. This program strengthens the ability of academicians, the community, and the Department of Public Health to conduct stakeholder-engaged research through several strategies. First, the San Francisco Health Improvement Partnership (SFHIP) is a collaboration between academic, public, and community health organizations of San Francisco. It was formed in 2010 “to promote health equity using a novel collective impact model blending community engagement with policy change” [ 13 ]. Three backbone organizations – the San Francisco Department of Public Health, the University of California San Francisco CTSI, and the San Francisco Hospital Council – engage ethnic-based community health coalitions, schools, faith communities, and other sectors on public health initiatives. Using small seed grants from the UCSF CTSI, working groups with diverse membership develop feasible, scalable, sustainable evidence-based interventions, especially policy and structural interventions that improve longer-term health outcomes.
The partnership also includes community health needs assessments and a comprehensive, online data repository of local population health indicators. Results of past initiatives have been powerful. For example, the development of policy and educational interventions to reduce consumption of sugar-sweetened beverages led to new policies and legislation. These included warning labels on advertisements, a new “soda tax,” new filtered tap water stations at parks and other venues in low-income neighborhoods, and movement toward healthy beverage policies at UCSF, Kaiser Permanente, and other large hospitals. The partnership also developed environmental solutions for reducing disparities in alcohol-related health and safety problems. Outcomes included an alcohol outlet mapping tool that powers health research, routine blood alcohol testing in a trauma center, and influence on a new state ban on the sale of powdered alcohol, to name a few. This initiative was spearheaded by community members in neighborhoods affected by high rates of alcohol-related violence, health problems, and public nuisance activities, in collaboration with the San Francisco Police Department and other stakeholders. Using the SFHIP model, UCSF CTSI supported the development of the San Francisco Cancer Initiative, which provided science that has been used to support major community-based policy initiatives such as the banning of menthol cigarettes in San Francisco and more targeted clinical initiatives such as an effort to increase colorectal cancer screening and follow-up activities in local community health centers [ 14 ]. UCSF CTSI also has supported the San Francisco Department of Public Health in the development of its Healthy Cities Initiative, funded by Bloomberg Philanthropies, which seeks to link geocoded electronic health records data across multiple health systems with other neighborhood data to identify community-based strategies to address population health challenges across the city.

Critical Factors and Facilitators

The participating hubs share some foundational similarities and facilitators, although their specific goals and activities are diverse. Across multiple cities, numerous factors were commonly recognized as critical to the success of the partnerships (Table 2). First and foremost, in all locales, the needs of the departments of public health and health services shaped the activities of the CTSA hubs. All partnerships were driven by the priorities of the front-line care providers, patients, and/or the public at large, reflecting the specific goals of each health department. Projects originated with problems as identified by healthcare system leaders and clinicians, public health officials, and/or community members. For example, the USC and UCLA CTSAs in Los Angeles collaborated with the LAC-DHS to use implementation science methods to develop, implement, and evaluate sustainable solutions to health system priorities. In San Francisco, the UCSF CTSI initiated the SFHIP program, but leadership and funding responsibilities were turned over to the San Francisco Department of Public Health to ensure that community stakeholders drove the agenda. The CTSAs provided value to the public health/health systems by serving as conveners; offering expertise in informatics, community health needs assessments, implementation, evaluation, and dissemination; providing education and technical support; collaborating on policy development (whether organizational or governmental policy); and leveraging relationships with community organizations.

Table 2. Key critical factors, facilitators, and barriers

CTSA, Clinical and Translational Science Award.

Since the partnerships developed in response to the public health/health systems’ needs, their goals and activities varied. While the Miami partnership focused on developing workforce capacity, the San Francisco partnership collaborated on policy changes, and the Chicago and Los Angeles partnerships concentrated on building research infrastructure and fostering collaborative research opportunities aligned with public health and health system priorities. By using the academic tools of community-engaged research, healthcare delivery science, implementation, and dissemination research in real-world settings, the partnerships are primed for disruptive innovations in healthcare.

Second, each health department had at least one designated “champion” who helped prioritize partnership activities and advocated for the partnerships to promote tangible and immediate real-life impact. For example, the LAC-DHS Chief Medical Officer has been an enthusiastic champion for the Los Angeles partnership. He co-wrote the pilot funding opportunity request for application (RFA) and offered detailed feedback to each applicant. He was instrumental in establishing and facilitating operations and policy development for the two service cores. His perspective and influence have been critical for initiating the program, refining the program each year, and promoting the research resources available to DHS clinicians. In addition, the UCLA hub created a population health program that is co-led by the Director of Chronic Disease and Injury Prevention within the LAC Department of Public Health. In Chicago, the ongoing involvement of health department leadership with the three CTSA programs through their C3 collaboration promoted a substantial shift in C3 priorities and activities to align more closely with health department programs and practices. This ultimately led to an agreement for the CTSA programs to jointly fund a new position at the health agency to serve as a liaison between the health department and the CTSA programs, despite a city-wide hiring freeze due to statewide budget constraints. In Florida, a Centers for Disease Control and Prevention Policy, Systems and Environment Change grant to the DOH Comprehensive Cancer Control Program created a staff position that was critical to establishing consistent community engagement in developing the capacity of the Florida CHW Coalition to create a credentialing program, maintaining ongoing statewide involvement in promoting CHWs, and elevating the entire South Florida region’s effort to incorporate CHWs in prevention practice and access to care. The rest of the state learned from Miami’s efforts, and Miami was strengthened with the support of the statewide coalition. The staff member was able to devote three-quarters of her time to Coalition development, an arrangement that unfortunately did not continue once the grant ended.

On the academic side, CTSA principal investigators and senior administrators also dedicated significant time and effort to the initiatives beyond monetary resources. CTSA leadership collaborated with the public health/health system champions to set the vision for the initiative, viewed the partnership as a priority for their hub, and exerted the influence needed to drive initiatives forward.

Third, the CTSAs needed the capacity to respond rapidly to key stakeholders and requests. The partnerships have been particularly effective when they have been nimble and responsive to the evolving needs of the local health departments, health systems, and communities. For example, in Miami, the CTSA core trained CHWs and was primed to respond with additional disease-specific training in the setting of the Zika outbreak. The UCLA CTSA offered scientific expertise to the Department of Public Health regarding vaping and e-cigarettes.

Fourth, partnerships can ensure that the community’s voice is heard. By leveraging CTSAs’ Community Engagement Cores, and the longstanding partnerships between public health/healthcare systems and community organizations, the community’s priorities and concerns can be brought to light. For example, the UCSF CTSA leveraged long-term trusting relationships with community groups to engage in reducing disparities in alcohol-related harms. Similarly, in Chicago, the Department of Public Health provided the CTSA representatives with an early view of a new citywide health initiative, Healthy Chicago 2025, to initiate ongoing CTSA involvement in planning and implementation. By being responsive to initiatives and priorities, CTSA goals can be harmonized with partners’ operational objectives.

Fifth, as the healthcare landscape in the United States evolves, these partnerships offer opportunities to enhance translation of evidence to practice, study the effects of various payment models, and inform policy.

Other critical factors and facilitators included a common commitment among all parties to address local health disparities; funding in the form of pilot grants tailored to the needs of the public partners, which several CTSA hubs offered; and maturity of the partnership. In Los Angeles, responsiveness to the pilot funding opportunity improved with each iteration of the funding cycle. In all cities, longer relationships increased trust among the partners.

Lessons Learned, Barriers, Gaps, and Challenges

Numerous barriers have become evident in the infancy of these academic/public health/health system partnerships (Table 2). When evaluating the programs’ experiences, several themes emerged around challenges and the solutions employed to overcome them.

First, there are often competing priorities between the public health/health system and academic partners. All partnerships addressed this by finding areas where the public partners’ priorities aligned with academic expertise. In Miami, they developed disease-specific training in response to emergent public health concerns of local county and state health departments. In Los Angeles, the CTSA pilot funding criteria and prioritization topics were co-developed with DHS. In Chicago, seed funding projects required alignment with C3/CDPH priorities.

Second, partners’ timelines often differ substantially. The public health/healthcare system cannot adjust the pace to accommodate traditional academic endeavors. Individuals making operational decisions typically do not have the luxury of time to collect pilot data and study intervention implementation and outcomes using conventional research timelines. They are given directives to implement changes broadly and swiftly. Nevertheless, integration with academic endeavors can be achieved. One example is emphasizing underutilized research methods in implementation and improvement designed to generate both locally applicable and generalizable knowledge. Another example is embedding academicians in the public health or healthcare system, to ensure that they are involved in the design, planning, implementation, evaluation, and dissemination of initiatives. Academicians may be frustrated by hasty implementation and limitations in evaluation of outcomes, yet public health and health systems do want to base their decisions on good science. Funding cycles and grant review criteria are not consistent with business timelines and priority setting and often do not value the emerging scientific methods that are designed for learning in systems (e.g., implementation science, improvement science, design science). It is possible to undertake rigorous science that balances the competing operational needs and culture between health departments and universities when these partners focus on appropriate methods and problem-solving. In addition, researchers may have difficulty maintaining their academic credentials, gauged by grant portfolios and publication records. This is an important issue for CTSA program leadership locally and nationally, to advance changes in university tenure policies to encourage and promote health services, community-based, and community-engaged research [ 15 ]. 
To that end, sustained and systematic collaboration with local health departments can alleviate logistical barriers to community-engaged research to fulfill the CTSA mandate to promote research that informs policy and practice.

Third, it is critical to skillfully navigate bureaucratic hurdles when working with government entities. Several CTSAs have found it particularly effective to appoint a liaison to the public health/healthcare system. Liaisons acted as bridges between partners, drawing on expertise in multiple areas and access to resources across the partnershipʼs sites. As employees of health departments, often with dual appointments at the partnering university, liaisons understand the needs of health departments on an intimate level. With their connections and operational experience, they can act as navigators and advisors to academicians. For example, in Chicago, the new lead of Research and Evaluation at CDPH and co-chair of C3 helps researchers identify funding opportunities, disseminate research findings, and broker relationships. In addition, she serves on the CTSI community governance bodies for all three Chicago CTSIs. In Los Angeles, each of the CTSAs (UCLA and USC) appointed as their liaison an academician who practices in the DHS system. Moreover, the DHS Chief Medical Officer not only served as a supporter and champion internally but was also on the advisory committees for both USC and UCLA CTSA hubs, supporting a bidirectional strategic relationship. This is reflected in infrastructure for data services and provider workgroups promoting institutionally tailored evidence-based practices and tools [ 16 , 17 ]. In Miami, a trusted staff member served as the primary and long-term point of contact for communication channels and helped train a larger workforce of CHWs as an extension of the liaison model. UCSF explored creating a joint position and subsequently developed “Navigator” roles.

Agreements that make programs sustainable often have to be approved by politicians and health department leaders, and the process for obtaining approval may be complex and time consuming. A strategy for addressing the bureaucratic hurdles is to leverage the tools developed in other partnerships. We have compiled resources, including a Request for Proposals and a position description, that may be helpful to others developing similar collaborations (see Supplementary Materials). In cases where longstanding educational partnerships and agreements are in place, agreements and policies devoted to supporting translational research may build upon relationships and roles that establish faculty in leadership positions that advance research.

Fourth, unstable funding threatens the success of these partnerships. Funding is a critical factor in developing informatics and research infrastructure, workforce development, and research and evaluation. Key positions such as the liaison between the CTSI and the public health/health system should be prioritized to ensure the success of these partnerships. Strategies to address this barrier include leveraging existing resources, applying for funding from diverse sources, and being creative with resource utilization. On the other hand, mechanisms and policies for accepting funding from grants into operating budgets can also prove challenging. Three of the four LAC-DHS hospitals have an established research foundation to administer grant funding for clinician-researchers; however, these entities do not have contact with the healthcare budgeting organizations that would support resources for information technology, space, or support staff. The unpredictability of research funding is reflected in the absence of investment or awareness of procedures for accepting relatively small funds for investigator-initiated awards.

Fifth, for CTSIs collaborating with public entities, navigating a political landscape presents unique challenges. Examples include policy initiatives that could threaten corporations and well-funded industries; projects that span various public entities’ purviews (e.g., Public Health vs. Health Services vs. Mental Health); responding to politicians’ priorities; and shifting gears when administrations change. Partnerships that rely heavily on a single influential champion without associated agreements, policies, and procedures are vulnerable to leadership changes. Strong stakeholder engagement and a well-developed infrastructure are critical to navigating the political sphere successfully and to sustaining the partnership.

Finally, academicians’ tools may not be well-suited to the public health systems’ needs. For example, in our Los Angeles partnership, although the UCLA and USC CTSIs had knowledge and expertise in implementation science, LAC-DHS was more interested in health delivery science, execution, operationalization, and evaluation. Rather than detailed evaluation of facilitators and barriers of implementation, they desired broad and swift implementation of interventions that reduced resource utilization while improving quality of care. Academicians have typically used an incremental approach, which often requires additional resources, whereas LAC-DHS was more interested in disruptive approaches. We found that the best way to address the lack of alignment between the needs and the academic tools was to connect researchers with leaders in the public healthcare system early in the process of proposal development and to connect researchers with methodologists who focus on applied science in public delivery systems. Other potential solutions include expanding educational offerings for academicians, providing mentored hands-on experience, embedding researchers in public health/healthcare settings, training health department leaders in research, training community members in results dissemination, and offering incentives for cost-saving.

Unique CTSA hub collaborations with city, county, and state health organizations are driving innovations in health service delivery and population health in four large metropolitan areas. A common element among all partnerships was the CTSA hubs’ alignment of activities with the needs of the city/county partners. Other critical factors included having designated “champions” in health departments, CTSAs’ ability to respond quickly to evolving needs, and a common commitment to addressing local health disparities. Most programs encountered similar barriers, including competing priorities, different timelines, bureaucratic hurdles, and unstable funding. The academic–public partnerships have explored numerous strategies for addressing these barriers. These partnerships offer a model for innovatively disrupting healthcare and enhancing population health.

Finding areas of common ground is key. While universities and public health/healthcare systems differ in their priorities, timelines, and modus operandi, successful partnerships are poised to answer some of the critical questions in health policy, including how to deliver critical services to populations in a cost-effective manner and how to address the needs of the public. Many of these challenges are not unique to partnerships between academic centers and public systems. Some of the experiences apply equally to academic medical centers that are increasingly acquiring large private healthcare organizations without an established culture of education and research. If CTSA programs are to have a substantive impact on population health, significant expansion beyond academic medical centers is needed to address the full range of social determinants of health (e.g., housing instability, concentrated poverty, chronic unemployment). Public health departments are ideal partners to consider the bidirectional relation of social determinants and health disparities [ 18 ].

Limitations

First, this manuscript focused on partnerships between CTSAs and public entities such as Departments of Public Health or Departments of Health Services. Yet, CTSAs also have broad-ranging activities engaging communities. Second, public health and health systems have extensive collaborations with researchers and local, national, and international foundations, beyond the CTSAs. PCORnet, for example, has funded nine Clinical Research Networks; several include collaborations between universities and public health systems. Although these partnerships have been impactful, they are beyond the scope of this paper. Third, we have detailed the experiences of seven CTSAs in four large metropolitan areas. These findings and experiences may not be generalizable to other settings, particularly nonurban areas. Fourth, while we provided the experience of seven CTSAs, other CTSAs may have partnerships with their local city/county/state health departments. Rather than providing a comprehensive review of all CTSA/public health/health system partnerships, our hope was to stimulate more discussion around these partnerships.

Future Directions

There are several ways in which collaborations among CTSA programs and public sector health departments can be optimized. First, CTSA programs can prioritize opportunities for workforce development on policy-relevant research through sponsored internships and practica for graduate students and faculty. For students, these training opportunities could be aligned with core program goals across CTSA-affiliate programs in health-related fields (e.g., medicine, public health, psychology, dentistry) to provide a community perspective and promote an awareness of public sector needs early in training. For faculty, innovative funding opportunities could be modeled on sabbatical leaves of absence, perhaps aligned with pilot seed funding for promising research proposals.

In addition, formal lines of communication between health departments and CTSA program leadership could be encouraged by the National Center for Advancing Translational Sciences (NCATS) in RFA announcements and program reviews. Encouraging each CTSA program to have at least one public sector representative on external advisory boards could also expedite cross-channel communication. Prioritizing rapid and consistent communication could help to bridge the gap between biomedical researchers and public health/health system leadership. This is especially important for early-stage research to encourage an appreciation for community resources and needs and to anticipate common barriers to implementation research [ 19 ]. In addition, ongoing feedback across CTSA and health department leadership could provide new opportunities for bidirectional exchanges that can lead to new research opportunities as well as adaptations in ongoing research to improve community-level outcomes.

A related challenge to sustaining changes is the paucity of focus on execution and operationalization. Historically, a missing link has been failure to acknowledge and address the challenges lying between an idea or proven intervention and its implementation. Randomized trials in controlled academic settings can, at best, be considered proofs-of-concept in other settings. In addition to implementation science, a key focus should be on improvements in effective operational management and culture change. The DHS-USC-UCLA partnership has worked to close this gap in two ways. First, the DHS has hired, coached, and empowered multiple academically trained physicians from both the UCLA and USC CTSI hubs. These academically trained health services researchers have become key DHS leaders and operational managers within the clinical care delivery system. Second, the partnership has used behavioral economics as an efficient and effective culture change tool in healthcare delivery. The sustainability and retention of these types of programs and partnerships may be less financial and more cultural: a “tipping point” may require organizational dissemination and incentive alignment from the top down to cultivate operational mechanisms and durable pathways to success.

Overall, the goal is to promote research that informs health policy and to encourage health policy that is informed by research. These collaborations show that this goal is best accomplished by a strategic alliance of CTSA programs and health departments. As is evident from the examples of these four cities, new opportunities for shared data and resources emerge from ongoing discussions of shared priorities. The health departments benefit by allocation of CTSA program trainees and funding, and the CTSA programs gain valuable insight and access into community health and health system needs and resources. Ultimately, the alliances promote the overall goal of translational science to inform and improve population health.

Acknowledgments

The authors wish to thank the public officials, researchers, administrators, champions, liaisons, and community members who contributed to the success of each partnership. We also wish to thank the staff at the Center for Leading Innovation and Collaboration (CLIC) for their support in developing this manuscript.

This work was funded in part by the University of Rochester CLIC, under Grant U24TR002260. CLIC is the coordinating center for the CTSA Program, funded by the NCATS at the National Institutes of Health (NIH). This work was also supported by grants UL1TR001855, UL1TR001881, UL1TR002736, UL1TR001872, UL1TR001422, UL1TR000050, UL1TR002389 from NCATS. This work is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Supplementary material

For supplementary material accompanying this paper visit http://dx.doi.org/10.1017/cts.2020.23.

Disclosures

The authors have no conflicts of interest to declare.

- 1. Woolf SH. The meaning of translational research and why it matters. Journal of the American Medical Association 2008; 299: 211–213.

- 2. Meslin EM, Blasimme A, Cambon-Thomsen A. Mapping the translational science policy ‘valley of death’. Clinical and Translational Medicine 2013; 2: 14.

- 3. Glasgow RE, et al. National institutes of health approaches to dissemination and implementation science: current and future directions. American Journal of Public Health 2012; 102: 1274–1281.

- 4. McGovern L, Miller G, Hughes-Cromwick P. The Relative Contribution of Multiple Determinants to Health Outcomes. Health Affairs Health Policy Briefs. Princeton, NJ: Robert Wood Johnson Foundation, 2014.

- 5. Bradley EH, Taylor LA. The American Health Care Paradox: Why Spending More is Getting Us Less. New York, NY: PublicAffairs, 2013.

- 6. U.S. Department of Health & Human Services. FY2016 Budget in Brief – Centers for Medicare & Medicaid Services Overview [Internet], 2015. [cited Oct 28, 2019]. (https://www.hhs.gov/about/budget/fy2016/budget-in-brief/index.html)

- 7. Brownson R, Fielding J, Green L. Building capacity for evidenced-based public health: reconciling the pulls of practice and the push of research. Annual Review of Public Health 2018; 39: 27–53.

- 8. Estabrooks PA, Brownson RC, Pronk NP. Dissemination and implementation science for public health professionals: an overview and call to action. Preventing Chronic Disease 2018; 15: E162.

- 9. Gilliland CT, et al. The fundamental characteristics of a translational scientist. ACS Pharmacology & Translational Science 2019; 2: 213–216.

- 10. Dircksen JC, et al. Healthy Chicago 2.0: Partnering to Improve Health Equity. City of Chicago, March 2016.

- 11. Daskivich LP, et al. Implementation and evaluation of a large-scale teleretinal diabetic retinopathy screening program in the Los Angeles county department of health services. JAMA Internal Medicine 2017; 177: 642–649.

- 12. United States Census Bureau. QuickFacts Miami-Dade County, Florida [Internet], 2018. [cited Oct 28, 2019]. (https://www.census.gov/quickfacts/fact/table/miamidadecountyflorida/PST045218)

- 13. Grumbach K, et al. Achieving health equity through community engagement in translating evidence to policy: the San Francisco Health Improvement Partnership, 2010–2016. Preventing Chronic Disease 2017; 14: E27.

- 14. Hiatt RA, et al. The San Francisco cancer initiative: a community effort to reduce the population burden of cancer. Health Affairs (Millwood) 2018; 37: 54–61.

- 15. Marrero DG, et al. Promotion and tenure for community-engaged research: an examination of promotion and tenure support for community-engaged research at three universities collaborating through a Clinical and Translational Science Award. Clinical and Translational Science 2013; 6: 204–208.

- 16. Soni SM, Giboney P, Yee HF. Development and implementation of expected practices to reduce inappropriate variations in clinical practice. JAMA 2016; 315: 2163–2164.

- 17. Barnett ML, et al. A health plan’s formulary led to reduced use of extended-release opioids but did not lower overall opioid use. Health Affairs 2018; 37: 1509–1516.

- 18. Marmot MG, Bell R. Action on health disparities in the United States: commission on social determinants of health. JAMA 2009; 301: 1169–1171.

- 19. Dodson EA, Baker EA, Brownson RC. Use of evidence-based interventions in state health departments: a qualitative assessment of barriers and solutions. Journal of Public Health Management & Practice 2010; 16: E9–E15.

The Research Gap (Literature Gap)

If you’re just starting out in research, chances are you’ve heard about the elusive research gap (also called a literature gap). In this post, we’ll explore the tricky topic of research gaps. We’ll explain what a research gap is, look at the four most common types of research gaps, and unpack how you can go about finding a suitable research gap for your dissertation, thesis or research project.

Overview: Research Gap 101

- What is a research gap

- Four common types of research gaps

- Practical examples

- How to find research gaps

- Recap & key takeaways

What (exactly) is a research gap?

Well, at the simplest level, a research gap is essentially an unanswered question or unresolved problem in a field, which reflects a lack of existing research in that space. Alternatively, a research gap can also exist when there’s already a fair amount of existing research, but the findings of the studies pull in different directions, making it difficult to draw firm conclusions.

For example, let’s say your research aims to identify the cause (or causes) of a particular disease. Upon reviewing the literature, you may find that there’s a body of research that points toward cigarette smoking as a key factor – but at the same time, a large body of research that finds no link between smoking and the disease. In that case, you may have something of a research gap that warrants further investigation.

Now that we’ve defined what a research gap is – an unanswered question or unresolved problem – let’s look at a few different types of research gaps.

Types of research gaps

While there are many different types of research gaps, the four most common ones we encounter when helping students at Grad Coach are as follows:

- The classic literature gap

- The disagreement gap

- The contextual gap, and

- The methodological gap

1. The Classic Literature Gap

First up is the classic literature gap. This type of research gap emerges when there’s a new concept or phenomenon that hasn’t been studied much, or at all. For example, when a social media platform is launched, there’s an opportunity to explore its impacts on users, how it could be leveraged for marketing, its impact on society, and so on. The same applies to new technologies, new modes of communication, transportation, etc.

Classic literature gaps can present exciting research opportunities, but a drawback you need to be aware of is that with this type of research gap, you’ll be exploring completely new territory. This means you’ll have to draw on adjacent literature (that is, research in adjacent fields) to build your literature review, as there naturally won’t be very many existing studies that directly relate to the topic. While this is manageable, it can be challenging for first-time researchers, so be careful not to bite off more than you can chew.

2. The Disagreement Gap

As the name suggests, the disagreement gap emerges when there are contrasting or contradictory findings in the existing research regarding a specific research question (or set of questions). The hypothetical example we looked at earlier regarding the causes of a disease reflects a disagreement gap.

Importantly, for this type of research gap, there needs to be a relatively balanced set of opposing findings. In other words, a situation where 95% of studies find one result and 5% find the opposite result wouldn’t quite constitute a disagreement in the literature. Of course, it’s hard to quantify exactly how much weight to give to each study, but you’ll need to at least show that the opposing findings aren’t simply a corner-case anomaly.
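As a rough illustration of this “balance” idea, the sketch below tallies how many studies support each finding and checks whether the minority view clears a cut-off. The function names and the 25% threshold are assumptions made purely for illustration; there is no agreed cut-off, and a simple count ignores differences in study quality.

```python
from collections import Counter


def minority_share(findings):
    """Return the share of studies backing the least common finding."""
    counts = Counter(findings)
    if len(counts) < 2:
        return 0.0  # everyone agrees, so there is no opposing camp
    return min(counts.values()) / len(findings)


def is_disagreement_gap(findings, threshold=0.25):
    """Hypothetical rule of thumb: the minority view must reach `threshold`."""
    return minority_share(findings) >= threshold


# 95 studies find a link and 5 do not: too lopsided to count as a disagreement.
lopsided = ["link"] * 95 + ["no link"] * 5
# 11 versus 9 is a far more balanced split.
balanced = ["link"] * 11 + ["no link"] * 9

print(is_disagreement_gap(lopsided))  # False
print(is_disagreement_gap(balanced))  # True
```

In practice you would still read the minority studies closely, since a handful of rigorous studies may matter more than a larger number of weak ones.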

3. The Contextual Gap

The third type of research gap is the contextual gap. Simply put, a contextual gap exists when there’s already a decent body of existing research on a particular topic, but an absence of research in specific contexts.

For example, there could be a lack of research on:

- A specific population – perhaps a certain age group, gender or ethnicity

- A geographic area – for example, a city, country or region

- A certain time period – perhaps the bulk of the studies took place many years or even decades ago and the landscape has changed.

The contextual gap is a popular option for dissertations and theses, especially for first-time researchers, as it allows you to develop your research on a solid foundation of existing literature and potentially even use existing survey measures.

Importantly, if you’re gonna go this route, you need to ensure that there’s a plausible reason why you’d expect potential differences in the specific context you choose. If there’s no reason to expect different results between existing and new contexts, the research gap wouldn’t be well justified. So, make sure that you can clearly articulate why your chosen context is “different” from existing studies and why that might reasonably result in different findings.

4. The Methodological Gap

Last but not least, we have the methodological gap. As the name suggests, this type of research gap emerges as a result of the research methodology or design of existing studies. With this approach, you’d argue that the methodology of existing studies is lacking in some way, or that they’re missing a certain perspective.

For example, you might argue that the bulk of the existing research has taken a quantitative approach, and therefore there is a lack of rich insight and texture that a qualitative study could provide. Similarly, you might argue that existing studies have primarily taken a cross-sectional approach, and as a result, have only provided a snapshot view of the situation – whereas a longitudinal approach could help uncover how constructs or variables have evolved over time.

Practical Examples

Let’s take a look at some practical examples so that you can see how research gaps are typically expressed in written form. Keep in mind that these are just examples – not actual current gaps (we’ll show you how to find these a little later!).

Context: Healthcare

Despite extensive research on diabetes management, there’s a research gap in terms of understanding the effectiveness of digital health interventions in rural populations (compared to urban ones) within Eastern Europe.

Context: Environmental Science

While a wealth of research exists regarding plastic pollution in oceans, there is significantly less understanding of microplastic accumulation in freshwater ecosystems like rivers and lakes, particularly within Southern Africa.

Context: Education

While empirical research surrounding online learning has grown over the past five years, there remains a lack of comprehensive studies regarding the effectiveness of online learning for students with special educational needs.

As you can see in each of these examples, the author begins by clearly acknowledging the existing research and then explains where the shortfall (i.e., the research gap) lies.

How To Find A Research Gap

Now that you’ve got a clearer picture of the different types of research gaps, the next question is, of course, “how do you find these research gaps?”

Well, we cover the process of how to find original, high-value research gaps in a separate post. But, for now, I’ll share a basic two-step strategy here to help you find potential research gaps.

As a starting point, you should find as many literature reviews, systematic reviews and meta-analyses as you can, covering your area of interest. Additionally, you should dig into the most recent journal articles to wrap your head around the current state of knowledge. It’s also a good idea to look at recent dissertations and theses (especially doctoral-level ones). Dissertation databases such as ProQuest, EBSCO and Open Access are a goldmine for this sort of thing. Importantly, make sure that you’re looking at recent resources (ideally those published in the last year or two), or the gaps you find might have already been plugged by other researchers.

Once you’ve gathered a meaty collection of resources, the section that you really want to focus on is the one titled “further research opportunities” or “further research is needed”. In this section, the researchers will explicitly state where more studies are required – in other words, where potential research gaps may exist. You can also look at the “limitations” section of the studies, as this will often spur ideas for methodology-based research gaps.
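If you have the text of your sources in digital form, a quick first pass over them can be sketched as follows. This is a minimal illustration, not a substitute for reading the papers: the cue phrases, the function name `find_gap_sentences` and the sample text are all made up for the example, and the sentence splitting is deliberately naive.

```python
import re

# Hypothetical cue phrases; extend the list to suit your field.
CUE_PATTERN = re.compile(
    r"further research|future research|future studies|limitation",
    re.IGNORECASE,
)


def find_gap_sentences(text):
    """Return the sentences in `text` that contain one of the cue phrases."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if CUE_PATTERN.search(s)]


# Invented sample text, standing in for the closing section of a paper.
sample = (
    "We surveyed 200 nurses in urban clinics. "
    "A limitation of this study is its cross-sectional design. "
    "Further research is needed in rural settings."
)

for sentence in find_gap_sentences(sample):
    print(sentence)
```

Run over a folder of abstracts or conclusions, a filter like this can help you decide which papers to read in full first.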

By following this process, you’ll orient yourself with the current state of research, which will lay the foundation for you to identify potential research gaps. You can then start drawing up a shortlist of ideas and evaluating them as candidate topics. But remember, make sure you’re looking at recent articles – there’s no use going down a rabbit hole only to find that someone’s already filled the gap 🙂

Let’s Recap

We’ve covered a lot of ground in this post. Here are the key takeaways:

- A research gap is an unanswered question or unresolved problem in a field, which reflects a lack of existing research in that space.

- The four most common types of research gaps are the classic literature gap, the disagreement gap, the contextual gap and the methodological gap.

- To find potential research gaps, start by reviewing recent journal articles in your area of interest, paying particular attention to the “further research is needed” (FRIN) section.

If you’re keen to learn more about research gaps and research topic ideation in general, be sure to check out the rest of the Grad Coach Blog. Alternatively, if you’re looking for 1-on-1 support with your dissertation, thesis or research project, be sure to check out our private coaching service.
