What Is Operant Conditioning? Definition and Examples


- Ph.D., Psychology, Fielding Graduate University

- M.A., Psychology, Fielding Graduate University

- B.A., Film Studies, Cornell University

Operant conditioning occurs when an association is made between a particular behavior and a consequence for that behavior. This association is built upon the use of reinforcement and/or punishment to encourage or discourage behavior. Operant conditioning was first defined and studied by behavioral psychologist B.F. Skinner, who conducted several well-known operant conditioning experiments with animal subjects.

Key Takeaways: Operant Conditioning

- Operant conditioning is the process of learning through reinforcement and punishment.

- In operant conditioning, behaviors are strengthened or weakened based on the consequences of that behavior.

- Operant conditioning was defined and studied by behavioral psychologist B.F. Skinner.

B.F. Skinner was a behaviorist, which means he believed that psychology should be limited to the study of observable behaviors. While other behaviorists, like John B. Watson, focused on classical conditioning, Skinner was more interested in the learning that happened through operant conditioning.

He observed that in classical conditioning, responses tend to be triggered by innate reflexes that occur automatically. He called this kind of behavior respondent. He distinguished respondent behavior from operant behavior. Operant behavior was the term Skinner used to describe a behavior that is reinforced by the consequences that follow it. Those consequences play an important role in whether or not a behavior is performed again.

Skinner’s ideas were based on Edward Thorndike’s law of effect, which stated that behavior that elicits positive consequences will probably be repeated, while behavior that elicits negative consequences will probably not be repeated. Skinner introduced the concept of reinforcement into Thorndike’s ideas, specifying that behavior that is reinforced will probably be repeated (or strengthened).

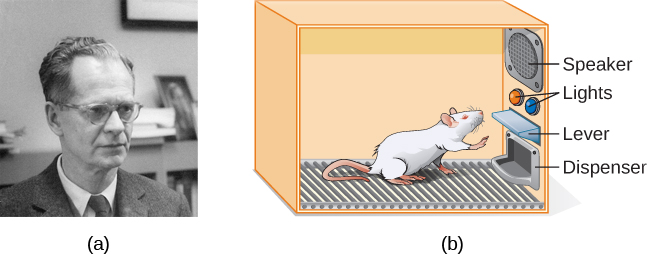

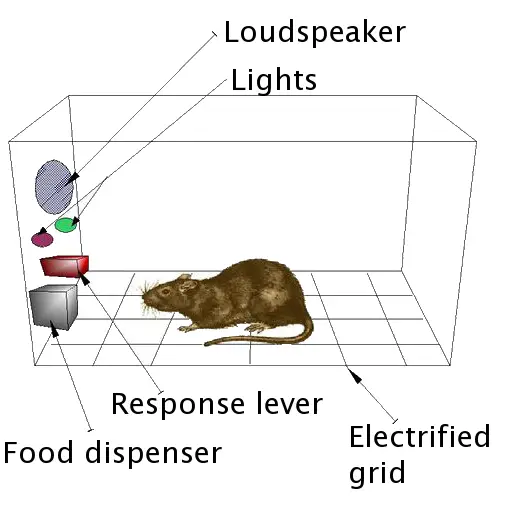

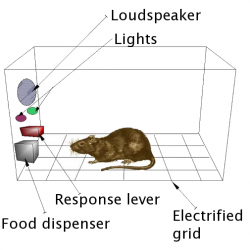

To study operant conditioning, Skinner conducted experiments using a “Skinner Box,” a small box that had a lever at one end that would provide food or water when pressed. An animal, like a pigeon or rat, was placed in the box where it was free to move around. Eventually the animal would press the lever and be rewarded. Skinner found that this process resulted in the animal pressing the lever more frequently. Skinner would measure learning by tracking the rate of the animal’s responses when those responses were reinforced.

Reinforcement and Punishment

Through his experiments, Skinner identified the different kinds of reinforcement and punishment that encourage or discourage behavior.

Reinforcement

Reinforcement that closely follows a behavior will encourage and strengthen that behavior. There are two types of reinforcement:

- Positive reinforcement occurs when a behavior results in a favorable outcome, e.g. a dog receiving a treat after obeying a command, or a student receiving a compliment from the teacher after behaving well in class. These techniques increase the likelihood that the individual will repeat the desired behavior in order to receive the reward again.

- Negative reinforcement occurs when a behavior results in the removal of an unfavorable experience, e.g. an experimenter ceasing to give a monkey electric shocks when the monkey presses a certain lever. In this case, the lever-pressing behavior is reinforced because the monkey will want to remove the unfavorable electric shocks again.

In addition, Skinner identified two different kinds of reinforcers.

- Primary reinforcers naturally reinforce behavior because they are innately desirable, e.g. food.

- Conditioned reinforcers reinforce behavior not because they are innately desirable, but because we learn to associate them with primary reinforcers. For example, paper money is not innately desirable, but it can be used to acquire innately desirable goods, such as food and shelter.

Punishment

Punishment is the opposite of reinforcement. When punishment follows a behavior, it discourages and weakens that behavior. There are two kinds of punishment.

- Positive punishment (or punishment by application) occurs when a behavior is followed by an unfavorable outcome, e.g. a parent spanking a child after the child uses a curse word.

- Negative punishment (or punishment by removal) occurs when a behavior leads to the removal of something favorable, e.g. a parent who denies a child their weekly allowance because the child has misbehaved.

Although punishment is still widely used, Skinner and many other researchers found that punishment is not always effective. Punishment can suppress a behavior for a time, but the undesired behavior tends to come back in the long run. Punishment can also have unwanted side effects. For example, a child who is punished by a teacher may become uncertain and fearful because they don’t know exactly what to do to avoid future punishments.

Instead of punishment, Skinner and others suggested reinforcing desired behaviors and ignoring unwanted behaviors. Reinforcement tells an individual what behavior is desired, while punishment only tells the individual what behavior isn’t desired.

Behavior Shaping

Operant conditioning can lead to increasingly complex behaviors through shaping, also referred to as the “method of approximations.” Shaping happens in a step-by-step fashion as each part of a more intricate behavior is reinforced. Shaping starts by reinforcing the first part of the behavior. Once that piece of the behavior is mastered, reinforcement only happens when the second part of the behavior occurs. This pattern of reinforcement is continued until the entire behavior is mastered.

For example, when a child is taught to swim, she may initially be praised just for getting in the water. She is praised again when she learns to kick, and again when she learns specific arm strokes. Finally, she is praised for propelling herself through the water by performing a specific stroke and kicking at the same time. Through this process, an entire behavior has been shaped.

Schedules of Reinforcement

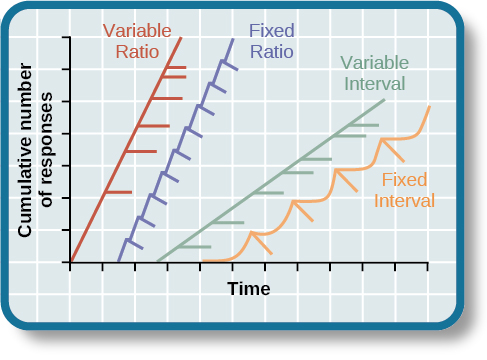

In the real world, behavior is not constantly reinforced. Skinner found that the frequency of reinforcement can impact how quickly and how successfully one learns a new behavior. He specified several reinforcement schedules, each with different timing and frequencies.

- Continuous reinforcement occurs when a reinforcer follows each and every performance of a given behavior. Learning happens rapidly with continuous reinforcement. However, if reinforcement is stopped, the behavior will quickly decline and ultimately stop altogether, which is referred to as extinction.

- Fixed-ratio schedules reward behavior after a specified number of responses. For example, a child may get a star after every fifth chore they complete. On this schedule, the response rate slows right after the reward is delivered.

- Variable-ratio schedules vary the number of behaviors required to get a reward. This schedule leads to a high rate of responses and is also hard to extinguish because its variability maintains the behavior. Slot machines use this kind of reinforcement schedule.

- Fixed-interval schedules provide a reward after a specific amount of time passes. Getting paid by the hour is one example of this kind of reinforcement schedule. Much like the fixed-ratio schedule, the response rate increases as the reward approaches but slows down right after the reward is received.

- Variable-interval schedules vary the amount of time between rewards. For example, a child who receives an allowance at various times during the week as long as they’ve exhibited some positive behaviors is on a variable-interval schedule. The child will continue to exhibit positive behavior in anticipation of eventually receiving their allowance.
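To make the ratio-based schedules above concrete, here is a minimal Python sketch. It treats continuous reinforcement as a fixed ratio of 1. The function names, and the choice to draw the variable requirement uniformly from 1 to `2 * mean_n - 1` (so that it averages `mean_n`), are assumptions for illustration rather than anything specified by Skinner.

```python
import random


def fixed_ratio(n):
    """Return a function that reports a reward on every n-th response."""
    count = 0

    def respond():
        nonlocal count
        count += 1
        if count >= n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """Reward after a varying number of responses that averages mean_n."""
    required = rng.randint(1, 2 * mean_n - 1)
    count = 0

    def respond():
        nonlocal required, count
        count += 1
        if count >= required:
            count = 0
            required = rng.randint(1, 2 * mean_n - 1)  # new, unknown target
            return True
        return False

    return respond


star_chart = fixed_ratio(5)  # a star after every fifth chore
print([star_chart() for _ in range(10)])
# [False, False, False, False, True, False, False, False, False, True]

slot_machine = variable_ratio(5, random.Random(0))
print(sum(slot_machine() for _ in range(1000)), "wins in 1000 pulls")
```

On the fixed-ratio schedule the learner can count down to the next reward; on the variable-ratio schedule the next reward could always be one response away, which is one way to see why that schedule is hard to extinguish.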

Examples of Operant Conditioning

If you’ve ever trained a pet or taught a child, you have likely used operant conditioning in your own life. Operant conditioning is still frequently used in various real-world circumstances, including in the classroom and in therapeutic settings.

For example, a teacher might reinforce students doing their homework regularly by periodically giving pop quizzes that ask questions similar to recent homework assignments. Also, if a child throws a temper tantrum to get attention, the parent can ignore the behavior and then acknowledge the child again once the tantrum has ended.

Operant conditioning is also used in behavior modification, an approach to the treatment of numerous issues in adults and children, including phobias, anxiety, bedwetting, and many others. One way behavior modification can be implemented is through a token economy, in which desired behaviors are reinforced by tokens in the form of digital badges, buttons, chips, stickers, or other objects. Eventually these tokens can be exchanged for real rewards.
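A token economy is essentially a small ledger: desired behaviors add tokens, and rewards cost tokens. The Python sketch below shows that bookkeeping under invented assumptions; the class name `TokenEconomy`, the behaviors, the token values, and the reward cost are all hypothetical and not drawn from any real program.

```python
class TokenEconomy:
    """Track tokens earned for desired behaviors and spent on rewards."""

    def __init__(self, token_values, reward_costs):
        self.token_values = dict(token_values)  # behavior -> tokens earned
        self.reward_costs = dict(reward_costs)  # reward -> tokens required
        self.balance = 0

    def record(self, behavior):
        """Add tokens for a desired behavior; other behaviors earn nothing."""
        earned = self.token_values.get(behavior, 0)
        self.balance += earned
        return earned

    def redeem(self, reward):
        """Exchange tokens for a reward if the balance covers its cost."""
        cost = self.reward_costs[reward]
        if cost > self.balance:
            return False
        self.balance -= cost
        return True


# Hypothetical classroom setup
economy = TokenEconomy(
    token_values={"finished homework": 2, "helped classmate": 1},
    reward_costs={"extra recess": 5},
)
economy.record("finished homework")
economy.record("helped classmate")
economy.record("talked out of turn")   # not a rewarded behavior
print(economy.redeem("extra recess"))  # not enough tokens yet
economy.record("finished homework")
print(economy.redeem("extra recess"))  # balance now covers the cost
```

In this sketch the tokens play the role of conditioned reinforcers: they have no value of their own and matter only because they can be exchanged for something desirable.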

While operant conditioning can explain many behaviors and is still widely used, there are several criticisms of the process. First, operant conditioning is accused of being an incomplete explanation for learning because it neglects the role of biological and cognitive elements.

In addition, operant conditioning is reliant upon an authority figure to reinforce behavior and ignores the role of curiosity and an individual's ability to make his or her own discoveries. Critics object to operant conditioning's emphasis on controlling and manipulating behavior, arguing that it can lead to authoritarian practices. Skinner believed that environments naturally control behavior, however, and that people can choose to use that knowledge for good or ill.

Finally, because Skinner’s observations about operant conditioning relied on experiments with animals, he is criticized for extrapolating from his animal studies to make predictions about human behavior. Some psychologists believe this kind of generalization is flawed because humans and non-human animals are physically and cognitively different.



Operant Conditioning (Examples + Research)

If you're on this page, you're probably researching B.F. Skinner and his work on operant conditioning! You might be surprised to see how much conditioning you go through each day! We are conditioned to behave in certain ways every day. Our brains naturally gravitate toward the things that bring us pleasure and back away from things that bring us pain. When we connect our behaviors to pleasure and pain, we become conditioned.

When people are subjected to reinforcements (pleasure) and punishments (pain), they undergo operant conditioning. This article will describe operant conditioning, how it works, and how different schedules of reinforcement can increase the rate at which subjects perform a certain behavior.

What is Operant Conditioning?

Operant conditioning is a system of learning that happens by changing external variables called 'punishments' and 'rewards.' Through time and repetition, learning happens when an association is created between a certain behavior and the consequence of that behavior (good or bad).

You might also hear this concept called “instrumental conditioning” or “Skinnerian conditioning.” This second term comes from B.F. Skinner, the behaviorist who discovered operant conditioning through his work with pigeons.

He created what is now known as the “Skinner box,” a device that contained a lever, disc, or other mechanism. Something would occur when the levers were pulled or the discs were pressed. Food would appear, lights would flash, the floor would become electric, etc.

Skinner placed pigeons inside these boxes and recorded how their responses changed depending on the consequences that followed a given action.

Based on how the pigeons responded to the consequences of their actions, and the resulting changes in their behavior, Skinner developed the idea of operant conditioning.

How Does Operant Conditioning Work?

We can unearth the definition of operant conditioning by breaking it down. Skinner defined an operant as any "active behavior that operates upon the environment to generate consequences." You get a big hug whenever you tell your mother she looks pretty. That compliment is an operant.

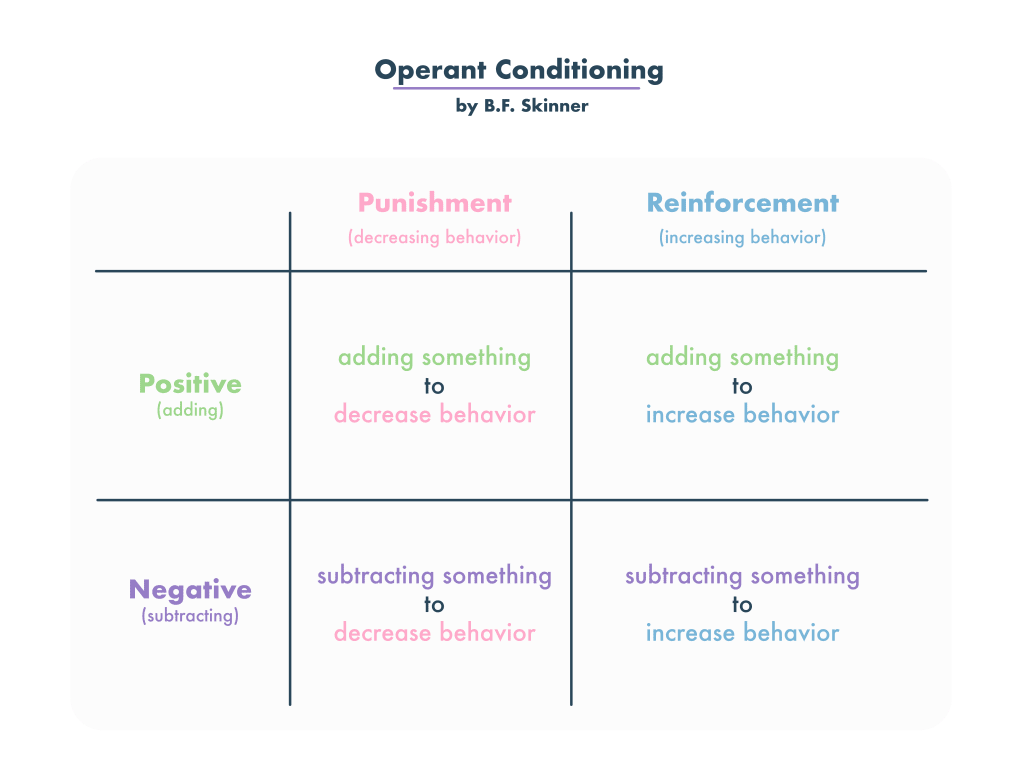

In operant conditioning, you can change two variables to achieve two goals.

The variables you can change are adding a stimulus or removing a stimulus.

The goals you can achieve are increasing a behavior or decreasing a behavior.

Depending on what goal you're trying to achieve and how you manipulate the variable, there are four methods of operant conditioning:

- Positive Reinforcement

- Negative Reinforcement

- Positive Punishment

- Negative Punishment

| | Increase Behavior | Decrease Behavior |
|---|---|---|
| Add Stimulus | Positive Reinforcement | Positive Punishment |
| Remove Stimulus | Negative Reinforcement | Negative Punishment |

Remembering operant conditioning types can be difficult, but here's a simple cheat sheet to help you.

Reinforcement is increasing a behavior.

Punishment is decreasing behavior.

The positive prefix means you're adding a stimulus.

The negative prefix means you're removing the stimulus.
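The cheat sheet boils down to a two-way lookup, which a short Python sketch can make explicit. The function name and the string labels `"add"`, `"remove"`, `"increase"`, and `"decrease"` are hypothetical choices for this illustration.

```python
def classify_consequence(stimulus_change, behavior_effect):
    """Name the operant conditioning method for a given combination.

    stimulus_change: "add" or "remove" (hypothetical labels)
    behavior_effect: "increase" or "decrease" (hypothetical labels)
    """
    prefixes = {"add": "positive", "remove": "negative"}
    kinds = {"increase": "reinforcement", "decrease": "punishment"}
    if stimulus_change not in prefixes or behavior_effect not in kinds:
        raise ValueError("expected add/remove and increase/decrease")
    return f"{prefixes[stimulus_change]} {kinds[behavior_effect]}"


print(classify_consequence("add", "increase"))     # positive reinforcement
print(classify_consequence("remove", "increase"))  # negative reinforcement
print(classify_consequence("add", "decrease"))     # positive punishment
print(classify_consequence("remove", "decrease"))  # negative punishment
```

The prefix depends only on what happens to the stimulus and the noun depends only on what happens to the behavior, so neither word says anything about whether the experience is pleasant.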

Reinforcement

Positive reinforcement sounds redundant - isn’t all reinforcement positive? In psychology, “positive” doesn’t exactly mean what you think it means. The term “positive reinforcement” simply refers to the idea that you have added a stimulus to increase a behavior. Dessert after finishing your chores is positive reinforcement.

Negative reinforcement is the removal of a stimulus to reinforce a behavior. It’s not always a negative experience. Removing debt from your account is considered negative reinforcement. A night without chores is also a negative reinforcement.

Under the umbrella of negative reinforcement are escape and active avoidance. These types of negative reinforcement condition your behavior through the threat or existence of “bad” stimuli.

Escape Learning

Escape learning is a crucial adaptive mechanism that enables a subject to minimize or prevent exposure to aversive stimuli. By understanding the dynamics of escape learning, we can gain insights into how organisms, including humans, respond to threatening or harmful situations. Martin Seligman's experiments with dogs illustrated the principle: the dogs learned to change their behavior to escape a negative stimulus. This form of learning highlights the ways in which adverse conditions can motivate behaviors that alleviate discomfort or pain.

Active Avoidance Learning

Active avoidance learning is not just a theoretical concept; it has real-world applications in understanding our daily behaviors and decision-making processes. By recognizing the patterns in which we actively avoid negative stimuli, therapists and educators can design interventions to help individuals address anxieties or phobias. For instance, we actively prevent discomfort by putting on a coat to avoid the cold. Recognizing these patterns provides a foundational understanding of how humans often make proactive choices based on past experiences to avoid potential future discomforts. This proactive behavior adjustment plays a significant role in shaping our daily decisions and habits.

Escape and active avoidance learning are integral to understanding human behavior. They offer insights into how we navigate our environment, respond to threats, and proactively shape our actions to avoid potential negative outcomes.

In operant conditioning, punishment is described as changing a stimulus to decrease the likelihood of a behavior. Like reinforcement, there are two types of punishment: positive and negative.

Positive punishment is not a positive experience - it discourages the subject from repeating their behaviors by adding a stimulus.

In The Big Bang Theory, Sheldon and the gang try to devise a plan to avoid getting off-topic. They decide to introduce a positive punishment to discourage that behavior.

The characters decide to put pieces of duct tape on their arms. When one of them gets off-topic, another person in the group would rip the duct tape off that person’s arm as a form of operant conditioning. Adding that painful feeling makes their scheme a form of positive punishment.

Negative punishment takes something away from the subject to help discourage behavior. If your parents ever took away your access to video games or toys because you were behaving badly, they were using negative punishment to discourage you from bad behavior.

Measuring Response and Extinction Rates

Getting spanked for bad behavior once will not stop you from trying to get away with bad behavior. Feeling cold outside and warmer once you put on a coat will not teach you to put on a coat every time you go outside.

Researchers use two measurements to determine the effectiveness of different operant conditioning schedules: response rate and extinction rate.

The Response Rate is how often the subject performs the behavior to receive the reinforcement.

The Extinction Rate is quite different. If the subject doesn’t trust that they will get a reinforcement for their behavior or does not make the connection between the behavior and the consequence, they are likely to quit performing the behavior. The extinction rate is how quickly that behavior ends once reinforcements are no longer given.

Schedules of Reinforcement

How fast does operant conditioning happen? Can you manipulate response and extinction rates? The answer varies based on when and why you receive your reinforcement.

Skinner understood this. Throughout his research, he observed that the timing and frequency of reinforcement or punishment greatly impacted how quickly the subject learned to perform or refrain from a behavior. These factors also make an impact on response rate.

The different times and frequencies in which reinforcement is delivered can be identified by one of many schedules of reinforcement. Let’s look at those different schedules and how effective they are.

Continuous reinforcement

If you think about the simplest form of operant conditioning, you are probably thinking of continuous reinforcement. When the subject performs a behavior, they earn a reinforcement. This occurs every single time.

While the response rate is fairly high initially, extinction occurs when continuous reinforcement stops. If you earn dessert every time you clean your room, you will clean your room when you want dessert. But if you clean your room and don’t earn dessert one day, you will lose trust in the reinforcement, and the behavior will likely stop.

The next four reinforcement schedules are called partial reinforcement. Reinforcements are not delivered every single time a behavior is performed. Instead, reinforcements are distributed based on the number of behaviors performed or the amount of time passed.

Fixed ratio reinforcement

“Ratio” refers to the number of responses. “Fixed” refers to a consistent number. Put them together, and you get a schedule of reinforcement with a consistent number of responses. Rewards programs often use fixed ratio reinforcement schedules to encourage customers to return. For every ten smoothies, you get one free.

Every time you spend $100, you get $20 off on your next purchase. The free smoothie and reduced purchases are both reinforcements distributed after a consistent number of behaviors. It could take a subject two years or two weeks to reach that tenth smoothie - either way, the reinforcement is distributed after that tenth purchase.

The rate of response becomes more rapid as subjects endure fixed ratio reinforcement. Think about people in sales who work on commission. They know they will get a $1,000 paycheck for every five items they sell - you can bet that they are pushing hard to sell those five items and earn that reinforcement faster.

Fixed interval reinforcement

Whereas “ratio” refers to the number of responses, “interval” refers to the timing of the response. Subjects receive reinforcement after a certain amount of time has passed. You experience fixed interval reinforcement when you receive a paycheck on the 15th and 30th of every month, regardless of how often you perform a behavior.

The response rate is typically slower in situations with fixed interval reinforcement. Subjects know they will receive a reward no matter how often they perform the behavior. People in jobs with steady and consistent paychecks are often less likely to push hard and sell more products because they know they will get the same paycheck no matter how many items they sell. Other factors, like bonuses or verbal reprimands, may impact their motivation, but those extra factors don’t exist in pure fixed interval reinforcement.

Variable ratio reinforcement

When discussing reinforcement schedules, “variable” means that the requirement for earning a reinforcement (the number of responses or the amount of time) changes after each reinforcement is given.

Let’s go back to the example of the rewards card. On a variable ratio reinforcement schedule, the subject would receive their first free smoothie after buying ten smoothies. Once they get that first free smoothie, they only have to buy seven for another free smoothie. After that reinforcement is distributed, the subject has to buy 15 smoothies to get a free smoothie. The ratio of reinforcement is variable.

This type of schedule isn’t always used because it can be confusing - in many cases, the subject does not know how many smoothies they must purchase before getting their free one.

However, response rates are high for this type of schedule. The reinforcement is dependent on the subject’s behavior. They know they are one step closer to their reward by performing one more behavior. If they don’t get the reinforcement, they can perform one more behavior and again become one step closer to getting the reinforcement.

Think of slot machines. You never know how often you must pull the lever before winning the jackpot. But you know you are one step closer to winning with every pull. At some point, if you just keep pulling, you will win the jackpot and receive a big reinforcement.

Variable interval reinforcement

The final reinforcement schedule identified by Skinner was that of variable interval reinforcement. By now, you can probably guess what this means. Variable interval reinforcement occurs when reinforcements are distributed after a certain amount of time has passed, but this amount varies after each reinforcement is distributed.

In this example, let’s say you work at a retail store. At any given time, secret shoppers enter the store. If you behave correctly and sell the right items to the secret shopper, the higher-ups give you a bonus.

This could happen anytime as long as you are performing the behavior. This schedule keeps people on their toes, encouraging a high response rate and low extinction rate.
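The two interval schedules can be sketched in Python as a timer that decides when a reward becomes available. The sketch assumes the usual laboratory convention that the reward goes to the first response made after the interval has elapsed. The class and function names, the explicit `now` timestamps, and the uniform draw for variable intervals are assumptions made for illustration.

```python
import random


class IntervalSchedule:
    """Reinforce the first response made after the current interval elapses."""

    def __init__(self, next_interval):
        self._next_interval = next_interval      # callable returning a wait
        self._available_at = next_interval()     # first reward time

    def respond(self, now):
        """Return True if a response at time `now` earns the reinforcement."""
        if now >= self._available_at:
            self._available_at = now + self._next_interval()
            return True
        return False


def fixed_interval(wait):
    """Same wait every time, like a paycheck every 15 days."""
    return IntervalSchedule(lambda: wait)


def variable_interval(mean_wait, rng):
    """Wait changes after each reward, averaging mean_wait."""
    return IntervalSchedule(lambda: rng.uniform(0, 2 * mean_wait))


payday = fixed_interval(15)
print([payday.respond(day) for day in (1, 10, 15, 16, 29, 30)])
# [False, False, True, False, False, True]

secret_shopper = variable_interval(10, random.Random(1))
bonuses = sum(secret_shopper.respond(hour) for hour in range(1000))
print(bonuses, "bonuses over 1000 hours of steady work")
```

With the fixed schedule, responses on days 1, 10, 16, and 29 earn nothing, which matches the slower response rate described above. With the variable schedule, any given response might be the one that pays off, so steady responding is the only reliable strategy.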

FAQs About Operant Conditioning

Is Operant Conditioning Trial and Error?

Not exactly, although trial and error helped psychologists recognize operant conditioning. Through trial and error, it was discovered that reinforcements and rewards helped behaviors stick. These reinforcements (praise, treats, etc.) are the key to behaviors being performed and even repeated.

Is Operant Conditioning Behaviorism?

Behaviorism is an approach to psychology; think of operant conditioning as a theory under the umbrella of behaviorism. B.F. Skinner is considered one of the most important Behaviorists in the history of psychology. For decades, theories like operant conditioning and classical conditioning have helped shape how people approach behavior.

Differences Between Operant Conditioning vs. Classical Conditioning

Classical conditioning ties existing behaviors (like salivating) to stimuli (like a bell). “Classical Connects.” Operant conditioning trains an animal or human to perform or refrain from certain behaviors. You don’t train a dog to salivate, but you can train a dog to sit by giving him treats when he sits.

Operant Conditioning vs. Instrumental Conditioning

Operant conditioning and instrumental conditioning refer to the same process. You are more likely to hear the term "operant conditioning" in psychology and "instrumental conditioning" in economics! However, they differ from another type of conditioning: classical conditioning.

Can Operant Conditioning Be Used in the Classroom?

Yes! Intentionally rewarding students for their behavior is a form of operant conditioning. If students receive praise every time they get an A, they are likelier to strive for an A on their tests and quizzes.

Everyday Examples of Operant Conditioning

You can probably think of ways you have used operant conditioning on yourself, your child, or your pets! Reddit users see operant conditioning in video games and pet training, ...

Post from iurichibaBR in r/FFBE (Final Fantasy Brave Exvius)

When you think about FFBE, what's the first thing that comes to mind? Most of you would probably answer CRYSTALS, PULLING, RAINBOWS, EVE! That's a clear example of Operant Conditioning. You wanna play the game every day and get that daily summons because you know you may get something awesome! And that's also why the Rainbow rates are low -- if you won them too frequently, it would lose its effect.

A cute example of operant conditioning from Narwahl_in_spaze in r/ABA (Applied Behavior Analysis)

Post from barbiegoingbad in r/Diabla

Now that Mary knows the basketball player is in the game for fame, she uses this to her advantage. Every time he does something desirable, she uses this as a reinforcement for him to continue and upgrade this behavior. After their first date went well, they went to an event together. She knows he wants adulation and to feel important, so she puts the spotlight on him and makes him look good in front of others whenever he goes out of his way to provide for her. This subconsciously makes him feel good, so he continues to provide her with what she wants and needs (in her case, gifts, money, and affection.)

Using Operant Conditioning On Yourself

We are used to forms of operant conditioning set up by the natural world or authority figures. But you can also use operant conditioning on yourself or with an accountability partner.

Here’s how you can do it yourself. You set up a fixed ratio reinforcement schedule: for every 10 note cards you write or memorize, you give yourself an hour of video games. You can set up a fixed interval reinforcement schedule: after every week of finals, you take a vacation.

Accountabilibuddies are best for setting up variable ratio and variable interval reinforcement schedules. That way, you don’t know when the reinforcement is coming. Tell your buddy to give you your video game controller back after you write a random number of note cards. Or, ask them to walk into your room at random intervals. If you’re studying, they hand you a beer. If you’re not, no reinforcement.



operant conditioning


operant conditioning, in psychology and the study of human and animal behaviour, a mechanism of learning through which humans and animals come to perform or to avoid performing certain behaviours in response to the presence or absence of certain environmental stimuli. The behaviours are voluntary (that is, the human or animal subjects decide whether to perform them) and reversible (that is, once a stimulus that results in a given behaviour is removed, the behaviour may disappear). Operant conditioning thus demonstrates that organisms may be guided by consequences, whether positive or negative, in the behaviours they produce.

Operant conditioning differs from classical conditioning, in which subjects produce involuntary and reflexive responses related to a biological stimulus and an associated neutral stimulus. For example, in experiments based on the work of the Russian physiologist Ivan Pavlov (1849–1936), dogs can be classically conditioned to salivate in response to a bell. Food is presented to a dog at the sounding of a bell, the dog salivates involuntarily in response to the food, and over time the animal comes to associate food with the bell ringing. Eventually, the dog salivates involuntarily in response to the ringing bell when food is not present.

Operant conditioning, in contrast, involves learning to do something to obtain or avoid a given result. For example, through operant conditioning a dog can be taught to offer a paw to receive a food treat. The main distinction between the two conditioning methods is thus the kind of reaction that results. Classical conditioning involves involuntary reactions to a stimulus , whereas operant conditioning involves a change in behaviour to either gain a reward or avoid punishment.

The study of operant conditioning began with the work of the American psychologist Edward L. Thorndike (1874–1949). In 1905 Thorndike formulated the law of effect , which states that, given a certain stimulus, animals repeat behavioral responses with positive (desired) results while avoiding behaviours with negative (unwanted) results.

The American psychologist B.F. Skinner (1904–90) built on Thorndike’s law of effect and formalized the process of operant conditioning, which he understood to be the explanatory basis of human behaviour ( see behaviourism ). In the 1930s he invented the so-called Skinner box, a cage with a closely controlled environment that included no stimuli other than those under study. He placed animals such as rats or pigeons in the box and provided stimuli and rewards to elicit certain behaviours such as pressing a bar or pecking at a light.

Operant conditioning is dependent upon behaviour enhancers and behaviour suppressors. Behaviour enhancers encourage a desired action, whereas behaviour suppressors discourage an undesired action. Both behaviour enhancers and behaviour suppressors can be either positive or negative. In this context , the terms positive and negative do not represent value judgments; they instead refer to stimuli that are added or present (positive) or removed or absent (negative). Thus, an enhancer may be the addition of a desired consequence or the removal of an undesired consequence, and a suppressor may be the addition of an undesired consequence or the removal of a desired consequence.

The notions of positive and negative enhancement or suppression inform four possible strategies for accomplishing operant conditioning. Positive reinforcement (enhancement) occurs when the subject receives a reward for a desired behaviour. An example is when a dog gets a treat for doing a trick. Negative reinforcement is the absence or removal of an annoying or harmful stimulus when a desired action is performed. An example is a loud alarm that stops only when the sleeper gets out of bed in the morning. Positive punishment (suppression) happens when a subject performs an undesired behaviour and receives a negative stimulus. Thus, students who talk too much in class may be required to sit next to the teacher’s desk. Negative punishment occurs when a subject performs an undesired behaviour and a positive stimulus is removed. Thus, teenagers may be punished for bad behaviour by removal of their driving privileges.

Extinction takes place when a behaviour is no longer rewarded, and its occurrences gradually decline in number until it is no longer performed. The subject may initially repeat the behaviour with greater frequency in an attempt to receive a reward, then perform it less frequently, and then eventually stop. For example, people who tell unwanted, off-colour jokes are more likely to stop their behaviour if they consistently receive no attention, positive or negative, after telling such a joke.

Another component of operant conditioning is the reinforcement schedule, of which there are two kinds. Interval schedules reward behaviour after a given amount of time has passed since the previous instance of the behaviour. Ratio schedules require the organism to complete a certain number of repetitions of the behaviour before receiving the reward.

Besides the study of human and animal motivations and behaviours, operant conditioning has many applications. It underlies techniques used in animal training, pedagogy, parenting, and psychotherapy.


Operant Conditioning

OpenStaxCollege


Learning Objectives

By the end of this section, you will be able to:

- Define operant conditioning

- Explain the difference between reinforcement and punishment

- Distinguish between reinforcement schedules

The previous section of this chapter focused on the type of associative learning known as classical conditioning. Remember that in classical conditioning, something in the environment triggers a reflex automatically, and researchers train the organism to react to a different stimulus. Now we turn to the second type of associative learning, operant conditioning. In operant conditioning, organisms learn to associate a behavior and its consequence ([link]). A pleasant consequence makes that behavior more likely to be repeated in the future. For example, Spirit, a dolphin at the National Aquarium in Baltimore, does a flip in the air when her trainer blows a whistle. The consequence is that she gets a fish.

| | Classical Conditioning | Operant Conditioning |
|---|---|---|
| Conditioning approach | An unconditioned stimulus (such as food) is paired with a neutral stimulus (such as a bell). The neutral stimulus eventually becomes the conditioned stimulus, which brings about the conditioned response (salivation). | The target behavior is followed by reinforcement or punishment to either strengthen or weaken it, so that the learner is more likely to exhibit the desired behavior in the future. |
| Stimulus timing | The stimulus occurs immediately before the response. | The stimulus (either reinforcement or punishment) occurs soon after the response. |

Psychologist B. F. Skinner saw that classical conditioning is limited to existing behaviors that are reflexively elicited, and it doesn’t account for new behaviors such as riding a bike. He proposed a theory about how such behaviors come about. Skinner believed that behavior is motivated by the consequences we receive for the behavior: the reinforcements and punishments. His idea that learning is the result of consequences is based on the law of effect, which was first proposed by psychologist Edward Thorndike. According to the law of effect, behaviors that are followed by consequences that are satisfying to the organism are more likely to be repeated, and behaviors that are followed by unpleasant consequences are less likely to be repeated (Thorndike, 1911). Essentially, if an organism does something that brings about a desired result, the organism is more likely to do it again. If an organism does something that does not bring about a desired result, the organism is less likely to do it again. An example of the law of effect is in employment. One of the reasons (and often the main reason) we show up for work is that we get paid to do so. If we stop getting paid, we will likely stop showing up, even if we love our job.

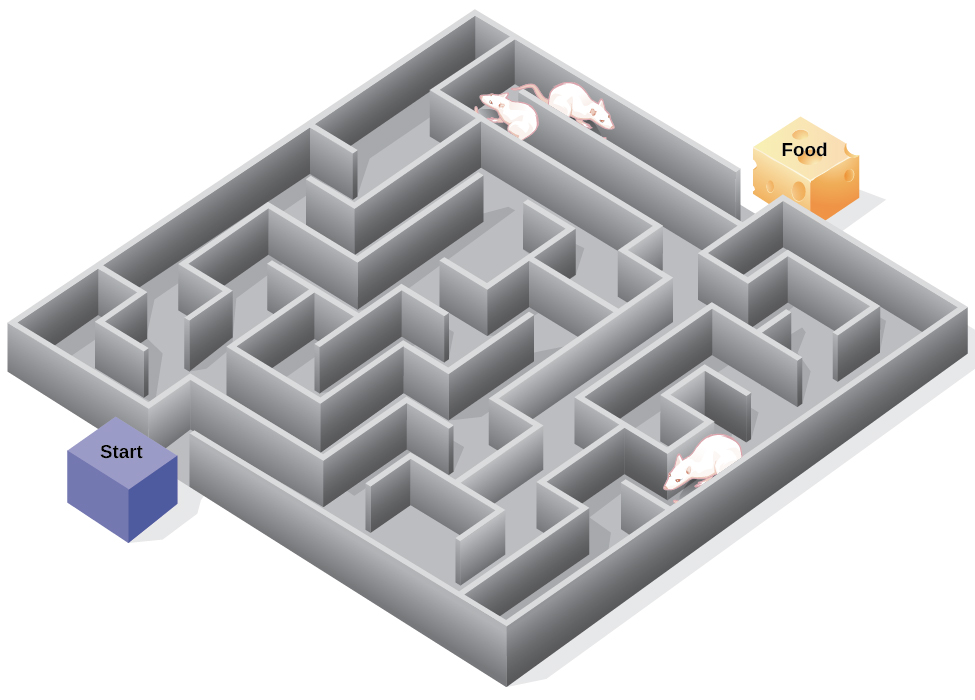

Working with Thorndike’s law of effect as his foundation, Skinner began conducting scientific experiments on animals (mainly rats and pigeons) to determine how organisms learn through operant conditioning (Skinner, 1938). He placed these animals inside an operant conditioning chamber, which has come to be known as a “Skinner box” ([link]). A Skinner box contains a lever (for rats) or disk (for pigeons) that the animal can press or peck for a food reward via the dispenser. Speakers and lights can be associated with certain behaviors. A recorder counts the number of responses made by the animal.


In discussing operant conditioning, we use several everyday words (positive, negative, reinforcement, and punishment) in a specialized manner. In operant conditioning, positive and negative do not mean good and bad. Instead, positive means you are adding something, and negative means you are taking something away. Reinforcement means you are increasing a behavior, and punishment means you are decreasing a behavior. Reinforcement can be positive or negative, and punishment can also be positive or negative. All reinforcers (positive or negative) increase the likelihood of a behavioral response. All punishers (positive or negative) decrease the likelihood of a behavioral response. Now let’s combine these four terms: positive reinforcement, negative reinforcement, positive punishment, and negative punishment ([link]).

| | Reinforcement | Punishment |
|---|---|---|
| Positive | Something is added to increase the likelihood of a behavior. | Something is added to decrease the likelihood of a behavior. |
| Negative | Something is removed to increase the likelihood of a behavior. | Something is removed to decrease the likelihood of a behavior. |
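
The four combinations can be read as a two-question lookup: was a stimulus added or removed, and did the behavior become more or less likely? The following is a minimal Python sketch of that lookup. The names `Change`, `Effect`, and `classify` are illustrative assumptions, not standard terminology from the literature.

```python
from enum import Enum


class Change(Enum):
    ADDED = "positive"    # a stimulus is added
    REMOVED = "negative"  # a stimulus is taken away


class Effect(Enum):
    INCREASES = "reinforcement"  # the behavior becomes more likely
    DECREASES = "punishment"     # the behavior becomes less likely


def classify(change: Change, effect: Effect) -> str:
    """Name the operant procedure for one combination of the four terms."""
    return f"{change.value} {effect.value}"


# Everyday cases of the kind discussed in this chapter (labels are illustrative).
examples = {
    "treat given after a trick": (Change.ADDED, Effect.INCREASES),
    "seatbelt beeping stops after buckling": (Change.REMOVED, Effect.INCREASES),
    "scolding for texting in class": (Change.ADDED, Effect.DECREASES),
    "favorite toy taken away": (Change.REMOVED, Effect.DECREASES),
}

for description, (change, effect) in examples.items():
    print(f"{description}: {classify(change, effect)}")
```

Keeping the two questions separate in the code mirrors the point made above: "positive" and "negative" describe only what happens to the stimulus, never whether the outcome is good or bad.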

REINFORCEMENT

The most effective way to teach a person or animal a new behavior is with positive reinforcement. In positive reinforcement, a desirable stimulus is added to increase a behavior.

For example, you tell your five-year-old son, Jerome, that if he cleans his room, he will get a toy. Jerome quickly cleans his room because he wants a new art set. Let’s pause for a moment. Some people might say, “Why should I reward my child for doing what is expected?” But in fact we are constantly and consistently rewarded in our lives. Our paychecks are rewards, as are high grades and acceptance into our preferred school. Being praised for doing a good job and for passing a driver’s test is also a reward.

Positive reinforcement as a learning tool is extremely effective. It has been found that one of the most effective ways to increase achievement in school districts with below-average reading scores was to pay the children to read. Specifically, second-grade students in Dallas were paid $2 each time they read a book and passed a short quiz about the book. The result was a significant increase in reading comprehension (Fryer, 2010). What do you think about this program? If Skinner were alive today, he would probably think this was a great idea. He was a strong proponent of using operant conditioning principles to influence students’ behavior at school. In fact, in addition to the Skinner box, he also invented what he called a teaching machine that was designed to reward small steps in learning (Skinner, 1961), an early forerunner of computer-assisted learning. His teaching machine tested students’ knowledge as they worked through various school subjects. If students answered questions correctly, they received immediate positive reinforcement and could continue; if they answered incorrectly, they did not receive any reinforcement. The idea was that students would spend additional time studying the material to increase their chance of being reinforced the next time (Skinner, 1961).

In negative reinforcement, an undesirable stimulus is removed to increase a behavior. For example, car manufacturers use the principles of negative reinforcement in their seatbelt systems, which go “beep, beep, beep” until you fasten your seatbelt. The annoying sound stops when you exhibit the desired behavior, increasing the likelihood that you will buckle up in the future. Negative reinforcement is also used frequently in horse training. Riders apply pressure (by pulling the reins or squeezing their legs) and then remove the pressure when the horse performs the desired behavior, such as turning or speeding up. The pressure is the negative stimulus that the horse wants to remove.

Many people confuse negative reinforcement with punishment in operant conditioning, but they are two very different mechanisms. Remember that reinforcement, even when it is negative, always increases a behavior. In contrast, punishment always decreases a behavior. In positive punishment, you add an undesirable stimulus to decrease a behavior. An example of positive punishment is scolding a student to get the student to stop texting in class. In this case, a stimulus (the reprimand) is added in order to decrease the behavior (texting in class). In negative punishment, you remove a pleasant stimulus to decrease a behavior. For example, when a child misbehaves, a parent can take away a favorite toy. In this case, a stimulus (the toy) is removed in order to decrease the behavior.

Punishment, especially when it is immediate, is one way to decrease undesirable behavior. For example, imagine your four-year-old son, Brandon, hit his younger brother. You have Brandon write 100 times “I will not hit my brother” (positive punishment). Chances are he won’t repeat this behavior. While strategies like this are common today, in the past children were often subject to physical punishment, such as spanking. It’s important to be aware of some of the drawbacks in using physical punishment on children. First, punishment may teach fear. Brandon may become fearful of repeating the behavior, but he also may become fearful of the person who delivered the punishment: you, his parent. Similarly, children who are punished by teachers may come to fear the teacher and try to avoid school (Gershoff et al., 2010). Consequently, most schools in the United States have banned corporal punishment. Second, punishment may cause children to become more aggressive and prone to antisocial behavior and delinquency (Gershoff, 2002). They see their parents resort to spanking when they become angry and frustrated, so, in turn, they may act out this same behavior when they become angry and frustrated. For example, because you spank Brenda when you are angry with her for her misbehavior, she might start hitting her friends when they won’t share their toys.

While positive punishment can be effective in some cases, Skinner suggested that the use of punishment should be weighed against the possible negative effects. Today’s psychologists and parenting experts favor reinforcement over punishment—they recommend that you catch your child doing something good and reward her for it.

In his operant conditioning experiments, Skinner often used an approach called shaping. Instead of rewarding only the target behavior, in shaping, we reward successive approximations of a target behavior. Why is shaping needed? Remember that in order for reinforcement to work, the organism must first display the behavior. Shaping is needed because it is extremely unlikely that an organism will display anything but the simplest of behaviors spontaneously. In shaping, behaviors are broken down into many small, achievable steps, and each successive approximation is reinforced in turn until the organism performs the target behavior.

Shaping is often used in teaching a complex behavior or chain of behaviors. Skinner used shaping to teach pigeons not only such relatively simple behaviors as pecking a disk in a Skinner box, but also many unusual and entertaining behaviors, such as turning in circles, walking in figure eights, and even playing ping pong; the technique is commonly used by animal trainers today. An important part of shaping is stimulus discrimination. Recall Pavlov’s dogs—he trained them to respond to the tone of a bell, and not to similar tones or sounds. This discrimination is also important in operant conditioning and in shaping behavior.


It’s easy to see how shaping is effective in teaching behaviors to animals, but how does shaping work with humans? Let’s consider parents whose goal is to have their child learn to clean his room. They use shaping to help him master steps toward the goal. Instead of expecting him to perform the entire task at once, they set up these steps and reinforce each step. First, he cleans up one toy. Second, he cleans up five toys. Third, he chooses whether to pick up ten toys or put his books and clothes away. Fourth, he cleans up everything except two toys. Finally, he cleans his entire room.
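
The room-cleaning sequence can be sketched as a moving criterion: only the current step earns a reward, and once it is met the criterion shifts to the next, closer approximation. The Python below is a simplified illustration under two assumptions that the text does not state: the criterion advances after a single success, and an earlier step stops being rewarded once the child has moved past it. The names `ROOM_STEPS` and `shape` are hypothetical.

```python
ROOM_STEPS = [
    "one toy",
    "five toys",
    "ten toys or books and clothes",
    "everything except two toys",
    "entire room",
]


def shape(steps, observed):
    """Return (behavior, reinforced) pairs for a sequence of observed behaviors.

    Only the current criterion is reinforced. After it is met, the criterion
    moves to the next, closer approximation of the target behavior.
    """
    current = 0
    log = []
    for behavior in observed:
        reinforced = behavior == steps[current]
        log.append((behavior, reinforced))
        # Advance until the final step, which stays the criterion from then on.
        if reinforced and current < len(steps) - 1:
            current += 1
    return log


for behavior, reinforced in shape(ROOM_STEPS, ["one toy", "one toy", "five toys"]):
    print(f"{behavior}: {'reinforce' if reinforced else 'no reward'}")
```

In practice a parent or trainer would usually wait until a step is performed reliably before raising the criterion; the single-success rule here only keeps the sketch short.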

PRIMARY AND SECONDARY REINFORCERS

Rewards such as stickers, praise, money, toys, and more can be used to reinforce learning. Let’s go back to Skinner’s rats again. How did the rats learn to press the lever in the Skinner box? They were rewarded with food each time they pressed the lever. For animals, food would be an obvious reinforcer.

What would be a good reinforcer for humans? For your son Jerome, it was the promise of a toy if he cleaned his room. How about Joaquin, the soccer player? If you gave Joaquin a piece of candy every time he made a goal, you would be using a primary reinforcer. Primary reinforcers are reinforcers that have innate reinforcing qualities. These kinds of reinforcers are not learned. Water, food, sleep, shelter, sex, and touch, among others, are primary reinforcers. Pleasure is also a primary reinforcer. Organisms do not lose their drive for these things. For most people, jumping in a cool lake on a very hot day would be reinforcing and the cool lake would be innately reinforcing: the water would cool the person off (a physical need), as well as provide pleasure.

A secondary reinforcer has no inherent value and only has reinforcing qualities when linked with a primary reinforcer. Praise, linked to affection, is one example of a secondary reinforcer, as when you called out “Great shot!” every time Joaquin made a goal. Another example, money, is only worth something when you can use it to buy other things—either things that satisfy basic needs (food, water, shelter—all primary reinforcers) or other secondary reinforcers. If you were on a remote island in the middle of the Pacific Ocean and you had stacks of money, the money would not be useful if you could not spend it. What about the stickers on the behavior chart? They also are secondary reinforcers.

Sometimes, instead of stickers on a sticker chart, a token is used. Tokens, which are also secondary reinforcers, can then be traded in for rewards and prizes. Entire behavior management systems, known as token economies, are built around the use of these kinds of token reinforcers. Token economies have been found to be very effective at modifying behavior in a variety of settings such as schools, prisons, and mental hospitals. For example, a study by Cangi and Daly (2013) found that use of a token economy increased appropriate social behaviors and reduced inappropriate behaviors in a group of autistic school children. Autistic children tend to exhibit disruptive behaviors such as pinching and hitting. When the children in the study exhibited appropriate behavior (not hitting or pinching), they received a “quiet hands” token. When they hit or pinched, they lost a token. The children could then exchange specified amounts of tokens for minutes of playtime.
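
The bookkeeping behind a token economy like the one described above is simple enough to sketch in a few lines of Python. This is an illustrative model only: the class name `TokenEconomy`, its method names, and the exchange rate of two tokens per minute are assumptions, since the text says only that "specified amounts" of tokens were exchanged for playtime.

```python
class TokenEconomy:
    """Track tokens earned and lost, and exchange them for playtime."""

    def __init__(self, tokens_per_minute: int):
        # Hypothetical exchange rate: tokens needed for one minute of playtime.
        self.tokens_per_minute = tokens_per_minute
        self.balance = 0

    def appropriate_behavior(self) -> None:
        """Award one token (a secondary reinforcer)."""
        self.balance += 1

    def inappropriate_behavior(self) -> None:
        """Remove one token; the balance never drops below zero."""
        self.balance = max(0, self.balance - 1)

    def exchange(self) -> int:
        """Trade as many whole minutes of playtime as the balance allows."""
        minutes = self.balance // self.tokens_per_minute
        self.balance -= minutes * self.tokens_per_minute
        return minutes


chart = TokenEconomy(tokens_per_minute=2)
for _ in range(6):
    chart.appropriate_behavior()
chart.inappropriate_behavior()
print(chart.exchange(), "minutes of playtime;", chart.balance, "token left over")
```

Awarding a token is positive reinforcement and removing one is negative punishment, so a single token count carries both procedures.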

Parents and teachers often use behavior modification to change a child’s behavior. Behavior modification uses the principles of operant conditioning to accomplish behavior change so that undesirable behaviors are switched for more socially acceptable ones. Some teachers and parents create a sticker chart, in which several behaviors are listed ([link]). Sticker charts are a form of token economies, as described in the text. Each time children perform the behavior, they get a sticker, and after a certain number of stickers, they get a prize, or reinforcer. The goal is to increase acceptable behaviors and decrease misbehavior. Remember, it is best to reinforce desired behaviors, rather than to use punishment. In the classroom, the teacher can reinforce a wide range of behaviors, from students raising their hands, to walking quietly in the hall, to turning in their homework. At home, parents might create a behavior chart that rewards children for things such as putting away toys, brushing their teeth, and helping with dinner. In order for behavior modification to be effective, the reinforcement needs to be connected with the behavior; the reinforcement must matter to the child and be done consistently.

Time-out is another popular technique used in behavior modification with children. It operates on the principle of negative punishment. When a child demonstrates an undesirable behavior, she is removed from the desirable activity at hand ([link]). For example, say that Sophia and her brother Mario are playing with building blocks. Sophia throws some blocks at her brother, so you give her a warning that she will go to time-out if she does it again. A few minutes later, she throws more blocks at Mario. You remove Sophia from the room for a few minutes. When she comes back, she doesn’t throw blocks.

There are several important points that you should know if you plan to implement time-out as a behavior modification technique. First, make sure the child is being removed from a desirable activity and placed in a less desirable location. If the activity is something undesirable for the child, this technique will backfire because it is more enjoyable for the child to be removed from the activity. Second, the length of the time-out is important. The general rule of thumb is one minute for each year of the child’s age. Sophia is five; therefore, she sits in a time-out for five minutes. Setting a timer helps children know how long they have to sit in time-out. Finally, as a caregiver, keep several guidelines in mind over the course of a time-out: remain calm when directing your child to time-out; ignore your child during time-out (because caregiver attention may reinforce misbehavior); and give the child a hug or a kind word when time-out is over.

REINFORCEMENT SCHEDULES

Remember, the best way to teach a person or animal a behavior is to use positive reinforcement. For example, Skinner used positive reinforcement to teach rats to press a lever in a Skinner box. At first, the rat might randomly hit the lever while exploring the box, and out would come a pellet of food. After eating the pellet, what do you think the hungry rat did next? It hit the lever again, and received another pellet of food. Each time the rat hit the lever, a pellet of food came out. When an organism receives a reinforcer each time it displays a behavior, it is called continuous reinforcement. This reinforcement schedule is the quickest way to teach someone a behavior, and it is especially effective in training a new behavior. Let’s look back at the dog that was learning to sit earlier in the chapter. Now, each time he sits, you give him a treat. Timing is important here: you will be most successful if you present the reinforcer immediately after he sits, so that he can make an association between the target behavior (sitting) and the consequence (getting a treat).


Once a behavior is trained, researchers and trainers often turn to another type of reinforcement schedule: partial reinforcement. In partial reinforcement, also referred to as intermittent reinforcement, the person or animal does not get reinforced every time they perform the desired behavior. There are several different types of partial reinforcement schedules ([link]). These schedules are described as either fixed or variable, and as either interval or ratio. Fixed refers to the number of responses between reinforcements, or the amount of time between reinforcements, which is set and unchanging. Variable refers to the number of responses or amount of time between reinforcements, which varies or changes. Interval means the schedule is based on the time between reinforcements, and ratio means the schedule is based on the number of responses between reinforcements.

| Reinforcement Schedule | Description | Result | Example |
|---|---|---|---|
| Fixed interval | Reinforcement is delivered at predictable time intervals (e.g., after 5, 10, 15, and 20 minutes). | Moderate response rate with significant pauses after reinforcement | Hospital patient uses patient-controlled, doctor-timed pain relief |
| Variable interval | Reinforcement is delivered at unpredictable time intervals (e.g., after 5, 7, 10, and 20 minutes). | Moderate yet steady response rate | Checking Facebook |
| Fixed ratio | Reinforcement is delivered after a predictable number of responses (e.g., after 2, 4, 6, and 8 responses). | High response rate with pauses after reinforcement | Piecework: factory worker getting paid for every x number of items manufactured |
| Variable ratio | Reinforcement is delivered after an unpredictable number of responses (e.g., after 1, 4, 5, and 9 responses). | High and steady response rate | Gambling |
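
The four schedules differ only in what is counted (responses or elapsed time) and whether the requirement is fixed or redrawn at random after each reinforcement. The Python sketch below makes that explicit. It rests on several assumptions that are not in the text: the class names are hypothetical, an interval schedule is modeled as reinforcing the first response made once the required time has passed since the last reinforcement, and variable requirements are drawn from a uniform distribution around the stated mean.

```python
import random


class RatioSchedule:
    """Reinforce after a required number of responses."""

    def __init__(self, mean_n, variable=False, rng=None):
        self.mean_n = mean_n
        self.variable = variable
        self.rng = rng if rng is not None else random.Random()
        self.count = 0
        self.required = self._next_requirement()

    def _next_requirement(self):
        if self.variable:
            # Uniform on 1..2n-1, so the average requirement is mean_n.
            return self.rng.randint(1, 2 * self.mean_n - 1)
        return self.mean_n

    def respond(self, t):
        """Record a response at time t; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._next_requirement()
            return True
        return False


class IntervalSchedule:
    """Reinforce the first response made after a required time has elapsed."""

    def __init__(self, mean_interval, variable=False, rng=None):
        self.mean_interval = mean_interval
        self.variable = variable
        self.rng = rng if rng is not None else random.Random()
        self.last_reinforced = 0.0  # the clock starts at the session start
        self.required = self._next_requirement()

    def _next_requirement(self):
        if self.variable:
            # Uniform on 0..2m, so the average requirement is mean_interval.
            return self.rng.uniform(0, 2 * self.mean_interval)
        return self.mean_interval

    def respond(self, t):
        """Record a response at time t; return True if it is reinforced."""
        if t - self.last_reinforced >= self.required:
            self.last_reinforced = t
            self.required = self._next_requirement()
            return True
        return False


# Fixed interval: one dose per 60 minutes, button pressed at these minutes.
pain_pump = IntervalSchedule(mean_interval=60)
print([pain_pump.respond(t) for t in (30, 60, 70, 125)])

# Fixed ratio: paid for every third item produced.
piecework = RatioSchedule(mean_n=3)
print([piecework.respond(t) for t in range(7)])
```

Passing `variable=True` together with a seeded `random.Random` gives reproducible variable ratio or variable interval runs, which is convenient when comparing how often responses pay off under each schedule.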

Now let’s combine these four terms. A fixed interval reinforcement schedule is when behavior is rewarded after a set amount of time. For example, June undergoes major surgery in a hospital. During recovery, she is expected to experience pain and will require prescription medications for pain relief. June is given an IV drip with a patient-controlled painkiller. Her doctor sets a limit: one dose per hour. June pushes a button when pain becomes difficult, and she receives a dose of medication. Since the reward (pain relief) only occurs on a fixed interval, there is no point in exhibiting the behavior when it will not be rewarded.

With a variable interval reinforcement schedule, the person or animal gets the reinforcement based on varying amounts of time, which are unpredictable. Say that Manuel is the manager at a fast-food restaurant. Every once in a while someone from the quality control division comes to Manuel’s restaurant. If the restaurant is clean and the service is fast, everyone on that shift earns a $20 bonus. Manuel never knows when the quality control person will show up, so he always tries to keep the restaurant clean and ensures that his employees provide prompt and courteous service. His productivity in providing prompt service and keeping the restaurant clean is steady because he wants his crew to earn the bonus.

With a fixed ratio reinforcement schedule, there are a set number of responses that must occur before the behavior is rewarded. Carla sells glasses at an eyeglass store, and she earns a commission every time she sells a pair of glasses. She always tries to sell people more pairs of glasses, including prescription sunglasses or a backup pair, so she can increase her commission. She does not care if the person really needs the prescription sunglasses; Carla just wants her bonus. The quality of what Carla sells does not matter because her commission is not based on quality; it’s only based on the number of pairs sold. This distinction in the quality of performance can help determine which reinforcement method is most appropriate for a particular situation. Fixed ratios are better suited to optimize the quantity of output, whereas a fixed interval, in which the reward is not quantity based, can lead to a higher quality of output.

In a variable ratio reinforcement schedule, the number of responses needed for a reward varies. This is the most powerful partial reinforcement schedule. An example of the variable ratio reinforcement schedule is gambling. Imagine that Sarah, generally a smart, thrifty woman, visits Las Vegas for the first time. She is not a gambler, but out of curiosity she puts a quarter into the slot machine, and then another, and another. Nothing happens. Two dollars in quarters later, her curiosity is fading, and she is just about to quit. But then, the machine lights up, bells go off, and Sarah gets 50 quarters back. That’s more like it! Sarah gets back to inserting quarters with renewed interest, and a few minutes later she has used up all her gains and is $10 in the hole. Now might be a sensible time to quit. And yet, she keeps putting money into the slot machine because she never knows when the next reinforcement is coming. She keeps thinking that with the next quarter she could win $50, or $100, or even more. Because most types of gambling operate on a variable ratio schedule, people keep trying and hoping that the next time they will win big. This is one of the reasons that gambling is so addictive and so resistant to extinction.
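
The numbers in Sarah's session can be checked with a little arithmetic. The short Python calculation below works in cents and assumes that "$10 in the hole" means her overall net loss at that point, which is one reading of the story rather than something the text spells out. The variable names are illustrative.

```python
QUARTER = 25  # cents

spent_before_win = 200   # "two dollars in quarters"
payout = 50 * QUARTER    # "50 quarters back"
net_position = -1000     # "$10 in the hole", read as overall net loss

# net_position = payout - spent_before_win - spent_after_win
spent_after_win = payout - spent_before_win - net_position
total_spent = spent_before_win + spent_after_win

print("quarters inserted before the win:", spent_before_win // QUARTER)
print("quarters inserted after the win:", spent_after_win // QUARTER)
print("share of her money the machine returned:", round(payout / total_spent, 2))
```

Under that reading, a single payout of 50 quarters kept Sarah playing for roughly ten times as many quarters as she inserted before it, while the machine returned only part of what she put in.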

In operant conditioning, extinction of a reinforced behavior occurs at some point after reinforcement stops, and the speed at which this happens depends on the reinforcement schedule. In a variable ratio schedule, the point of extinction comes very slowly, as described above. But in the other reinforcement schedules, extinction may come quickly. For example, if June presses the button for the pain relief medication before the allotted time her doctor has approved, no medication is administered. She is on a fixed interval reinforcement schedule (dosed hourly), so extinction occurs quickly when reinforcement doesn’t come at the expected time. Among the reinforcement schedules, variable ratio is the most productive and the most resistant to extinction. Fixed interval is the least productive and the easiest to extinguish ([link]).

Skinner (1953) stated, “If the gambling establishment cannot persuade a patron to turn over money with no return, it may achieve the same effect by returning part of the patron’s money on a variable-ratio schedule” (p. 397).

Skinner uses gambling as an example of the power and effectiveness of conditioning behavior based on a variable ratio reinforcement schedule. In fact, Skinner was so confident in his knowledge of gambling addiction that he even claimed he could turn a pigeon into a pathological gambler (“Skinner’s Utopia,” 1971). Beyond the power of variable ratio reinforcement, gambling seems to work on the brain in the same way as some addictive drugs. The Illinois Institute for Addiction Recovery (n.d.) reports evidence suggesting that pathological gambling is an addiction similar to a chemical addiction ([link]). Specifically, gambling may activate the reward centers of the brain, much like cocaine does. Research has shown that some pathological gamblers have lower levels of the neurotransmitter (brain chemical) known as norepinephrine than do normal gamblers (Roy et al., 1988). According to a study conducted by Alec Roy and colleagues, norepinephrine is secreted when a person feels stress, arousal, or thrill; pathological gamblers use gambling to increase their levels of this neurotransmitter. Another researcher, neuroscientist Hans Breiter, has done extensive research on gambling and its effects on the brain. Breiter (as cited in Franzen, 2001) reports that “Monetary reward in a gambling-like experiment produces brain activation very similar to that observed in a cocaine addict receiving an infusion of cocaine” (para. 1). Deficiencies in serotonin (another neurotransmitter) might also contribute to compulsive behavior, including a gambling addiction.

It may be that pathological gamblers’ brains are different from those of other people, and perhaps this difference may somehow have led to their gambling addiction, as these studies seem to suggest. However, it is very difficult to ascertain the cause because it is impossible to conduct a true experiment (it would be unethical to try to turn randomly assigned participants into problem gamblers). Therefore, it may be that causation actually moves in the opposite direction: perhaps the act of gambling somehow changes neurotransmitter levels in some gamblers’ brains. It also is possible that some overlooked factor, or confounding variable, played a role in both the gambling addiction and the differences in brain chemistry.

COGNITION AND LATENT LEARNING

Although strict behaviorists such as Skinner and Watson refused to believe that cognition (such as thoughts and expectations) plays a role in learning, another behaviorist, Edward C. Tolman, had a different opinion. Tolman’s experiments with rats demonstrated that organisms can learn even if they do not receive immediate reinforcement (Tolman & Honzik, 1930; Tolman, Ritchie, & Kalish, 1946). This finding was in conflict with the prevailing idea at the time that reinforcement must be immediate in order for learning to occur, thus suggesting a cognitive aspect to learning.

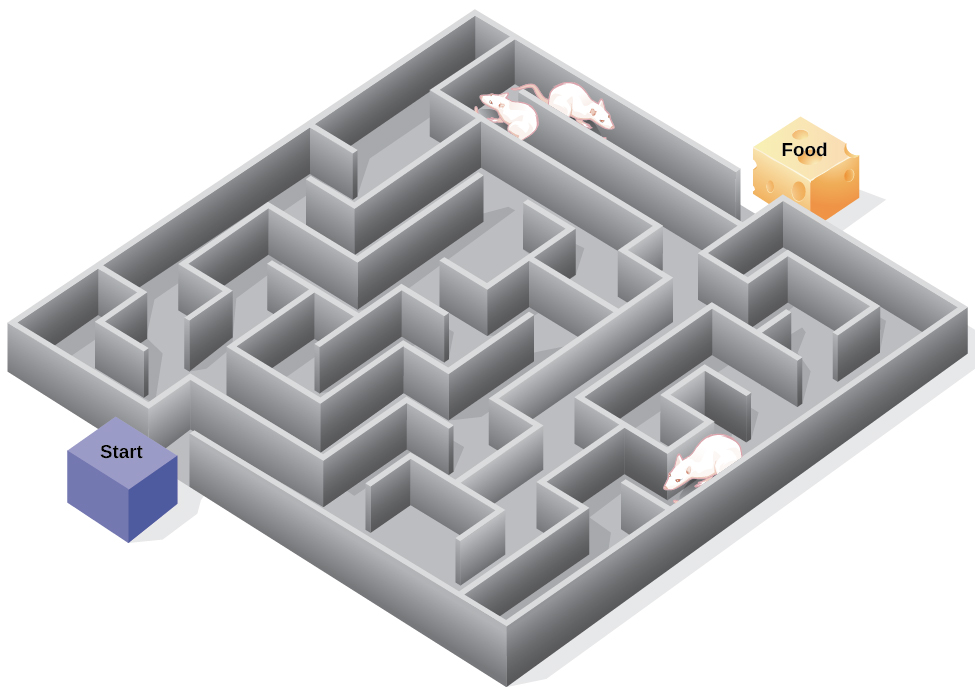

In the experiments, Tolman placed hungry rats in a maze with no reward for finding their way through it. He also studied a comparison group that was rewarded with food at the end of the maze. As the unreinforced rats explored the maze, they developed a cognitive map: a mental picture of the layout of the maze. After 10 sessions in the maze without reinforcement, food was placed in a goal box at the end of the maze. As soon as the rats became aware of the food, they were able to find their way through the maze quickly, just as quickly as the comparison group, which had been rewarded with food all along. This is known as latent learning: learning that occurs but is not observable in behavior until there is a reason to demonstrate it.
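One loose way to picture a cognitive map is as a graph of places and the passages between them. The Python sketch below is only an analogy, not Tolman’s procedure and not a claim about how rats actually represent space: the maze layout, the junction names, and the function `shortest_route` are invented for illustration. The map is stored during unrewarded exploration; once a goal matters, a breadth-first search over the stored map yields a direct route right away.

```python
from collections import deque

# Hypothetical maze: each junction lists the junctions reachable from it.
# This stands in for what was learned while exploring without reward.
cognitive_map = {
    "start": ["A", "B"],
    "A": ["start", "dead_end_1"],
    "B": ["start", "C", "dead_end_2"],
    "C": ["B", "goal_box"],
    "dead_end_1": ["A"],
    "dead_end_2": ["B"],
    "goal_box": ["C"],
}

def shortest_route(maze, origin, target):
    """Breadth-first search: return the shortest list of junctions, or None."""
    queue = deque([[origin]])
    visited = {origin}
    while queue:
        path = queue.popleft()
        here = path[-1]
        if here == target:
            return path
        for nxt in maze[here]:
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # target is not reachable from origin

route = shortest_route(cognitive_map, "start", "goal_box")
print(route)  # ['start', 'B', 'C', 'goal_box']
```

Nothing in the map changes when the food appears; only the use made of it does, which mirrors the idea that the learning was already present but not yet shown in behavior.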

Latent learning also occurs in humans. Children may learn by watching the actions of their parents but only demonstrate it at a later date, when the learned material is needed. For example, suppose that Ravi’s dad drives him to school every day. In this way, Ravi learns the route from his house to his school, but he’s never driven there himself, so he has not had a chance to demonstrate that he’s learned the way. One morning Ravi’s dad has to leave early for a meeting, so he can’t drive Ravi to school. Instead, Ravi follows the same route on his bike that his dad would have taken in the car. This demonstrates latent learning. Ravi had learned the route to school, but had no need to demonstrate this knowledge earlier.

Have you ever gotten lost in a building and couldn’t find your way back out? While that can be frustrating, you’re not alone. At one time or another we’ve all gotten lost in places like a museum, hospital, or university library. Whenever we go someplace new, we build a mental representation—or cognitive map—of the location, as Tolman’s rats built a cognitive map of their maze. However, some buildings are confusing because they include many areas that look alike or have short lines of sight. Because of this, it’s often difficult to predict what’s around a corner or decide whether to turn left or right to get out of a building. Psychologist Laura Carlson (2010) suggests that what we place in our cognitive map can impact our success in navigating through the environment. She suggests that paying attention to specific features upon entering a building, such as a picture on the wall, a fountain, a statue, or an escalator, adds information to our cognitive map that can be used later to help find our way out of the building.

Operant conditioning is based on the work of B. F. Skinner. Operant conditioning is a form of learning in which the motivation for a behavior happens after the behavior is demonstrated. An animal or a human receives a consequence after performing a specific behavior. The consequence is either a reinforcer or a punisher. All reinforcement (positive or negative) increases the likelihood of a behavioral response. All punishment (positive or negative) decreases the likelihood of a behavioral response. Several types of reinforcement schedules are used to reward behavior, depending on either a set or variable number of responses or a set or variable period of time.

Review Questions

________ is when you take away a pleasant stimulus to stop a behavior.

- positive reinforcement

- negative reinforcement

- positive punishment

- negative punishment

Which of the following is not an example of a primary reinforcer?

Rewarding successive approximations toward a target behavior is ________.

Slot machines reward gamblers with money according to which reinforcement schedule?

- fixed ratio

- variable ratio

- fixed interval

- variable interval

Critical Thinking Questions

What is a Skinner box and what is its purpose?

A Skinner box is an operant conditioning chamber used to train animals such as rats and pigeons to perform certain behaviors, like pressing a lever. When the animals perform the desired behavior, they receive a reward: food or water.

What is the difference between negative reinforcement and punishment?

In negative reinforcement you are taking away an undesirable stimulus in order to increase the frequency of a certain behavior (e.g., buckling your seat belt stops the annoying beeping sound in your car and increases the likelihood that you will wear your seat belt). Punishment is designed to reduce a behavior (e.g., you scold your child for running into the street in order to decrease the unsafe behavior).

What is shaping and how would you use shaping to teach a dog to roll over?

Shaping is an operant conditioning method in which you reward closer and closer approximations of the desired behavior. If you want to teach your dog to roll over, you might reward him first when he sits, then when he lies down, and then when he lies down and rolls onto his back. Finally, you would reward him only when he completes the entire sequence: lying down, rolling onto his back, and then continuing to roll over to his other side.
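The shaping procedure in this answer can be sketched as a loop that rewards only behavior meeting the current criterion and then raises the criterion. This is an illustrative toy in Python, not a training protocol: the stage names, the `shape` function, and the rule of advancing after a single success are simplifying assumptions.

```python
# Successive approximations toward "roll over", taken from the answer above.
STAGES = ["sit", "lie down", "roll onto back", "roll over completely"]

def shape(observed_behaviors, stages=STAGES):
    """Walk through a sequence of observed behaviors.

    A behavior is rewarded only if it matches the current criterion;
    after each reward the criterion moves to the next approximation
    (a simplifying assumption: one success is enough to advance).
    Returns a list of (behavior, rewarded) pairs and whether the
    final target behavior was reached.
    """
    level = 0
    log = []
    for behavior in observed_behaviors:
        rewarded = level < len(stages) and behavior == stages[level]
        log.append((behavior, rewarded))
        if rewarded:
            level += 1
    return log, level == len(stages)

session = ["sit", "sit", "lie down", "sit", "roll onto back",
           "roll over completely"]
log, trained = shape(session)
for behavior, rewarded in log:
    print(f"{behavior:22s} {'reward' if rewarded else 'no reward'}")
print("target behavior reached:", trained)
```

Note that the second "sit" earns nothing: once the criterion has moved on to lying down, an earlier approximation is no longer reinforced.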

Personal Application Questions

Explain the difference between negative reinforcement and punishment, and provide several examples of each based on your own experiences.

Think of a behavior that you have that you would like to change. How could you use behavior modification, specifically positive reinforcement, to change your behavior? What is your positive reinforcer?

Operant Conditioning Copyright © 2014 by OpenStaxCollege is licensed under a Creative Commons Attribution 4.0 International License, except where otherwise noted.

Operant Conditioning: What It Is and How It Works

What Is Operant Conditioning?

Operant conditioning, sometimes called instrumental conditioning or Skinnerian conditioning, is a method of learning that uses rewards and punishment to modify behavior. Through operant conditioning, behavior that is rewarded is likely to be repeated, while behavior that is punished is likely to happen less often.

For example, when you are rewarded at work with a performance bonus for exceptional work, you will want to continue performing at a higher level in hopes of receiving another bonus in the future. Because this behavior was followed by a positive outcome, the behavior will likely be repeated.

The Operant Conditioning Theory

Operant conditioning was first described by psychologist B.F. Skinner. His theory was based on two assumptions. First, the cause of human behavior is something in a person’s environment. Second, the consequences of a behavior determine the possibility of it being repeated. Behaviors followed by a pleasant consequence are likely to be repeated and those followed by an unpleasant consequence are less likely to be repeated.

Through his experiments, Skinner identified three types of responses that followed behavior:

- Neutral responses. They are responses from the environment that produce no stimulus other than focusing attention. They neither increase nor decrease the probability of a behavior being repeated.

- Reinforcers. They are responses from the environment that increase the likelihood of a behavior being repeated. They can either be positive or negative.

- Punishers. These are negative operants that decrease the likelihood of a behavior. Punishment weakens behavior.

History of the theory

Though Skinner introduced the theory of operant conditioning, he was influenced by the work of another psychologist, Edward Lee Thorndike.

In 1905, Thorndike proposed a theory of behavior called the “law of effect.” It stated that if you behave in a certain way and you like the result of your behavior, you’re likely to behave that way again. If you don’t like the result of your behavior, you’re less likely to repeat it.

Thorndike put cats in a box to test his theory. If the cat found and pushed a lever, the box would open, and the cat would be rewarded with a piece of fish. The more they repeated this behavior, the more they were rewarded. So, the cats quickly learned to go right to the lever and push it.

The idea: positive results reinforce behaviors, making you more likely to repeat the same behaviors later on.

John B. Watson was another psychologist who influenced Skinner and his theory of operant conditioning. He studied behavior that could be observed and how that behavior could be controlled, as well as the ways that behaviors are learned. In fact, he coined the term “behaviorism,” a field of psychology focused on how things are learned.

When Skinner came along to advance this theory, he created his own box. In went pigeons and rats -- though not at the same time -- who quickly learned that certain behaviors brought them rewards of food.

He described his pigeons and rats as “free operants.” That meant they were free to behave how they wanted in their environment (the box). However, their behaviors were shaped or conditioned by what happened after their previous displays of those behaviors.

Operant Conditioning vs. Classical Conditioning

These two are very different. In operant conditioning, the results of your past behaviors have conditioned you to either repeat or avoid those behaviors. For example, your parents reward you for getting an ‘A’ on a test that requires you to study hard. As a result, you become more likely to study hard in the future in anticipation of more rewards.

Classical conditioning is used to train people or animals to respond automatically to certain triggers. The most famous example is Pavlov’s dogs.

Ivan Pavlov was a Russian physiologist. He observed that dogs salivated when food was put in front of them. That’s natural, or what’s called an unconditioned response.

But then Pavlov noticed that the dogs began to salivate shortly before their food arrived, possibly because the sound of the food cart triggered their anticipation of mealtime. In his experiment, at mealtimes, he sounded a bell shortly before the food arrived. Eventually, the dogs began to salivate when they heard the bell. That was a trained, or conditioned, response to the sound of the bell.

You likely experience classical conditioning every day. How? Advertising. Companies use advertisements in hopes that you will associate something positive with their product, leading you to spend money on it.

Operant Behavior

In operant behavior, the way you choose to behave today is influenced by the consequences of that behavior in the past. Those consequences will either encourage and reinforce that behavior, or they will discourage and punish that behavior.

An example: When you were a kid, did you get sent to your room when you hit your sibling? That consequence, your parents hoped, would discourage you from doing that again.

Reinforcement and punishment in operant conditioning

Reinforcement and punishment are two ways to encourage or discourage behaviors. In the example above, the punishment of being sent to your room ideally will discourage you from behaving in the same way in the future.

But what if you behave in a way that your parents want to encourage, such as sharing toys with a younger sibling? Your parents can reinforce that behavior by rewarding you, perhaps with praise.

Reinforcement and punishment both can be positive or negative. Let’s take a quick look at each.

- Positive reinforcement. To encourage a behavior, something is added. For example, you earn money for going to work.

- Negative reinforcement. To encourage a behavior, something is taken away. For example, you can turn off your alarm if you get out of bed.

- Positive punishment. To discourage a behavior, something is added. For example, you get extra chores when you come home late for dinner.

- Negative punishment. To discourage a behavior, something is taken away. For example, your parents confiscate your favorite toy when you tell a lie.
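The four combinations above come from two independent questions: is something added or taken away, and is the behavior meant to increase or decrease? A small Python sketch makes that two-by-two structure explicit. The function name `classify_consequence` and its string arguments are illustrative assumptions, not terminology from any library.

```python
def classify_consequence(stimulus_change, behavior_effect):
    """Name the operant procedure for a (stimulus change, effect) pair.

    stimulus_change: "added" or "removed"
    behavior_effect: "increase" or "decrease"
    """
    if stimulus_change not in ("added", "removed"):
        raise ValueError("stimulus_change must be 'added' or 'removed'")
    if behavior_effect not in ("increase", "decrease"):
        raise ValueError("behavior_effect must be 'increase' or 'decrease'")
    # "Positive" and "negative" describe adding or removing, not good or bad.
    sign = "positive" if stimulus_change == "added" else "negative"
    kind = "reinforcement" if behavior_effect == "increase" else "punishment"
    return f"{sign} {kind}"

# The four examples from the list above
examples = [
    ("earning money for going to work", "added", "increase"),
    ("alarm stops when you get out of bed", "removed", "increase"),
    ("extra chores for coming home late", "added", "decrease"),
    ("favorite toy confiscated for lying", "removed", "decrease"),
]
for description, change, effect in examples:
    print(f"{description}: {classify_consequence(change, effect)}")
```

Keeping the two questions separate helps avoid the common mistake of reading "negative reinforcement" as a kind of punishment.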

Types of behaviors

B.F. Skinner divided behavior into two different types: respondent and operant.

- Respondent behavior. This is the type of behavior that you can’t control. It’s Skinner’s term for what happened with Pavlov’s dogs -- when they heard a bell, they responded by salivating. It was a reflex, not a choice. People have respondent behaviors, too. If someone puts your favorite food in front of you, you likely will start salivating, just like Pavlov’s dogs.

- Operant behavior. These are voluntary behaviors that you choose to do based on previous consequences. You choose to behave in a certain way to get an expected result. For example, you study hard in anticipation of a reward from your parents. Or if you get punished for talking back to your parents, you are more likely to choose not to do that in the future.

Positive Reinforcement

Positive reinforcement involves providing a pleasant stimulus to increase the likelihood of a behavior happening in the future. For example, if your child does chores without being asked, you can reward them by taking them to a park or giving them a treat.

Skinner used a hungry rat in a Skinner box to show how positive reinforcement works. The box contained a lever on the side, and as the rat moved about the box, it would accidentally knock the lever. Immediately after it did so, a food pellet would drop into a container next to the lever. The consequence of receiving food every time the rat hit the lever ensured that the animal repeated the action again and again.
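The rat’s learning can be caricatured with a simple "strengthen what gets rewarded" rule. The Python sketch below is a toy model under stated assumptions, not Skinner’s own analysis: the action names, the equal starting weights, the increment of 1.0 per reward, and the function `run_skinner_box` are all invented for illustration. Actions are sampled in proportion to their weights, and only a lever press is followed by food.

```python
import random

def run_skinner_box(n_trials=500, seed=1):
    """Simulate a toy rat whose rewarded action becomes more likely.

    Returns the fraction of lever presses in the first 100 trials
    and in the last 100 trials.
    """
    rng = random.Random(seed)  # fixed seed for a repeatable run
    # All actions start equally likely (the weights are hypothetical).
    weights = {"press lever": 1.0, "groom": 1.0,
               "sniff corner": 1.0, "rear up": 1.0}
    history = []
    for _ in range(n_trials):
        actions = list(weights)
        action = rng.choices(actions, weights=[weights[a] for a in actions])[0]
        history.append(action)
        if action == "press lever":   # a food pellet follows the press
            weights[action] += 1.0    # reinforcement strengthens the response

    def press_rate(chunk):
        return sum(a == "press lever" for a in chunk) / len(chunk)

    return press_rate(history[:100]), press_rate(history[-100:])

early, late = run_skinner_box()
print(f"lever-press rate, first 100 trials: {early:.2f}")
print(f"lever-press rate, last 100 trials:  {late:.2f}")
```

Early presses happen by chance, as in the description above; because each one is followed by food, pressing gradually crowds out the other actions.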

Positive reinforcement doesn't have to involve tangible items. Instead, you can positively reinforce your child through:

- Giving a hug or pat on the back

- Giving a thumbs-up

- Offering a special activity, such as playing a game or reading a book together

- Telling another adult how proud you are of your child’s behavior while your child is listening

- Praising them

- Giving a high five