Econometrics: Definition, Models, and Methods

Adam Hayes, Ph.D., CFA, is a financial writer with 15+ years of Wall Street experience as a derivatives trader. Besides his extensive derivatives trading expertise, Adam is an expert in economics and behavioral finance. Adam received his master's in economics from The New School for Social Research and his Ph.D. in sociology from the University of Wisconsin-Madison. He is a CFA charterholder and holds FINRA Series 7, 55 & 63 licenses. He currently researches and teaches economic sociology and the social studies of finance at the Hebrew University in Jerusalem.

Econometrics is the use of statistical and mathematical models to develop theories or test existing hypotheses in economics and to forecast future trends from historical data. It subjects real-world data to statistical trials and then compares the results against the theory being tested.

Depending on whether you are interested in testing an existing theory or in using existing data to develop a new hypothesis, econometrics can be subdivided into two major categories: theoretical and applied. Those who routinely engage in this practice are commonly known as econometricians.

Key Takeaways

- Econometrics is the use of statistical methods to develop theories or test existing hypotheses in economics or finance.

- Econometrics relies on techniques such as regression models and null hypothesis testing.

- Econometrics can also be used to try to forecast future economic or financial trends.

- As with other statistical tools, econometricians should be careful not to infer a causal relationship from statistical correlation.

- Some economists have criticized the field of econometrics for prioritizing statistical models over economic reasoning.

Investopedia / Michela Buttignol

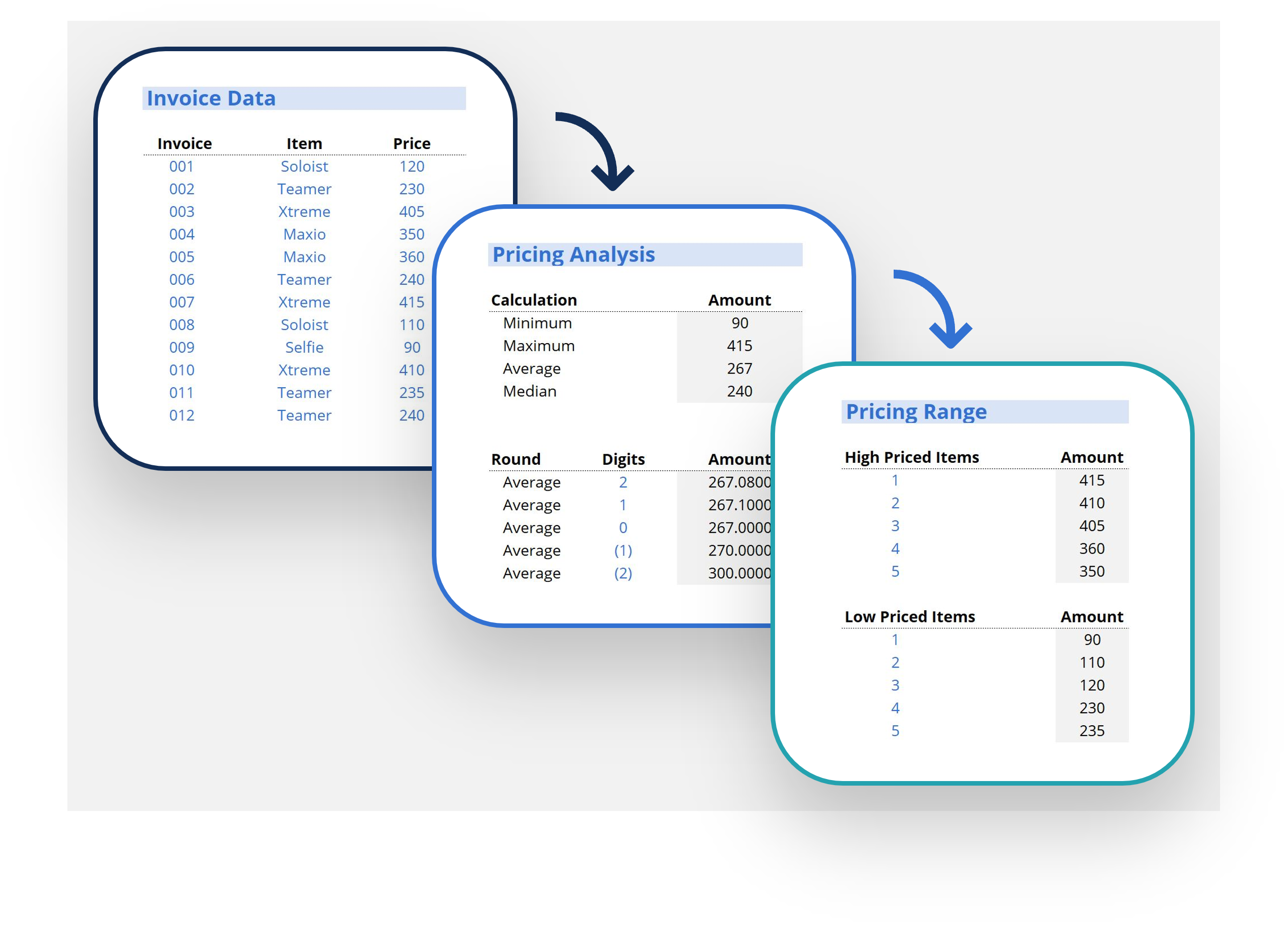

Econometrics analyzes data using statistical methods in order to test or develop economic theory. These methods use statistical inference to quantify and analyze economic theories, drawing on tools such as frequency distributions, probability and probability distributions, correlation analysis, simple and multiple regression analysis, simultaneous equations models, and time series methods.

Econometrics was pioneered by Lawrence Klein, Ragnar Frisch, and Simon Kuznets. All three won the Nobel Prize in economics for their contributions. Today, it is used regularly by academics as well as practitioners such as Wall Street traders and analysts.

An example of the application of econometrics is to study the income effect using observable data. An economist may hypothesize that as a person increases their income, their spending will also increase.

If the data show that such an association is present, a regression analysis can then be conducted to understand the strength of the relationship between income and consumption and whether or not that relationship is statistically significant—that is, it appears to be unlikely that it is due to chance alone.
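The income and consumption example can be sketched in a few lines of plain Python. The figures below are hypothetical (they are not from any survey), and `ols_simple` is an illustrative helper name rather than a library function. The slope is the estimated change in consumption associated with a one-unit change in income.

```python
# Hypothetical data: annual income and consumption, in thousands of dollars.
income = [20, 30, 40, 50, 60, 70, 80]
consumption = [18, 25, 33, 38, 47, 52, 61]

def ols_simple(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of squared deviations of x, and sum of cross-deviations of x and y.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

intercept, slope = ols_simple(income, consumption)
print(f"consumption = {intercept:.2f} + {slope:.3f} * income")
```

A slope between 0 and 1 in this made-up sample would be read as each extra dollar of income being associated with less than a dollar of extra spending. Whether the estimate is statistically significant is a separate question that requires a standard error.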

Methods of Econometrics

The first step to econometric methodology is to obtain and analyze a set of data and define a specific hypothesis that explains the nature and shape of the set. This data may be, for example, the historical prices for a stock index, observations collected from a survey of consumer finances, or unemployment and inflation rates in different countries.

If you are interested in the relationship between the annual price change of the S&P 500 and the unemployment rate, you'd collect both sets of data. Then, you might test the idea that higher unemployment leads to lower stock market prices. In this example, stock market price would be the dependent variable and the unemployment rate is the independent or explanatory variable.

The most commonly assumed relationship is linear, meaning that a one-unit change in the explanatory variable is associated with a constant change in the dependent variable, which may be positive or negative. This relationship could be explored with a simple regression model, which amounts to fitting a best-fit line through the data and then measuring how far each data point lies, on average, from that line.

Note that you can have several explanatory variables in your analysis: for example, changes to GDP and inflation in addition to unemployment in explaining stock market prices. When more than one explanatory variable is used, it is referred to as multiple linear regression. This is the most commonly used tool in econometrics.
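In symbols, the simple and multiple regression models described here are usually written as follows. The notation (y for the dependent variable, x for explanatory variables, β for coefficients, ε for the error term) is the common textbook convention and is an assumption of this sketch, not something the article itself specifies.

```latex
% Simple linear regression: one explanatory variable
y_i = \beta_0 + \beta_1 x_i + \varepsilon_i

% Multiple linear regression: k explanatory variables
y_i = \beta_0 + \beta_1 x_{1i} + \beta_2 x_{2i} + \dots + \beta_k x_{ki} + \varepsilon_i

% Least squares picks the coefficients that minimize the sum of squared residuals
\hat{\beta} = \arg\min_{\beta} \sum_{i=1}^{n}
  \left( y_i - \beta_0 - \beta_1 x_{1i} - \dots - \beta_k x_{ki} \right)^2
```

In the stock market example, y would be the annual price change of the S&P 500 and the x variables would be unemployment, GDP growth, and inflation.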

Some economists, including John Maynard Keynes, have criticized econometricians for their over-reliance on statistical correlations in lieu of economic thinking.

Different Regression Models

There are several different regression models that are optimized depending on the nature of the data being analyzed and the type of question being asked. The most common example is the ordinary least squares (OLS) regression, which can be conducted on several types of cross-sectional or time-series data. If you're interested in a binary (yes-no) outcome—for instance, how likely you are to be fired from a job based on your productivity—you might use a logistic regression or a probit model. Today, econometricians have hundreds of models at their disposal.
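For a binary outcome such as the fired-or-not example, a logistic regression can be sketched without any libraries. The data are invented for illustration, `fit_logit` is a hypothetical helper, and the fitting method (Newton-Raphson on the log-likelihood) is one common choice among several.

```python
import math

# Hypothetical data: productivity score (1-10) and whether the worker
# was fired (1) or kept (0).
productivity = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
fired = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logit(x, y, iterations=25):
    """Fit P(y=1) = sigmoid(b0 + b1*x) by Newton-Raphson."""
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        p = [sigmoid(b0 + b1 * xi) for xi in x]
        # Score: gradient of the log-likelihood.
        g0 = sum(yi - pi for yi, pi in zip(y, p))
        g1 = sum((yi - pi) * xi for xi, yi, pi in zip(x, y, p))
        # Information matrix X'WX with weights w = p(1 - p).
        w = [pi * (1.0 - pi) for pi in p]
        h00 = sum(w)
        h01 = sum(wi * xi for wi, xi in zip(w, x))
        h11 = sum(wi * xi * xi for wi, xi in zip(w, x))
        det = h00 * h11 - h01 * h01
        # Newton step using the explicit inverse of the 2x2 matrix.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

b0, b1 = fit_logit(productivity, fired)
p_low = sigmoid(b0 + b1 * 1)    # estimated firing probability, low productivity
p_high = sigmoid(b0 + b1 * 10)  # estimated firing probability, high productivity
print(f"slope {b1:.3f}, P(fired | x=1) {p_low:.2f}, P(fired | x=10) {p_high:.2f}")
```

Unlike a straight line, the fitted probabilities always stay between 0 and 1, which is the main reason to prefer a logit or probit model for yes-no outcomes.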

Econometrics is now conducted using statistical analysis software packages designed for these purposes, such as Stata, SPSS, or R. These software packages can also easily test for statistical significance to determine the likelihood that correlations might arise by chance. R-squared, t-tests, p-values, and null-hypothesis testing are all methods used by econometricians to evaluate the validity of their model results.
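Two of these diagnostics have compact textbook definitions, given here as a reference sketch. The symbols are conventional notation assumed for illustration: fitted values carry a hat, the sample mean carries a bar, and se denotes a standard error.

```latex
% R-squared: share of the variation in y accounted for by the model
R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}

% t-statistic for the null hypothesis H_0: \beta_j = \beta_{j,0}
t = \frac{\hat{\beta}_j - \beta_{j,0}}{\operatorname{se}(\hat{\beta}_j)}
```

The p-value is then the probability, computed under the null hypothesis, of observing a t-statistic at least as extreme as the one obtained.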

Limitations of Econometrics

Econometrics is sometimes criticized for relying too heavily on the interpretation of raw data without linking it to established economic theory or looking for causal mechanisms. It is crucial that the findings revealed in the data can be adequately explained by a theory, even if that means developing your own theory of the underlying processes.

Regression analysis also does not prove causation: an association between two data sets may be spurious. For example, drowning deaths in swimming pools increase with GDP. Does a growing economy cause people to drown? This is unlikely, but perhaps more people buy pools when the economy is booming. Econometrics is largely concerned with correlation analysis, and it is important to remember that correlation does not equal causation.

What Are Estimators in Econometrics?

An estimator is a statistic that is used to estimate some fact or measurement about a larger population. Estimators are frequently used in situations where it is not practical to measure the entire population. For example, it is not possible to measure the exact unemployment rate at any specific time, but it is possible to estimate it based on a randomly chosen sample of the population.
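A minimal sketch of the unemployment example follows. It assumes a made-up labor force of 100,000 people with a known rate of 5 percent, so the estimate can be compared with the truth. In real surveys the true rate is of course unknown.

```python
import math
import random

random.seed(0)  # fixed seed so the illustration is reproducible

# Hypothetical labor force: 5,000 unemployed (1) out of 100,000 people.
labor_force = [1] * 5_000 + [0] * 95_000
true_rate = sum(labor_force) / len(labor_force)

# Survey a random sample instead of the whole population.
sample = random.sample(labor_force, 2_000)

# The estimator: the sample proportion of unemployed respondents.
estimate = sum(sample) / len(sample)

# Approximate standard error of a sample proportion.
std_error = math.sqrt(estimate * (1 - estimate) / len(sample))

print(f"true rate {true_rate:.3f}, estimate {estimate:.3f}, "
      f"std. error {std_error:.4f}")
```

The standard error indicates how much the estimate would typically vary from one random sample to the next, which is what makes a sample-based figure usable in place of a full count.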

What Is Autocorrelation in Econometrics?

Autocorrelation measures the relationship between a variable's values at different time periods. For this reason, it is sometimes called lagged correlation or serial correlation, since it is used to measure how the past value of a certain variable might predict future values of the same variable. Autocorrelation is a useful tool for traders, especially in technical analysis.
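The usual sample autocorrelation at a given lag can be computed directly. The function name and the two toy series below are illustrative assumptions.

```python
def autocorrelation(series, lag=1):
    """Sample autocorrelation of `series` at the given lag."""
    n = len(series)
    mean = sum(series) / n
    # Total squared deviation from the mean (the denominator).
    denom = sum((v - mean) ** 2 for v in series)
    # Cross-products of each value with the value `lag` periods earlier.
    numer = sum((series[t] - mean) * (series[t - lag] - mean)
                for t in range(lag, n))
    return numer / denom

trending = [1, 2, 3, 4, 5, 6, 7, 8]           # persistent series
alternating = [1, -1, 1, -1, 1, -1, 1, -1]    # series that flips sign each period

print(autocorrelation(trending))     # positive: high values follow high values
print(autocorrelation(alternating))  # negative: high values follow low values
```

A value near +1 suggests that past values carry over strongly into the next period, while a value near -1 suggests reversals.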

What Is Endogeneity in Econometrics?

An endogenous variable is a variable that is influenced by changes in another variable within the system being modeled. Due to the complexity of economic systems, it is difficult to determine all of the subtle relationships between different factors, and some variables may be partially endogenous and partially exogenous. In econometric studies, researchers must account for the possibility that the error term is correlated with the explanatory variables, which biases the estimated coefficients.
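The consequence of endogeneity for a simple regression can be stated in one line. This is the standard textbook result, written in conventional notation that is assumed here (u is the error term, plim denotes the probability limit as the sample grows).

```latex
% Model with an endogenous regressor: x is correlated with the error term
y_i = \beta_0 + \beta_1 x_i + u_i, \qquad \operatorname{Cov}(x_i, u_i) \neq 0

% The OLS slope then converges to the true slope plus a bias term
\operatorname{plim}\, \hat{\beta}_1^{\mathrm{OLS}}
  = \beta_1 + \frac{\operatorname{Cov}(x_i, u_i)}{\operatorname{Var}(x_i)}
```

The bias does not shrink as more data are collected, which is why endogeneity is usually addressed through the research design rather than through a larger sample.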

Econometrics is a popular discipline that integrates statistical tools and modeling for economic data, and it is frequently used by policymakers to forecast the result of policy changes. As with other statistical tools, there are many possibilities for error when econometric tools are used carelessly. Econometricians must be careful to justify their conclusions with sound reasoning as well as statistical inferences.

The Nobel Prize. "Simon Kuznets."

The Nobel Prize. "Ragnar Frisch."

The Nobel Prize. "Lawrence R. Klein."

1.3 The Economists’ Tool Kit

Learning Objectives

- Explain how economists test hypotheses, develop economic theories, and use models in their analyses.

- Explain how the all-other-things unchanged (ceteris paribus) problem and the fallacy of false cause affect the testing of economic hypotheses and how economists try to overcome these problems.

- Distinguish between normative and positive statements.

Economics differs from other social sciences because of its emphasis on opportunity cost, the assumption of maximization in terms of one’s own self-interest, and the analysis of choices at the margin. But certainly much of the basic methodology of economics and many of its difficulties are common to every social science—indeed, to every science. This section explores the application of the scientific method to economics.

Researchers often examine relationships between variables. A variable is something whose value can change. By contrast, a constant is something whose value does not change. The speed at which a car is traveling is an example of a variable. The number of minutes in an hour is an example of a constant.

Research is generally conducted within a framework called the scientific method, a systematic set of procedures through which knowledge is created. In the scientific method, hypotheses are suggested and then tested. A hypothesis is an assertion of a relationship between two or more variables that could be proven to be false. A statement is not a hypothesis if no conceivable test could show it to be false. The statement “Plants like sunshine” is not a hypothesis; there is no way to test whether plants like sunshine or not, so it is impossible to prove the statement false. The statement “Increased solar radiation increases the rate of plant growth” is a hypothesis; experiments could be done to show the relationship between solar radiation and plant growth. If solar radiation were shown to be unrelated to plant growth or to retard plant growth, then the hypothesis would be demonstrated to be false.

If a test reveals that a particular hypothesis is false, then the hypothesis is rejected or modified. In the case of the hypothesis about solar radiation and plant growth, we would probably find that more sunlight increases plant growth over some range but that too much can actually retard plant growth. Such results would lead us to modify our hypothesis about the relationship between solar radiation and plant growth.

If the tests of a hypothesis yield results consistent with it, then further tests are conducted. A hypothesis that has not been rejected after widespread testing and that wins general acceptance is commonly called a theory. A theory that has been subjected to even more testing and that has won virtually universal acceptance becomes a law. We will examine two economic laws in the next two chapters.

Even a hypothesis that has achieved the status of a law cannot be proven true. There is always a possibility that someone may find a case that invalidates the hypothesis. That possibility means that nothing in economics, or in any other social science, or in any science, can ever be proven true. We can have great confidence in a particular proposition, but it is always a mistake to assert that it is “proven.”

Models in Economics

All scientific thought involves simplifications of reality. The real world is far too complex for the human mind—or the most powerful computer—to consider. Scientists use models instead. A model is a set of simplifying assumptions about some aspect of the real world. Models are always based on assumed conditions that are simpler than those of the real world, assumptions that are necessarily false. A model of the real world cannot be the real world.

We will encounter our first economic model in Chapter 35 “Appendix A: Graphs in Economics”. For that model, we will assume that an economy can produce only two goods. Then we will explore the model of demand and supply. One of the assumptions we will make there is that all the goods produced by firms in a particular market are identical. Of course, real economies and real markets are not that simple. Reality is never as simple as a model; one point of a model is to simplify the world to improve our understanding of it.

Economists often use graphs to represent economic models. The appendix to this chapter provides a quick refresher course, if you think you need one, on understanding, building, and using graphs.

Models in economics also help us to generate hypotheses about the real world. In the next section, we will examine some of the problems we encounter in testing those hypotheses.

Testing Hypotheses in Economics

Here is a hypothesis suggested by the model of demand and supply: an increase in the price of gasoline will reduce the quantity of gasoline consumers demand. How might we test such a hypothesis?

Economists try to test hypotheses such as this one by observing actual behavior and using empirical (that is, real-world) data. The average retail price of gasoline in the United States rose from $2.12 per gallon on May 22, 2005, to $2.88 per gallon on May 22, 2006. The number of gallons of gasoline consumed by U.S. motorists rose 0.3% during that period.

The small increase in the quantity of gasoline consumed by motorists as its price rose is inconsistent with the hypothesis that an increased price will lead to a reduction in the quantity demanded. Does that mean that we should dismiss the original hypothesis? On the contrary, we must be cautious in assessing this evidence. Several problems exist in interpreting any set of economic data. One problem is that several things may be changing at once; another is that the initial event may be unrelated to the event that follows. The next two sections examine these problems in detail.

The All-Other-Things-Unchanged Problem

The hypothesis that an increase in the price of gasoline produces a reduction in the quantity demanded by consumers carries with it the assumption that there are no other changes that might also affect consumer demand. A better statement of the hypothesis would be: An increase in the price of gasoline will reduce the quantity consumers demand, ceteris paribus. Ceteris paribus is a Latin phrase that means “all other things unchanged.”

But things changed between May 2005 and May 2006. Economic activity and incomes rose both in the United States and in many other countries, particularly China, and people with higher incomes are likely to buy more gasoline. Employment rose as well, and people with jobs use more gasoline as they drive to work. Population in the United States grew during the period. In short, many things happened during the period, all of which tended to increase the quantity of gasoline people purchased.

Our observation of the gasoline market between May 2005 and May 2006 did not offer a conclusive test of the hypothesis that an increase in the price of gasoline would lead to a reduction in the quantity demanded by consumers. Other things changed and affected gasoline consumption. Such problems are likely to affect any analysis of economic events. We cannot ask the world to stand still while we conduct experiments in economic phenomena. Economists employ a variety of statistical methods to allow them to isolate the impact of single events such as price changes, but they can never be certain that they have accurately isolated the impact of a single event in a world in which virtually everything is changing all the time.
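One of the statistical methods alluded to here is to "hold income fixed" numerically: remove the part of price and quantity that moves with income, then relate what is left. The sketch below uses invented numbers and an assumed demand rule, not the 2005 to 2006 gasoline data, and the helper names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)

def slope(x, y):
    """Least-squares slope of y on x (with an intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx

def residuals(x, y):
    """What is left of y after removing its linear association with x."""
    b = slope(x, y)
    a = mean(y) - b * mean(x)
    return [yi - (a + b * xi) for xi, yi in zip(x, y)]

# Hypothetical data: income and price both trend upward over eight periods.
income = [50, 52, 54, 56, 58, 60, 62, 64]
price = [2.1, 2.0, 2.4, 2.1, 2.5, 2.4, 2.8, 2.5]
# Assumed demand rule for the illustration: a higher price lowers quantity,
# a higher income raises it.
quantity = [100 - 5 * p + 2 * m for p, m in zip(price, income)]

# Naive comparison that ignores income.
naive = slope(price, quantity)

# Partial out income from both variables, then regress residual on residual.
controlled = slope(residuals(income, price), residuals(income, quantity))

print(f"ignoring income: {naive:.1f}, holding income fixed: {controlled:.1f}")
```

In this constructed example the naive slope is positive, mirroring the observation that consumption rose along with price, while the income-adjusted slope recovers the negative price effect built into the data. With real data the adjustment is only as good as the list of other factors the analyst thinks to include.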

In laboratory sciences such as chemistry and biology, it is relatively easy to conduct experiments in which only selected things change and all other factors are held constant. The economists’ laboratory is the real world; thus, economists do not generally have the luxury of conducting controlled experiments.

The Fallacy of False Cause

Hypotheses in economics typically specify a relationship in which a change in one variable causes another to change. We call the variable that responds to the change the dependent variable; the variable that induces a change is called the independent variable. Sometimes the fact that two variables move together can suggest the false conclusion that one of the variables has acted as an independent variable that has caused the change we observe in the dependent variable.

Consider the following hypothesis: People wearing shorts cause warm weather. Certainly, we observe that more people wear shorts when the weather is warm. Presumably, though, it is the warm weather that causes people to wear shorts rather than the wearing of shorts that causes warm weather; it would be incorrect to infer from this that people cause warm weather by wearing shorts.

Reaching the incorrect conclusion that one event causes another because the two events tend to occur together is called the fallacy of false cause. The accompanying essay on baldness and heart disease suggests an example of this fallacy.

Because of the danger of the fallacy of false cause, economists use special statistical tests that are designed to determine whether changes in one thing actually do cause changes observed in another. Given the inability to perform controlled experiments, however, these tests do not always offer convincing evidence that persuades all economists that one thing does, in fact, cause changes in another.

In the case of gasoline prices and consumption between May 2005 and May 2006, there is good theoretical reason to believe the price increase should lead to a reduction in the quantity consumers demand. And economists have tested the hypothesis about price and the quantity demanded quite extensively. They have developed elaborate statistical tests aimed at ruling out problems of the fallacy of false cause. While we cannot prove that an increase in price will, ceteris paribus, lead to a reduction in the quantity consumers demand, we can have considerable confidence in the proposition.

Normative and Positive Statements

Two kinds of assertions in economics can be subjected to testing. We have already examined one, the hypothesis. Another testable assertion is a statement of fact, such as “It is raining outside” or “Microsoft is the largest producer of operating systems for personal computers in the world.” Like hypotheses, such assertions can be demonstrated to be false. Unlike hypotheses, they can also be shown to be correct. A statement of fact or a hypothesis is a positive statement.

Although people often disagree about positive statements, such disagreements can ultimately be resolved through investigation. There is another category of assertions, however, for which investigation can never resolve differences. A normative statement is one that makes a value judgment. Such a judgment is the opinion of the speaker; no one can “prove” that the statement is or is not correct. Here are some examples of normative statements in economics: “We ought to do more to help the poor.” “People in the United States should save more.” “Corporate profits are too high.” The statements are based on the values of the person who makes them. They cannot be proven false.

Because people have different values, normative statements often provoke disagreement. An economist whose values lead him or her to conclude that we should provide more help for the poor will disagree with one whose values lead to a conclusion that we should not. Because no test exists for these values, these two economists will continue to disagree, unless one persuades the other to adopt a different set of values. Many of the disagreements among economists are based on such differences in values and therefore are unlikely to be resolved.

Key Takeaways

- Economists try to employ the scientific method in their research.

- Scientists cannot prove a hypothesis to be true; they can only fail to prove it false.

- Economists, like other social scientists and scientists, use models to assist them in their analyses.

- Two problems inherent in tests of hypotheses in economics are the all-other-things-unchanged problem and the fallacy of false cause.

- Positive statements are factual and can be tested. Normative statements are value judgments that cannot be tested. Many of the disagreements among economists stem from differences in values.

Look again at the data in Table 1.1 “LSAT Scores and Undergraduate Majors”. Now consider the hypothesis: “Majoring in economics will result in a higher LSAT score.” Are the data given consistent with this hypothesis? Do the data prove that this hypothesis is correct? What fallacy might be involved in accepting the hypothesis?

Case in Point: Does Baldness Cause Heart Disease?

Mark Hunter – bald – CC BY-NC-ND 2.0.

A website called embarrassingproblems.com received the following email:

What did Dr. Margaret answer? Most importantly, she did not recommend that the questioner take drugs to treat his baldness, because doctors do not think that the baldness causes the heart disease. A more likely explanation for the association between baldness and heart disease is that both conditions are affected by an underlying factor. While noting that more research needs to be done, one hypothesis that Dr. Margaret offers is that higher testosterone levels might be triggering both the hair loss and the heart disease. The good news for people with early balding (which is really where the association with increased risk of heart disease has been observed) is that they have a signal that might lead them to be checked early on for heart disease.

Source: http://www.embarrassingproblems.com/problems/problempage230701.htm .
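The common-cause explanation can be illustrated with a small simulation. Everything in it is an assumption made for illustration: the scores are synthetic, the variable names are hypothetical, and no medical data are involved. A single hidden factor drives two outcomes that have no direct effect on each other.

```python
import math
import random

random.seed(1)  # fixed seed so the illustration is reproducible

def correlation(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def residuals(x, y):
    """What is left of y after removing its linear association with x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = (sum((a - mx) * (c - my) for a, c in zip(x, y))
         / sum((a - mx) ** 2 for a in x))
    return [c - (my + b * (a - mx)) for a, c in zip(x, y)]

n = 5000
# Hidden underlying factor (synthetic), plus independent noise in each outcome.
factor = [random.gauss(0, 1) for _ in range(n)]
hair_loss = [f + random.gauss(0, 1) for f in factor]
heart_risk = [f + random.gauss(0, 1) for f in factor]

raw = correlation(hair_loss, heart_risk)
# Correlation after removing the part of each outcome explained by the factor.
adjusted = correlation(residuals(factor, hair_loss),
                       residuals(factor, heart_risk))

print(f"raw correlation {raw:.2f}, after adjusting for the factor {adjusted:.2f}")
```

The two outcomes are clearly correlated in the raw data, yet the association largely disappears once the shared factor is accounted for. Inferring that one outcome causes the other from the raw correlation alone would be the fallacy of false cause.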

Answer to Try It! Problem

The data are consistent with the hypothesis, but it is never possible to prove that a hypothesis is correct. Accepting the hypothesis could involve the fallacy of false cause; students who major in economics may already have the analytical skills needed to do well on the exam.

Principles of Economics Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Annual Review of Economics

Volume 2, 2010, Review Article: Hypothesis Testing in Econometrics

- Joseph P. Romano 1, Azeem M. Shaikh 2, and Michael Wolf 3

- Affiliations: 1 Departments of Economics and Statistics, Stanford University, Stanford, California 94305; 2 Department of Economics, University of Chicago, Chicago, Illinois 60637; 3 Institute for Empirical Research in Economics, University of Zürich, CH-8006 Zürich, Switzerland

- Vol. 2:75-104 (Volume publication date September 2010) https://doi.org/10.1146/annurev.economics.102308.124342

- First published as a Review in Advance on February 09, 2010

- © Annual Reviews

This article reviews important concepts and methods that are useful for hypothesis testing. First, we discuss the Neyman-Pearson framework. Various approaches to optimality are presented, including finite-sample and large-sample optimality. Then, we summarize some of the most important methods, as well as resampling methodology, which is useful to set critical values. Finally, we consider the problem of multiple testing, which has witnessed a burgeoning literature in recent years. Along the way, we incorporate some examples that are current in the econometrics literature. While many problems with well-known successful solutions are included, we also address open problems that are not easily handled with current technology, stemming from such issues as lack of optimality or poor asymptotic approximations.
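To make the multiple testing problem concrete: when several hypotheses are tested at once, comparing each p-value with the usual 5 percent threshold inflates the chance of at least one false rejection. The sketch below shows two classical corrections, Bonferroni and Holm. They are chosen here purely for illustration and are not presented as the specific procedures the review covers. The p-values are made up.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H_i only if p_i <= alpha / m, where m is the number of tests."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]

def holm(p_values, alpha=0.05):
    """Holm's step-down procedure: test p-values from smallest to largest,
    relaxing the threshold at each step and stopping at the first failure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # all larger p-values are retained as well
    return reject

p_values = [0.001, 0.013, 0.04, 0.2]  # hypothetical p-values from four tests

print(bonferroni(p_values))
print(holm(p_values))
```

Both procedures control the probability of making any false rejection, but Holm's method rejects at least as many hypotheses as Bonferroni's, as the second p-value in this example shows.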

- Article Type: Review Article

Publication Date: 04 Sep 2010

ECONOMETRICS HANDBOOK: BASIC DEFINITION OF CONCEPTS, PRINCIPLES AND METHODS

- November 2023

- Igbinedion University

Hypotheses Testing in Econometrics

This course is part of Econometrics for Economists and Finance Practitioners Specialization

Instructor: Dr Leone Leonida

Recommended experience

Intermediate level

Learners must understand basic statistics (mean, variance, skewness, kurtosis). Learners should complete Classical Linear Regression Model.

What you'll learn

How to perform hypothesis testing

How to check that the estimated model is empirically adequate

How to use hypothesis testing for decision making

Skills you'll gain

- Calculate and perform the t-test

- Calculate and perform the various diagnostic tests

- Calculate and perform the F-test

- Prove the concept of unbiasedness

- Prove the concept of efficiency

There are 4 modules in this course

In this course, you will learn why it is rational to use the parameters recovered under the Classical Linear Regression Model for hypothesis testing in uncertain contexts. You will:

- Develop your knowledge of the statistical properties of the OLS estimator as you see whether key assumptions work.

- Learn that the OLS estimator has some desirable statistical properties, which are the basis of an approach for hypothesis testing to aid rational decision making.

- Examine the concepts of the null hypothesis and the alternative hypothesis, before exploring a statistic and a distribution under the null hypothesis, as well as a rule for deciding which hypothesis is more likely to hold true.

- Discover what happens to the decision-making framework if some assumptions of the CLRM are violated, as you explore diagnostic testing.

- Learn the steps involved in detecting violations, their consequences for the OLS estimator, and the techniques that must be adopted to address these problems.

Before starting this course, it is expected that you have an understanding of some basic statistics, including mean, variance, skewness and kurtosis. It is also recommended that you have completed and understood the previous course in this Specialisation: The Classical Linear Regression Model.

By the end of this course, you will be able to:

- Explain what hypothesis testing is

- Explain why the OLS is a rational approach to hypothesis testing

- Perform hypothesis testing for single and multiple hypotheses

- Explain the idea of diagnostic testing

- Perform hypothesis testing for single and multiple hypotheses with R

- Identify and resolve problems raised by identification of parameters

Properties of the OLS Approach

This week we are going to look at the properties of the OLS approach as a basis for the hypothesis testing, focussing on linearity, unbiasedness, efficiency and consistency.
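Unbiasedness can be illustrated with a small Monte Carlo experiment: generate many samples from a model whose true slope is known, estimate the slope by OLS in each sample, and check that the estimates average out to the truth. The parameter values and sample sizes below are arbitrary assumptions, and the sketch uses plain Python rather than the R used in the course labs.

```python
import random

random.seed(42)  # fixed seed so the illustration is reproducible

TRUE_INTERCEPT, TRUE_SLOPE = 1.0, 2.0  # assumed "true" parameters
x = list(range(30))                    # fixed regressor values

def ols_slope(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx

estimates = []
for _ in range(2000):
    # New random errors in every replication; x and the true line stay fixed.
    y = [TRUE_INTERCEPT + TRUE_SLOPE * xi + random.gauss(0, 1) for xi in x]
    estimates.append(ols_slope(x, y))

average = sum(estimates) / len(estimates)
print(f"true slope {TRUE_SLOPE}, average OLS estimate {average:.4f}")
```

Individual estimates differ from the true slope because of sampling noise, but their average sits very close to it, which is what unbiasedness means. Consistency is the related idea that a single estimate gets closer to the truth as the sample size grows.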

What's included

6 videos 4 readings 5 quizzes 3 discussion prompts

6 videos • Total 10 minutes

- Welcome to Hypotheses Testing in Econometrics • 1 minute

- Properties of the OLS Estimator • 2 minutes

- Presentation of Linearity • 1 minute

- Unbiasedness • 1 minute

- Efficiency • 1 minute

- Consistency • 1 minute

4 readings • Total 40 minutes

- Understanding Linearity of the OLS Estimator • 10 minutes

- Understanding Unbiasedness • 10 minutes

- Understanding Efficiency • 10 minutes

- Understanding Consistency • 10 minutes

5 quizzes • Total 140 minutes

- Knowledge Check: Properties of the OLS Approach • 20 minutes

- Linearity • 30 minutes

- Check Your Understanding of Unbiasedness • 30 minutes

- Check Your Understanding of Efficiency • 30 minutes

- Check Your Understanding of Consistency • 30 minutes

3 discussion prompts • Total 30 minutes

- The Importance of Unbiasedness • 10 minutes

- The Importance of Efficiency • 10 minutes

- Exploring Consistency • 10 minutes

Hypothesis Testing

This week we shall be exploring hypothesis testing, looking at the t-test and the F-test, and considering the problems raised by hypothesis testing.
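As a rough sketch of how the two statistics are computed for a simple regression, the example below tests whether the slope is zero. The data are invented, and plain Python is used here for self-containment even though the course labs use R. With a single restriction the F-statistic equals the square of the t-statistic, which the code lets you verify.

```python
import math

# Hypothetical data for a simple regression of y on x.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 2.9, 4.2, 4.8, 6.1, 6.8, 8.2, 8.9]

n = len(x)
mean_x, mean_y = sum(x) / n, sum(y) / n
sxx = sum((xi - mean_x) ** 2 for xi in x)
slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sxx
intercept = mean_y - slope * mean_x

# Residual sum of squares (unrestricted model) and total sum of squares
# (restricted model with an intercept only, i.e. slope forced to zero).
rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
tss = sum((yi - mean_y) ** 2 for yi in y)

# t-test of H0: slope = 0.
sigma2 = rss / (n - 2)               # estimated error variance
se_slope = math.sqrt(sigma2 / sxx)   # standard error of the slope
t_stat = slope / se_slope

# F-test of the same restriction: q = 1 restriction, n - 2 residual d.o.f.
f_stat = ((tss - rss) / 1) / (rss / (n - 2))

print(f"t = {t_stat:.2f}, F = {f_stat:.2f}, t squared = {t_stat ** 2:.2f}")
```

With 6 degrees of freedom, the two-sided 5 percent critical value for the t-statistic is roughly 2.45, so a t-statistic larger than that in absolute value leads to rejecting the null hypothesis. The F-test becomes the more useful tool when several coefficients are restricted jointly.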

4 videos 6 readings 7 quizzes 1 discussion prompt 2 ungraded labs

4 videos • Total 19 minutes

- Hypothesis Testing • 4 minutes

- The t-Test • 4 minutes

- The F-Test • 5 minutes

- Type I and Type II Errors • 4 minutes

6 readings • Total 60 minutes

- Using Hypothesis Testing • 10 minutes

- Exploring the Test of Significance • 10 minutes

- Example of the t-Test • 10 minutes

- Test Joint Hypothesis • 10 minutes

- An Example • 10 minutes

- Types of Errors • 10 minutes

7 quizzes • Total 180 minutes

- Knowledge Check: Hypothesis Testing • 20 minutes

- Building a Hypothesis • 10 minutes

- Interpreting t-Tests • 30 minutes

- Conditions for the F-Test • 30 minutes

- Differences between t and F-Tests • 30 minutes

- Non-Nested Models • 30 minutes

- Check Your Understanding of Hypothesis Testing • 30 minutes

1 discussion prompt • Total 10 minutes

- The Importance of Hypothesis Testing • 10 minutes

2 ungraded labs • Total 120 minutes

- Example t-Test with R • 60 minutes

- Example F-Test with R • 60 minutes

Diagnostic Testing I

This week we shall be discussing diagnostic testing as we look at non-linearity, violation of full rank and errors correlated with regressors.

4 videos 5 readings 5 quizzes 4 discussion prompts 2 ungraded labs

4 videos • Total 17 minutes

- Diagnostic Testing • 4 minutes • Preview module

- Violation of Linearity • 3 minutes

- Violation of Full Rank • 2 minutes

- Violation of Regression Model • 5 minutes

5 readings • Total 50 minutes

- Test for the Violations • 10 minutes

- Test for the Violation of Linearity • 10 minutes

- Test for the Violation • 10 minutes

- Consequences of the Violation • 10 minutes

5 quizzes • Total 140 minutes

- Knowledge Check: Diagnostic Testing I • 20 minutes

- Check Understanding of Diagnostic Testing • 30 minutes

- Solving Violations of Linearity • 30 minutes

- Solving Collinearity • 30 minutes

- Solving Endogeneity • 30 minutes

4 discussion prompts • Total 40 minutes

- The Importance of Studying Violations of Assumptions • 10 minutes

- How Do You Solve Linearity? • 10 minutes

- How Do You Solve Collinearity? • 10 minutes

- How Do You Solve Endogeneity? • 10 minutes

2 ungraded labs • Total 120 minutes

- Example of a Violation of Linearity with R • 60 minutes

- Example of Collinearity with R • 60 minutes

Diagnostic Testing II

This week we will continue to look at diagnostic testing as we consider spherical errors, heteroscedasticity, autocorrelation, stochastic regressors, and the non-normality of errors.

2 videos 7 readings 6 quizzes 1 peer review 4 discussion prompts 3 ungraded labs

2 videos • Total 5 minutes

- Stochastic Regressors • 2 minutes • Preview module

- Non-Normal Errors • 3 minutes

7 readings • Total 70 minutes

- Consequences of the Violations • 10 minutes

- Congratulations • 10 minutes

6 quizzes • Total 170 minutes

- Understanding Hypothesis Testing • 30 minutes

- Knowledge Check: Diagnostic Testing II • 20 minutes

- Understanding Heteroscedasticity • 30 minutes

- Understanding Autocorrelation • 30 minutes

- Understanding Stochastic Regressors • 30 minutes

- Understanding Non-Normal Errors • 30 minutes

1 peer review • Total 120 minutes

- Hypothesis and Diagnostic Testing • 120 minutes

4 discussion prompts • Total 40 minutes

- Importance of Testing for Heteroscedasticity • 10 minutes

- How Do You Solve Autocorrelation? • 10 minutes

- How Do You Solve Stochastic Regressors? • 10 minutes

- How Do You Solve Non-Normal Errors? • 10 minutes

3 ungraded labs • Total 240 minutes

- Example of Heteroscedasticity with R • 60 minutes

- Example of Autocorrelation with R • 60 minutes

- Example of Normality with R • 120 minutes


Queen Mary University of London is a leading research-intensive university with a difference – one that opens the doors of opportunity to anyone with the potential to succeed. Ranked 117 in the world, the University has over 28000 students and 4400 members of staff. We are a truly global university: over 160 nationalities are represented on our 5 campuses in London, and we also have a presence in Malta, Paris, Athens, Singapore and China. The reach of our education is extended still further through our online provision.


Econometrics: Making Theory Count

Finance & Development

Sam Ouliaris

If economic theory is to be a useful tool for policymaking, it must be quantifiable

Numbers racket (photo: Tom Grill/Corbis)

Economists develop economic models to explain consistently recurring relationships. Their models link one or more economic variables to other economic variables. For example, economists connect the amount individuals spend on consumer goods to disposable income and wealth, and expect consumption to increase as disposable income and wealth increase (that is, the relationship is positive).

There are often competing models capable of explaining the same recurring relationship, called an empirical regularity, but few models provide useful clues to the magnitude of the association. Yet this is what matters most to policymakers. When setting monetary policy, for example, central bankers need to know the likely impact of changes in official interest rates on inflation and the growth rate of the economy. It is in cases like this that economists turn to econometrics.

Econometrics uses economic theory, mathematics, and statistical inference to quantify economic phenomena. In other words, it turns theoretical economic models into useful tools for economic policymaking. The objective of econometrics is to convert qualitative statements (such as “the relationship between two or more variables is positive”) into quantitative statements (such as “consumption expenditure increases by 95 cents for every one dollar increase in disposable income”). Econometricians—practitioners of econometrics—transform models developed by economic theorists into versions that can be estimated. As Stock and Watson (2007) put it, “econometric methods are used in many branches of economics, including finance, labor economics, macroeconomics, microeconomics, and economic policy.” Economic policy decisions are rarely made without econometric analysis to assess their impact.

A daunting task

Certain features of economic data make it challenging for economists to quantify economic models. Unlike researchers in the physical sciences, econometricians are rarely able to conduct controlled experiments in which only one variable is changed and the response of the subject to that change is measured. Instead, econometricians estimate economic relationships using data generated by a complex system of related equations, in which all variables may change at the same time. That raises the question of whether there is even enough information in the data to identify the unknowns in the model.

Econometrics can be divided into theoretical and applied components.

Theoretical econometricians investigate the properties of existing statistical tests and procedures for estimating unknowns in the model. They also seek to develop new statistical procedures that are valid (or robust) despite the peculiarities of economic data—such as their tendency to change simultaneously. Theoretical econometrics relies heavily on mathematics, theoretical statistics, and numerical methods to prove that the new procedures have the ability to draw correct inferences.

Applied econometricians, by contrast, use econometric techniques developed by the theorists to translate qualitative economic statements into quantitative ones. Because applied econometricians are closer to the data, they often run into—and alert their theoretical counterparts to—data attributes that lead to problems with existing estimation techniques. For example, the econometrician might discover that the variance of the data (how much individual values in a series differ from the overall average) is changing over time.

The main tool of econometrics is the linear multiple regression model, which provides a formal approach to estimating how a change in one economic variable, the explanatory variable, affects the variable being explained, the dependent variable—taking into account the impact of all the other determinants of the dependent variable. This qualification is important because a regression seeks to estimate the marginal impact of a particular explanatory variable after taking into account the impact of the other explanatory variables in the model. For example, the model may try to isolate the effect of a 1 percentage point increase in taxes on average household consumption expenditure, holding constant other determinants of consumption, such as pretax income, wealth, and interest rates.
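As a rough illustration of "holding other determinants constant", the sketch below fits a multiple regression by ordinary least squares on simulated data. Everything in it is an assumption made for the example: the variable names, the data-generating coefficients (0.9 on income, -0.4 on the tax rate), and the helper functions `solve` and `ols`, which solve the normal equations directly rather than calling an econometrics package.

```python
import random

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def ols(rows, y):
    """Return OLS coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)

# Simulated households (hypothetical data, not from the article).
random.seed(0)
n = 500
income = [random.uniform(20, 100) for _ in range(n)]
tax = [random.uniform(10, 40) for _ in range(n)]
consumption = [5 + 0.9 * inc - 0.4 * t + random.gauss(0, 2)
               for inc, t in zip(income, tax)]

# Each row is (constant, income, tax rate).
X = [[1.0, inc, t] for inc, t in zip(income, tax)]
b0, b_income, b_tax = ols(X, consumption)
print(f"income: {b_income:.3f}, tax: {b_tax:.3f}")
```

The coefficient on `tax` is the estimated change in consumption for a one-unit change in the tax rate with income held fixed, which is the marginal-impact interpretation described above.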

Stages of development

The methodology of econometrics is fairly straightforward.

The first step is to suggest a theory or hypothesis to explain the data being examined. The explanatory variables in the model are specified, and the sign and/or magnitude of the relationship between each explanatory variable and the dependent variable are clearly stated. At this stage of the analysis, applied econometricians rely heavily on economic theory to formulate the hypothesis. For example, a tenet of international economics is that prices across open borders move together after allowing for nominal exchange rate movements (purchasing power parity). The empirical relationship between domestic prices and foreign prices (adjusted for nominal exchange rate movements) should be positive, and they should move together approximately one for one.

The second step is the specification of a statistical model that captures the essence of the theory the economist is testing. The model proposes a specific mathematical relationship between the dependent variable and the explanatory variables—on which, unfortunately, economic theory is usually silent. By far the most common approach is to assume linearity—meaning that any change in an explanatory variable will always produce the same change in the dependent variable (that is, a straight-line relationship).

Because it is impossible to account for every influence on the dependent variable, a catchall variable is added to the statistical model to complete its specification. The role of the catchall is to represent all the determinants of the dependent variable that cannot be accounted for—because of either the complexity of the data or its absence. Economists usually assume that this “error” term averages to zero and is unpredictable, simply to be consistent with the premise that the statistical model accounts for all the important explanatory variables.

The third step involves using an appropriate statistical procedure and an econometric software package to estimate the unknown parameters (coefficients) of the model using economic data. This is often the easiest part of the analysis thanks to readily available economic data and excellent econometric software. Still, the famous GIGO (garbage in, garbage out) principle of computing also applies to econometrics. Just because something can be computed doesn’t mean it makes economic sense to do so.

The fourth step is by far the most important: administering the smell test. Does the estimated model make economic sense—that is, yield meaningful economic predictions? For example, are the signs of the estimated parameters that connect the dependent variable to the explanatory variables consistent with the predictions of the underlying economic theory? (In the household consumption example, for instance, the validity of the statistical model would be in question if it predicted a decline in consumer spending when income increased). If the estimated parameters do not make sense, how should the econometrician change the statistical model to yield sensible estimates? And does a more sensible estimate imply an economically significant effect? This step, in particular, calls on and tests the applied econometrician’s skill and experience.

Testing the hypothesis

The main tool of the fourth stage is hypothesis testing, a formal statistical procedure during which the researcher makes a specific statement about the true value of an economic parameter, and a statistical test determines whether the estimated parameter is consistent with that hypothesis. If it is not, the researcher must either reject the hypothesis or make new specifications in the statistical model and start over.
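A minimal sketch of such a test, using the purchasing power parity example from earlier: regress domestic prices on exchange-rate-adjusted foreign prices and ask whether the slope is consistent with a hypothesized value. The data are simulated, the function name `slope_t_test` is hypothetical, and the p-value uses a normal approximation to the t distribution, which is an assumption that is reasonable only in large samples.

```python
import random
from statistics import NormalDist, mean

def slope_t_test(x, y, null_value=0.0):
    """Test H0: slope == null_value in a simple regression of y on x."""
    n = len(x)
    xbar, ybar = mean(x), mean(y)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    ssr = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = (ssr / (n - 2) / sxx) ** 0.5          # standard error of the slope
    t_stat = (slope - null_value) / se
    # Two-sided p-value, normal approximation (assumes a large sample).
    p_value = 2 * (1 - NormalDist().cdf(abs(t_stat)))
    return slope, se, t_stat, p_value

# Simulated prices with a true slope of one (hypothetical data).
random.seed(1)
foreign = [random.uniform(0, 10) for _ in range(400)]
domestic = [0.5 + 1.0 * f + random.gauss(0, 1) for f in foreign]

slope, se, t_zero, p_zero = slope_t_test(foreign, domestic, null_value=0.0)
_, _, t_one, p_one = slope_t_test(foreign, domestic, null_value=1.0)
print(f"slope={slope:.3f}  H0 slope=0: p={p_zero:.4f}  H0 slope=1: p={p_one:.4f}")
```

A small p-value for the hypothesis that the slope is zero says the data are inconsistent with "no relationship"; the test against a slope of one is the one that speaks to the one-for-one prediction.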

If all four stages proceed well, the result is a tool that can be used to assess the empirical validity of an abstract economic model. The empirical model may also be used to construct a way to forecast the dependent variable, potentially helping policymakers make decisions about changes in monetary and/or fiscal policy to keep the economy on an even keel.

Students of econometrics are often fascinated by the ability of linear multiple regression to estimate economic relationships. Three fundamentals of econometrics are worth remembering.

• First, the quality of the parameter estimates depends on the validity of the underlying economic model.

• Second, if a relevant explanatory variable is excluded, the most likely outcome is poor parameter estimates.

• Third, even if the econometrician identifies the process that actually generated the data, the parameter estimates have only a slim chance of being equal to the actual parameter values that generated the data. Nevertheless, the estimates will be used because, statistically speaking, they will become precise as more data become available.
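The third point can be illustrated with a small simulation. The sketch below assumes a known data-generating process (a true slope of 0.95, echoing the consumption example) and repeatedly estimates the slope at two sample sizes; the function names and all numbers are choices made for the illustration.

```python
import random
from statistics import mean, pstdev

def ols_slope(x, y):
    """Slope of a simple regression of y on x."""
    xbar, ybar = mean(x), mean(y)
    sxy = sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
    sxx = sum((a - xbar) ** 2 for a in x)
    return sxy / sxx

def slope_spread(n, reps=200, true_slope=0.95, seed=0):
    """Mean and standard deviation of slope estimates across simulated samples."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        x = [rng.uniform(0, 10) for _ in range(n)]
        y = [2.0 + true_slope * xi + rng.gauss(0, 1) for xi in x]
        estimates.append(ols_slope(x, y))
    return mean(estimates), pstdev(estimates)

mean_small, sd_small = slope_spread(25)
mean_large, sd_large = slope_spread(400)
print(f"n=25:  mean={mean_small:.3f}, sd={sd_small:.3f}")
print(f"n=400: mean={mean_large:.3f}, sd={sd_large:.3f}")
```

Individual estimates almost never equal 0.95 exactly, but they are centered on it, and their spread shrinks as the sample grows.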

Econometrics, by design, can yield correct predictions on average, but only with the help of sound economics to guide the specification of the empirical model. Even though it is a science, with well-established rules and procedures for fitting models to economic data, in practice econometrics is an art that requires considerable judgment to obtain estimates useful for policymaking.

Sam Ouliaris is a Senior Economist in the IMF Institute.


What is Econometrics?


Econometrics is an area of economics where statistical and mathematical methods are used to analyze economic data. Individuals who are involved with econometrics are referred to as econometricians.

Econometricians test economic theories and hypotheses by using statistical tools such as probability, statistical inference, regression analysis, frequency distributions, and more. After testing economic theories, econometricians can compare the results with real data and observations, which can be helpful in forecasting future economic trends.

The purpose is to use statistical modeling and analysis in order to transform qualitative economic concepts into quantitative information that individuals can use. For example, policymakers can use the information to create new fiscal and monetary policies to stimulate the economy.

Suppose that policymakers are creating a new policy to increase the number of jobs in order to lower the unemployment rate and boost the economy. Econometricians use statistical models to test whether the policy is likely to have the hypothesized effect.

The following steps are the methodology of econometrics:

- Econometricians who are examining a dataset will suggest a theory or hypothesis to explain the data. At this stage, econometricians would define variables found in the economic model and the relationship between different variables. In order to come up with a hypothesis to explain the relationships, econometricians would look at existing economic theories.

- The second stage is to define a statistical model to quantify the economic theory that is being analyzed in the first step.

- In the third stage, statistical procedures are used to estimate the unknown parameters of the statistical model. Econometricians typically use econometric software to assist with this step.

- In the fourth stage, hypothesis testing is done to determine whether the hypothesis should be rejected. If it is rejected, the econometrician should revise the specification of the statistical model. The purpose of doing so is to assess the validity of the economic model.

There are various approaches to econometrics, and it is not limited to the methodology described above. Other methodologies include the vector autoregression approach and the Cowles Commission approach.

In the past, econometricians have studied patterns and relationships between different economic concepts, including:

- Income and expenditure

- Production, supply, and cost

- Labor and capital

- Salary and productivity

Econometrics can be separated into two main categories: applied and theoretical. The main goal for an applied econometrician is to turn qualitative economic statements into quantitative ones.

Applied econometrics refers to the idea of how economic data and theories are used to draw conclusions to improve decision-making and assist in solving economic issues. Its purpose is to enable the government, policymakers, businesses, and financial institutions to gain insight into possible solutions that can be used to solve economic problems. In order to do so, applied econometricians would analyze economic metrics, try to find out if there are any statistical trends, and predict what the outcome would be for an economic issue.

For example, suppose an applied econometrician is comparing household income with inflation rates and concludes that there is a relationship between the two. As a result, the government can use the research from econometricians to impose changes to policies that can increase household income during times of inflation.

Theoretical econometrics is about analyzing the properties of existing statistical procedures used to estimate unknown parameters in economic models. Besides analyzing current statistical procedures, theoretical econometricians also develop new statistical procedures and methodologies in order to explain anomalies found in economic data.

As a result, theoretical econometricians depend on mathematical techniques and statistical theories to ensure that the new procedures that they develop can successfully generate correct economic conclusions.


Introductory Econometrics

Chapter 17: Joint Hypothesis Testing

Chapter 16 shows how to test a hypothesis about a single slope parameter in a regression equation. This chapter explains how to test hypotheses about more than one of the parameters in a multiple regression model. Simultaneous multiple parameter hypothesis testing generally requires constructing a test statistic that measures the difference in fit between two versions of the same model.

An Example of a Test Involving More than One Parameter

One of the central tasks in economics is explaining savings behavior. National savings rates vary considerably across countries, and the United States has been at the low end in recent decades. Most studies of savings behavior by economists look at strictly economic determinants of savings. Differences in national savings rates, however, seem to reflect more than just differences in the economic environment. In a study of individual savings behavior, Carroll et al. (1999) examined the hypothesis that cultural factors play a role. Specifically, they asked the question, Does national origin help to explain differences in savings rates across a group of immigrants to the United States? Using 1980 and 1990 U.S. Census data with data on immigrants from 16 countries and on native-born Americans, Carroll et al. estimated a model similar to the following (see note 1):

For reasons that will become obvious, we call this the unrestricted model. The dependent variable is the household savings rate. Age and education measure, respectively, the age and education of the household head (both in years). The error term reflects omitted variables that affect savings rates as well as the influence of luck. The subscript h indexes households. A series of 16 dummy variables indicate the national origin of the immigrants; for example, China_h = 1 if both husband and wife in household h were Chinese immigrants (see note 2). Suppose that the value for the coefficient multiplying China is 0.12. This would indicate that, with other factors controlled, immigrants of Chinese origin have a savings rate 12 percentage points higher than the base case (which in this regression consists of people who were born in the United States).

If there are no cultural effects on savings, then all the coefficients multiplying the dummy variables for national origin ought to be equal to each other. In other words, if culture does not matter, national origin ought not to affect savings rates ceteris paribus. This is a null hypothesis involving 16 parameters and 16 equal signs:

The alternative hypothesis simply negates the null hypothesis, meaning that immigrants from at least one country have different savings rates than immigrants from other countries:

Now, if the null hypothesis is true, then an alternative, simpler model describes the data generation process:

Relative to the original model, the one above is a restricted model. We can test the null hypothesis with a new test statistic, the F-statistic, which essentially measures the difference between the fit of the original and restricted models above. The test is known as an F-test. The F-statistic will not have a normal distribution. Under the often-made assumption that the error terms are normally distributed, when the null is true, the test statistic follows an F distribution, which accounts for the name of the statistic. We will need to learn about the F- and the related chi-square distributions in order to calculate the P-value for the F-test.
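In symbols, the two models and the test statistic described above can be sketched as follows. The notation is an assumption made for this sketch, not the authors' own: D_{j,h} stands for the j-th national-origin dummy, \delta_j for its coefficient, SSR_U and SSR_R for the sums of squared residuals of the unrestricted and restricted regressions, q for the number of restrictions (16 here), n for the number of households, and k for the number of parameters in the unrestricted model (19 here: intercept, age, education, and 16 dummies).

```latex
% Unrestricted model (D_{j,h} is a placeholder for the j-th origin dummy)
\text{SavingsRate}_h = \beta_0 + \beta_1\,\text{Age}_h + \beta_2\,\text{Education}_h
    + \sum_{j=1}^{16} \delta_j D_{j,h} + \varepsilon_h

% Null and alternative hypotheses
H_0:\ \delta_1 = \delta_2 = \dots = \delta_{16} = 0
\qquad
H_1:\ \delta_j \neq 0 \ \text{for at least one } j

% Restricted model (the unrestricted model with H_0 imposed)
\text{SavingsRate}_h = \beta_0 + \beta_1\,\text{Age}_h + \beta_2\,\text{Education}_h + \varepsilon_h

% F-statistic comparing the fit of the two models
F = \frac{(SSR_R - SSR_U)/q}{SSR_U/(n-k)}
```

Because the base case is native-born Americans, setting every dummy coefficient to zero is the same as saying that no origin group differs from the base case, which gives 16 parameters and 16 equal signs.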

F-Test Basics

The F-distribution is named after Ronald A. Fisher, a leading statistician of the first half of the twentieth century. This chapter demonstrates that the F distribution is a ratio of two chi-square random variables and that, as the number of observations increases, the F-distribution comes to resemble the chi-square distribution. Karl Pearson popularized the chi-square distribution beginning in 1900.

The Whole Model F-Test (discussed in Section 17.2) is commonly used as a test of the overall significance of the included independent variables in a regression model. In fact, it is so often used that Excel’s LINEST function and most other statistical software report this statistic. We will show that there are many other F-tests that facilitate tests of a variety of competing models. The idea that there are competing models opens the door to a difficult question: How do we decide which model is the right one? One way to answer this question is with an F-test. At first glance, one might consider measures of fit such as R² or the sum of squared residuals (SSR) as a guide. But these statistics have a serious weakness – as you include additional independent variables, the R² and SSR are guaranteed (practically speaking) to improve. Thus, naive reliance on these measures of fit leads to kitchen sink regression – that is, we throw in as many variables as we can find (the proverbial kitchen sink) in an effort to optimize the fit.

The problem with kitchen sink regression is that, for a particular sample, it will yield a higher R² or lower SSR than a regression with fewer X variables, but the true model may be the one with the smaller number of X variables. This will be shown via a concrete example in Section 17.5. The F-test provides a way to discriminate between alternative models. It recognizes that there will be differences in measures of fit when one model is compared with another, but it requires that the loss of fit be substantial enough to reject the reduced model.
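The following sketch illustrates the point on simulated data: adding a regressor that is irrelevant by construction still lowers the SSR, and the F-statistic measures whether the improvement is large relative to the remaining noise. The helper names (`solve`, `ols_ssr`), the variable `junk`, and the data-generating process are all assumptions made for the illustration, not part of the chapter's Excel workbooks.

```python
import random

def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def ols_ssr(rows, y):
    """Fit OLS via the normal equations and return the sum of squared residuals."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    return sum((yi - sum(bj * xj for bj, xj in zip(beta, r))) ** 2
               for r, yi in zip(rows, y))

random.seed(2)
n = 200
x1 = [random.uniform(0, 10) for _ in range(n)]
junk = [random.gauss(0, 1) for _ in range(n)]       # irrelevant by construction
y = [1 + 2 * a + random.gauss(0, 1) for a in x1]    # true model uses x1 only

ssr_restricted = ols_ssr([[1.0, a] for a in x1], y)
ssr_unrestricted = ols_ssr([[1.0, a, j] for a, j in zip(x1, junk)], y)

q = 1   # number of restrictions (coefficient on junk equals zero)
k = 3   # parameters in the unrestricted model
f_stat = ((ssr_restricted - ssr_unrestricted) / q) / (ssr_unrestricted / (n - k))
print(f"SSR restricted={ssr_restricted:.2f}, unrestricted={ssr_unrestricted:.2f}, F={f_stat:.2f}")
```

The unrestricted SSR can never exceed the restricted one, so the fit alone cannot tell the models apart; the F-statistic is then compared with a critical value from the F distribution with q and n - k degrees of freedom.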

Organization

In general, the F-test can be used to test any restriction on the parameters in the equation. The idea of a restricted regression is fundamental to the logic of the F-test, and thus it is discussed in detail in the next section. Because the F-distribution is actually the ratio of two chi-square (χ²) distributed random variables (divided by their respective degrees of freedom), Section 17.3 explains the chi-square distribution and points out that, when the errors are normally distributed, the sum of squared residuals is a random variable with a chi-square distribution. Section 17.4 demonstrates that the ratio of two chi-square distributed random variables is an F-distributed random variable. The remaining sections of this chapter put the F-statistic into practice. Section 17.5 does so in the context of Galileo’s model of acceleration, whereas Section 17.6 considers an example involving food stamps. We use the food stamp example to show that, when the restriction involves a single equals sign, one can rewrite the original model to make it possible to employ a t-test instead of an F-test. The t- and F-tests yield equivalent results in such cases. We apply the F-test to a real-world example in Section 17.7. Finally, Section 17.8 discusses multicollinearity and the distinction between confidence intervals for a single parameter and confidence regions for multiple parameters.

Note 1: Their actual model is, not surprisingly, substantially more complicated.

Note 2: There were 17 countries of origin in the study, including 900 households selected at random from the United States. Only married couples from the same country of origin were included in the sample. Other restrictions were that the household head must have been older than 35 and younger than 50 in 1980.

Excel Workbooks

ChiSquareDist.xls CorrelatedEstimates.xls FDist.xls FDistEarningsFn.xls FDistFoodStamps.xls FDistGalileo.xls MyMonteCarlo.xls NoInterceptBug.xls

Aaron Smith

Chapter 6 - Hypothesis Testing and Confidence Intervals


Learning Objectives

- Test a hypothesis about a regression coefficient

- Form a confidence interval around a regression coefficient

- Show how the central limit theorem allows econometricians to ignore assumption CR4 in large samples

- Present results from a regression model

- Central Limit Theorem in Action

What We Learned

- Our result is the same whether we drop CR4 and invoke the central limit theorem (valid in large samples) or whether we impose CR4 (necessary in small samples).

- Confidence intervals are narrow when the sum of squared errors is small, the sample is large, or there’s a lot of variation in X.

- How to present results from a regression model.
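The point about confidence interval width can be checked with a short simulation. This is a sketch under stated assumptions: the data are simulated, the function name `slope_confidence_interval` is hypothetical, and the interval uses a normal critical value, which relies on the large-sample argument rather than on normally distributed errors.

```python
import random
from statistics import NormalDist, mean

def slope_confidence_interval(x, y, level=0.95):
    """Large-sample confidence interval for the slope of a simple regression."""
    n = len(x)
    xbar, ybar = mean(x), mean(y)
    sxx = sum((xi - xbar) ** 2 for xi in x)          # variation in X
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    ssr = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = (ssr / (n - 2) / sxx) ** 0.5
    z = NormalDist().inv_cdf(0.5 + level / 2)        # about 1.96 for 95%
    return slope - z * se, slope + z * se

rng = random.Random(3)
n = 200
narrow_x = [rng.uniform(4, 6) for _ in range(n)]     # little variation in X
wide_x = [rng.uniform(0, 10) for _ in range(n)]      # a lot of variation in X
y_narrow = [1 + 0.5 * xi + rng.gauss(0, 1) for xi in narrow_x]
y_wide = [1 + 0.5 * xi + rng.gauss(0, 1) for xi in wide_x]

lo_n, hi_n = slope_confidence_interval(narrow_x, y_narrow)
lo_w, hi_w = slope_confidence_interval(wide_x, y_wide)
width_narrow = hi_n - lo_n
width_wide = hi_w - lo_w
print(f"width with narrow X: {width_narrow:.3f}, with wide X: {width_wide:.3f}")
```

With the same sample size and error variance, the interval built from the widely spread X values is much narrower, because the standard error divides by the variation in X.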


Econometrics For Dummies Cheat Sheet


To accurately perform these tasks, you need econometric model-building skills, quality data, and appropriate estimation strategies. And both economic and statistical assumptions are important when using econometrics to estimate models.

Econometric estimation and the CLRM assumptions

Econometric techniques are used to estimate economic models, which ultimately allow you to explain how various factors affect some outcome of interest or to forecast future events. The ordinary least squares (OLS) technique is the most popular method of performing regression analysis and estimating econometric models, because in standard situations (meaning the model satisfies a series of statistical assumptions) it produces optimal (the best possible) results.

The proof that OLS generates the best results is known as the Gauss-Markov theorem, but the proof requires several assumptions. These assumptions, known as the classical linear regression model (CLRM) assumptions, are the following:

- The model is linear in its parameters, meaning the regression coefficients don’t enter the function being estimated as exponents (although the variables can have exponents).

- The values for the independent variables are derived from a random sample of the population, and they contain variability.

- The explanatory variables don’t have perfect collinearity (that is, no independent variable can be expressed as a linear function of any other independent variables).

- The error term has zero conditional mean, meaning that the average error is zero at any specific value of the independent variable(s).

- The model has no heteroskedasticity (meaning the variance of the error is the same regardless of the independent variable’s value).

- The model has no autocorrelation (the error term doesn’t exhibit a systematic relationship over time).

If one (or more) of the CLRM assumptions isn’t met (which econometricians call failing), then OLS may not be the best estimation technique. Fortunately, econometric tools allow you to modify the OLS technique or use a completely different estimation method if the CLRM assumptions don’t hold.
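As a rough illustration of OLS under the assumptions listed above, the following sketch simulates data that satisfies them and estimates the coefficients with NumPy's least-squares solver. The helper name `ols`, the true coefficients (1.5 and 2.0), and the simulated sample are all assumptions made for the example.

```python
import numpy as np

def ols(X, y):
    """Estimate OLS coefficients for y = X b + e, adding an intercept column."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    residuals = y - X @ beta
    return beta, residuals

# Simulated sample: random draws of x with variability, and errors that are
# zero-mean, homoskedastic, and independent of each other.
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=200)
e = rng.normal(0, 1, size=200)
y = 1.5 + 2.0 * x + e

beta, resid = ols(x, y)
print(beta)  # estimated intercept and slope
```

When the data really are generated this way, the estimates should land close to the true values; when an assumption fails, the same code still runs, but the estimates or their standard errors may no longer be reliable.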

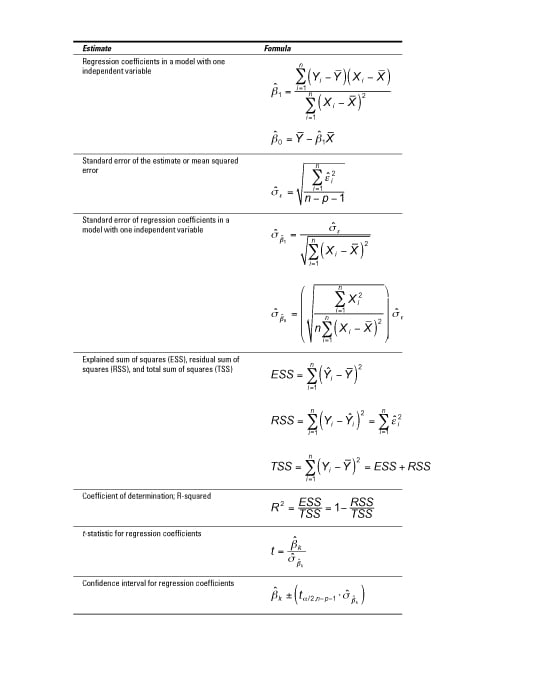

Useful formulas in econometrics

After you acquire data and choose the best econometric model for the question you want to answer, use formulas to produce the estimated output.

In some cases, you have to perform these calculations by hand (sorry). However, even if your problem allows you to use econometric software such as STATA to generate results, it’s nice to know what the computer is doing.

Here’s a look at the most common estimators from an econometric model along with the formulas used to produce them.

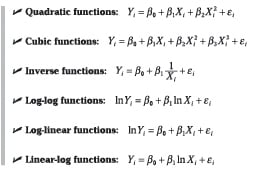

Econometric analysis: Looking at flexibility in models

You may want to allow your econometric model to have some flexibility, because economic relationships are rarely linear. Many situations are subject to the “law” of diminishing marginal benefits and/or increasing marginal costs, which implies that the impact of the independent variables won’t be constant (linear).
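One way to see what this flexibility means in practice is a quadratic specification, sketched below on noise-free hypothetical data. The coefficients (2, 3, and -0.2) and the helper `marginal_effect` are assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical data with a diminishing marginal benefit: y = 2 + 3x - 0.2x^2
x = np.linspace(0, 6, 25)
y = 2 + 3 * x - 0.2 * x**2

# The model is nonlinear in x but still linear in the parameters, so OLS applies.
X = np.column_stack([np.ones_like(x), x, x**2])
b0, b1, b2 = np.linalg.lstsq(X, y, rcond=None)[0]

def marginal_effect(x_value):
    """Slope of the fitted curve at x_value: dy/dx = b1 + 2*b2*x."""
    return b1 + 2 * b2 * x_value

print(marginal_effect(1.0), marginal_effect(5.0))  # the effect shrinks as x grows
```

Because the squared term has a negative coefficient, the impact of an extra unit of x is not constant: it falls as x increases.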

The precise functional form depends on your specific application, but the most common are as follows:

Typical problems estimating econometric models

If the CLRM doesn’t work for your data because one of its assumptions doesn’t hold, then you have to address the problem before you can finalize your analysis.

Fortunately, one of the primary contributions of econometrics is the development of techniques to address such problems or other complications with the data that make standard model estimation difficult or unreliable.

The following table lists the names of the most common estimation issues, a brief definition of each one, their consequences, typical tools used to detect them, and commonly accepted methods for resolving each problem.

| Problem | Definition | Consequences | Detection | Solution |
|---|---|---|---|---|
| High multicollinearity | Two or more independent variables in a regression model exhibit a close linear relationship. | Large standard errors and insignificant t-statistics; coefficient estimates sensitive to minor changes in model specification; nonsensical coefficient signs and magnitudes | Pairwise correlation coefficients; variance inflation factor (VIF) | 1. Collect additional data. 2. Re-specify the model. 3. Drop redundant variables. |
| Heteroskedasticity | The variance of the error term changes in response to a change in the value of the independent variables. | Inefficient coefficient estimates; biased standard errors; unreliable hypothesis tests | Park test; Goldfeld-Quandt test; Breusch-Pagan test; White test | 1. Weighted least squares (WLS) 2. Robust standard errors |
| Autocorrelation | An identifiable relationship (positive or negative) exists between the values of the error in one period and the values of the error in another period. | Inefficient coefficient estimates; biased standard errors; unreliable hypothesis tests | Geary or runs test; Durbin-Watson test; Breusch-Godfrey test | 1. Cochrane-Orcutt transformation 2. Prais-Winsten transformation 3. Newey-West robust standard errors |
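Two of the detection tools in the table, the variance inflation factor and the Durbin-Watson statistic, are simple enough to compute by hand. The sketch below uses plain NumPy with hypothetical helper names (`r_squared`, `vif`, `durbin_watson`); dedicated econometrics packages offer their own versions, which are not shown here.

```python
import numpy as np

def r_squared(X, y):
    """R-squared from an OLS fit of y on X (an intercept is added)."""
    X = np.column_stack([np.ones(len(y)), X])
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    resid = y - X @ beta
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

def vif(X, j):
    """Variance inflation factor of column j: 1 / (1 - R_j^2),
    where R_j^2 comes from regressing column j on the other columns."""
    others = np.delete(X, j, axis=1)
    return 1.0 / (1.0 - r_squared(others, X[:, j]))

def durbin_watson(resid):
    """Durbin-Watson statistic: values near 2 suggest no first-order
    autocorrelation; values well below 2 suggest positive autocorrelation."""
    resid = np.asarray(resid, dtype=float)
    return np.sum(np.diff(resid) ** 2) / np.sum(resid ** 2)

# Hypothetical regressors: x2 is almost a copy of x1, x3 is unrelated.
rng = np.random.default_rng(1)
x1 = rng.normal(size=100)
x2 = x1 + 0.05 * rng.normal(size=100)
x3 = rng.normal(size=100)
X = np.column_stack([x1, x2, x3])
print(vif(X, 0), vif(X, 2))
```

A common rule of thumb treats a VIF above 10 as a sign of high multicollinearity, although the threshold is a convention rather than a formal test.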

About This Article

This article is from the book Econometrics For Dummies.

About the book author:

Roberto Pedace, PhD, is an associate professor in the Department of Economics at Scripps College. His published work has appeared in Economic Inquiry, Industrial Relations, the Southern Economic Journal, Contemporary Economic Policy, the Journal of Sports Economics, and other outlets.


Top 4 Types of Hypothesis in Consumption (With Diagram)

The following points highlight the top four types of Hypothesis in Consumption. The types of Hypothesis are: 1. The Post-Keynesian Developments 2. The Relative Income Hypothesis 3. The Life-Cycle Hypothesis 4. The Permanent Income Hypothesis.

Hypothesis Type # 1. The Post-Keynesian Developments:

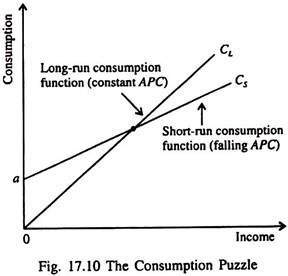

Data collected and examined in the post-Second World War period (1945-) confirmed the Keynesian consumption function.

Time series data collected over long periods showed that the relation between income and consumption was different from what cross-section data revealed.

In the short run, there was a non-proportional relation between income and consumption. But in the long run the relation was proportional. By constructing new aggregate data on consumption and income from 1869 and examining the same, Simon Kuznets discovered that the ratio of consumption to income was fairly stable from decade to decade, despite large increases in income over the period he studied.

This contradicted Keynes’ conjecture that the average propensity to consume would fall with increases in income. Kuznets’ findings indicated that the APC is fairly constant over long periods of time. This fact presented a puzzle which is illustrated in Fig. 17.10.

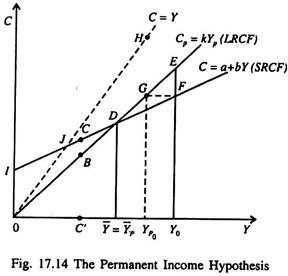

Studies of cross-section (household) data and short time series confirmed the Keynesian hypothesis about the relationship between consumption and income, as indicated by the short-run consumption function $C_S$ in Fig. 17.10.

But studies of long time series found that APC did not vary systematically with income, as is shown by the long-run consumption function $C_L$. The short-run consumption function has a falling APC, whereas the long-run consumption function has a constant APC.

Subsequent research on consumption attempted to explain how these two consumption functions could be consistent with each other.

Various attempts have been made to reconcile this conflicting evidence. In this context, mention has to be made of James Duesenberry (who developed the relative income hypothesis), Ando, Brumberg and Modigliani (who developed the life cycle hypothesis of saving behaviour), and Milton Friedman (who developed the permanent income hypothesis of consumption behaviour).

All these economists proposed explanations of these seemingly contradictory findings. These hypotheses may now be discussed one by one.

Hypothesis Type # 2. The Relative Income Hypothesis :

In 1949, James Duesenberry presented the relative income hypothesis. According to this hypothesis, saving (and hence consumption) depends on relative income. The saving function is expressed as $S_t = f(Y_t / Y_p)$, where $Y_t / Y_p$ is the ratio of current income to some previous peak income. This ratio is called relative income. Thus current consumption or saving is not a function of current income but of relative income.

Duesenberry pointed out that during a depression, when income falls, consumption does not fall much. People try to protect their living standards either by reducing their past savings (or accumulated wealth) or by borrowing.

However, as the economy gradually moves first into the recovery and then into the prosperity phase of the business cycle, consumption does not rise proportionately even as income increases. People use a portion of their income either to restore the old saving rate or to repay their old debt.

Thus we see that there is a lack of symmetry in people’s consumption behaviour. People find it more difficult to reduce their consumption level than to raise it. This asymmetrical behaviour of consumers is known as the ratchet effect.

Thus if we observe a consumer’s short-run behaviour we find a non-proportional relation between income and consumption. Thus MPC is less than APC in the short run, as Keynes’s absolute income hypothesis has postulated. But if we study a consumer’s behaviour in the long run, i.e., over the entire business cycle we find a proportional relation between income and consumption. This means that in the long run MPC = APC.
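The short-run and long-run behaviour described above can be traced with a small numerical sketch. The text only says that saving is some function of relative income, so the linear saving rule below (saving rate = a + b × relative income), the parameter values, and the income path are all assumptions made purely for illustration.

```python
def apc_path(incomes, a=0.0, b=0.1):
    """APC in each period under an assumed linear saving rule:
    saving rate = a + b * (current income / previous peak income)."""
    peak = incomes[0]              # treat the first income as the initial peak
    apcs = []
    for y in incomes:
        saving_rate = a + b * (y / peak)
        apcs.append(1.0 - saving_rate)
        peak = max(peak, y)        # update the peak for the next period
    return apcs

# Hypothetical income path: growth, a recession, a partial recovery, new growth.
incomes = [100.0, 110.0, 99.0, 104.5, 121.0]
apcs = apc_path(incomes)
print(apcs)
```

Under this assumed rule, the APC rises when income drops below its previous peak (consumption falls less than income) and returns to the same level whenever income grows at the same rate relative to its previous peak, which mirrors the non-proportional short-run and proportional long-run relations.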

Hypothesis Type # 3. The Life-Cycle Hypothesis :

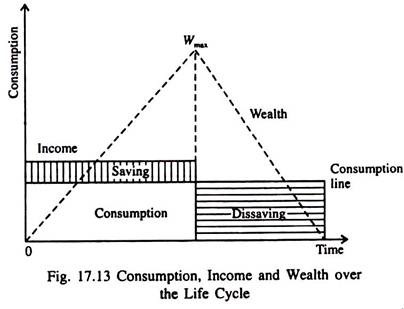

In the late 1950s and early 1960s Franco Modigliani and his co-workers Albert Ando and Richard Brumberg related consumption expenditure to demography. Modigliani, in particular, emphasised that income varies systematically over people’s lives and that saving allows consumers to move income from early years of earning (when income is high) to later years after retirement (when income is low).

This interpretation of household consumption behaviour forms the basis of his life-cycle hypothesis.

The life cycle hypothesis (henceforth LCH) represents an attempt to deal with the way in which consumers dispose of their income over time. In this hypothesis wealth is assigned a crucial role in consumption decisions. Wealth includes not only property (houses, stocks, bonds, savings accounts, etc.) but also the value of future earnings.

Thus consumers visualise themselves as having a stock of initial wealth, a flow of income generated by that wealth over their lifetime and a target (which may be zero) as their end-of-life wealth. Consumption decisions are made with the whole series of financial flows in mind.

Thus, changes in wealth as reflected by unexpected changes in flow of earnings or unexpected movements in asset prices would have an impact on consumers’ spending decisions because they would enhance future earnings from property, labour or both. The theory has empirically testable implications for the relation between saving and age of a person as also for the role of wealth in influencing aggregate consumer spending.

The Hypothesis :

The main reason that an individual’s income varies is retirement. Since most people do not want their current living standard (as measured by consumption) to fall after retirement they save a portion of their income every year (over their entire service period). This motive for saving has an important implication for an individual’s consumption behaviour.

Suppose a representative consumer expects to live another T years, has wealth of W, and expects to earn income Y per year until he (she) retires R years from now. What should be the optimal level of consumption of the individual if he wishes to maintain a smooth level of consumption over his entire life?

The consumer’s lifetime endowments consist of initial wealth W and lifetime earnings RY. If we assume that the consumer divides his total wealth W + RY equally among the T years and wishes to consume smoothly over his lifetime then his annual consumption will be:

C = (W + RY)/T … (5)

This person’s consumption function can now be expressed as

C = (1/T)W + (R/T)Y
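A quick numerical check of equation (5) and its rearranged form may help. The figures used below (wealth of 100,000, income of 50,000, 30 years to retirement, 50 years of remaining life) are hypothetical, as are the function names.

```python
def lifecycle_consumption(wealth, income, years_to_retire, years_to_live):
    """Smooth annual consumption: C = (W + R*Y) / T."""
    return (wealth + years_to_retire * income) / years_to_live

def mpcs(years_to_retire, years_to_live):
    """Return (MPC out of wealth, MPC out of income) = (1/T, R/T)."""
    return 1.0 / years_to_live, years_to_retire / years_to_live

W, Y, R, T = 100_000, 50_000, 30, 50
c = lifecycle_consumption(W, Y, R, T)
mpc_wealth, mpc_income = mpcs(R, T)
print(c, mpc_wealth, mpc_income)
```

With these numbers the consumer spends 32,000 a year: 2,000 comes from wealth (1/T of 100,000) and 30,000 from income (R/T of 50,000), so the two forms of the consumption function agree.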

If all individuals plan their consumption in the same way then the aggregate consumption function is a replica of our representative consumer’s consumption function. To be more specific, aggregate consumption depends on both wealth and income. That is, the aggregate consumption function is

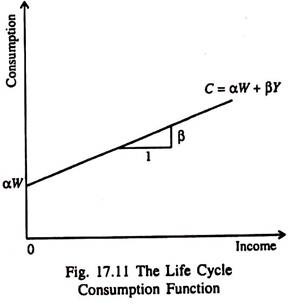

C = αW + βY …(6)

where the parameter α is the MPC out of wealth, and the parameter β is the MPC out of income.

Implications :

Fig. 17.11 shows the relationship between consumption and income in terms of the life cycle hypothesis. For any initial level of wealth W, the consumption function looks like the Keynesian function.

But the intercept αW, which shows what would happen to consumption if income ever fell to zero, is not a constant, as is the term a in the Keynesian consumption function. Instead the intercept αW depends on the level of wealth. If W increases, the consumption line shifts upward in parallel.

So one main prediction of the LCH is that consumption depends on wealth as well as income, as is shown by the intercept of the consumption function.

Solving the consumption puzzle:

The LCH can solve the consumption puzzle in a simple way.

According to this hypothesis, the APC is:

C/Y = α(W/Y) + β … (7)

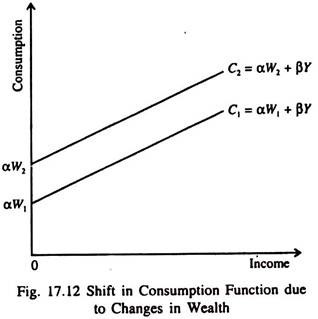

Since wealth does not vary proportionately with income from person to person or from year to year, cross-section data (which show inter-individual differences in income and consumption over short periods) reveal that high income corresponds to a low APC. But in the long run, wealth and income grow together, resulting in a constant W/Y and a constant APC (as time-series show).

For any given level of wealth, as in the short run, the life cycle consumption function looks like the Keynesian consumption function. But in the long run, as wealth increases, the consumption function shifts upward, as shown in Fig. 17.12. This upward shift prevents the APC from falling as income increases.

This means that the short-run consumption-income relation (which takes wealth as constant) will not continue to hold in the long run when wealth increases. This is how the life cycle hypothesis (LCH) solves the consumption puzzle posed by Kuznets’ studies.
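Equation (7) makes this resolution easy to verify with numbers. In the sketch below, the parameter values α = 0.02 and β = 0.6 and the wealth and income figures are hypothetical, chosen only to show the two cases.

```python
def apc(wealth, income, alpha=0.02, beta=0.6):
    """Average propensity to consume: C/Y = alpha * (W/Y) + beta."""
    return alpha * (wealth / income) + beta

# Short run: wealth is fixed while income differs across households or years.
short_run = [apc(100_000, y) for y in (40_000, 50_000, 80_000)]

# Long run: wealth grows in step with income, so W/Y stays at 2.
long_run = [apc(2 * y, y) for y in (40_000, 50_000, 80_000)]

print(short_run)  # APC falls as income rises
print(long_run)   # APC stays constant
```

With wealth held fixed, higher income lowers W/Y and therefore the APC, as cross-section data show. When wealth and income grow together, W/Y is unchanged and the APC is constant, as long time series show.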

Other Predictions :